The web has been made for humans. A website's design ideally guides users towards a specified goal without friction. In reality, HCI¹ principles and UI/UX best practices are often neglected. Universal accessibility, moreover, is often treated as an afterthought. Guides, whether static or dynamic, are an orthogonal approach to helping users around friction; for instance, hardcoded on-page tutorials that sequentially highlight interactive elements and explain what they do. Guides for continuously evolving UIs, however, are high-maintenance and require implementation and deployment effort of their own.

Major advances in AI have made AI agents for the web a sought-after means of UI/UX enhancement: A user gets stuck on a product page? An intelligent agent will pick them up and gently guide them through a purchase. Unlike static usability guides, agentic guidance draws its context from the current web page and a manageable set of instructions.

Check out our gentle introduction to AI agents for the web to get a general idea about web agents.

Develop a Non-Invasive Agent with Webfuse

Webfuse kickstarts development of individual web agents. It is a service that seamlessly serves any website through a low-latency proxy layer which augments the original website with custom Extensions. Powerful Extension APIs and instant deployment on a global infrastructure allow you – or an outside consultant – to develop fully encapsulated agents, independent from the underlying website's source.

Herein, we'll develop a simple assistant agent with Webfuse. Our agent will be able to answer questions about the currently browsed page, and possibly suggest a reasonable interaction. To showcase Webfuse's capabilities, our agent will work on any website. In production, agents can be limited to and refined for certain host or page environments.


Step 1: Set up an Extension Project

Any Extension loaded in a Webfuse Session (the proxy layer) corresponds to a modular project. Webfuse Extensions closely resemble browser extensions in their methodology. We therefore start by setting up a Webfuse Extension project. Since web agents are a common Webfuse use case, there are a handful of agent project templates. We'll start from the minimal agent blueprint template repository.

To get the most out of this tutorial, it is helpful to gain a basic understanding about Webfuse and Extensions first.

The agent blueprint project uses the Labs framework to manage, bundle, and preview Extension files.

You can find the final assistant agent result in a dedicated repository.

The blueprint integrates with the OpenAI API, declaring a GPT agent backend. First and foremost, create an .env file in the project root directory and state your OpenAI API key and a model identifier:

OPENAI_API_KEY=sk-proj-abcdef-012345

MODEL_NAME=gpt-4o

The blueprint project is premised on abstract classes. To integrate with a different LLM API, simply extend the LLMAdapter class.
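The adapter contract itself is not shown in this article, so the following is only a sketch of what extending it could look like. The abstract method `complete`, the `TChatMessage` type, and the `EchoAdapter` subclass are hypothetical names chosen for illustration; the actual LLMAdapter class in the blueprint may declare a different signature.

```typescript
// Hypothetical message shape; the blueprint's real types may differ.
type TChatMessage = { role: "system" | "user" | "assistant"; content: string };

// Hypothetical stand-in for the blueprint's abstract LLMAdapter class.
abstract class LLMAdapter {
    constructor(protected readonly modelName: string) {}

    // Send the conversation to the backend and resolve with the raw reply text.
    abstract complete(messages: TChatMessage[]): Promise<string>;
}

// A toy adapter that needs no network: it echoes the last user message.
// A real adapter would call the respective LLM API inside complete().
class EchoAdapter extends LLMAdapter {
    async complete(messages: TChatMessage[]): Promise<string> {
        const lastUserMessage = [...messages]
            .reverse()
            .find(message => message.role === "user");

        return JSON.stringify({
            assistance: `(${this.modelName}) ${lastUserMessage?.content ?? ""}`,
            interactionPrompt: null
        });
    }
}
```

Keeping the backend behind one abstract method means the rest of the agent logic never needs to know which LLM API answers the request.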

The project directory contains a bunch of Extension files. Essentially, each Extension component relates to a directory in the /src directory:

├─ /src

│ ├─ /background

│ │ ├─ /agent

│ │ │ ├─ Agent.ts

│ │ │ ├─ LLMAdapter.ts

│ │ │ └─ schema.ts

│ │ ├─ background.ts

│ │ └─ SYSTEM_PROMPT.md

│ ├─ /content

│ │ ├─ /augmentation

│ │ │ ├─ content.css

│ │ │ └─ content.html

│ │ ├─ Snapshot.ts

│ │ └─ content.ts

│ ├─ /newtab

│ ├─ /popup

│ └─ /shared

│ ├─ shared.css

│ └─ util.ts

├─ package.json

└─ .env

/newtab

The newtab component corresponds to a web page loaded within every new tab. It will provide a simple form to navigate our agent to any website via URL.

/background

The background component runs persistently in a Session across tabs and page navigation. It will comprise our agent logic, and potentially context memory. Besides, it will wrap an agent-specific LLM communication protocol.

/content

The content component loads within each tab, after each navigation. That is, it refreshes with each new window scope. It will manage the agent UI, and communicate with the background component. Files in the /augmentation subdirectory describe the agent UI augmenting the page's actual content.

/popup

The popup component is a persistent, toggleable UI modal. It can be considered the visual counterpart of the background script. Content (augmentation) UI and popup UI are two alternative approaches for Extension UI. They differ in scope and lifetime. Since our agent will be tied to individual pages, our UI can be bound to single-page content. Agents that require a global UI across tabs and page content need to use a popup UI instead.

Step 2: Write An Agent System Prompt

Depending on the specific backend model, LLM-driven agents draw from a spectrum of common sense. We can, however, increase our agent's utility through a concise system prompt. A system prompt, stored in the file SYSTEM_PROMPT.md, frames the agent's behaviour for a specific task and problem domain. Our task is to provide assistance; our problem domain comprises web pages. Do not hesitate to tweak the example prompt to your needs.

Assign an Agentic Role

## Identity

You are an AI agent that helps human users interacting with website pages. Your goal is to answer questions about a certain web page. Keep a polite and serious tone throughout the conversation.

Define the Model Input

## Input

The user provides you with the serialized DOM of a web page to be described. Subsequently, the user states their question related to that web page.

Define the Model Output

## Output

Respond with a precise answer to the user's question regarding the given web page. Respond only with a JSON object that contains up to two properties: Always include the property `assistance` which has a value that describes the web page. Use the serialized DOM of the web page as a ground truth, but also draw from adjacent facts you already know. Optionally provide the property `interactionPrompt` which has an object value that represents a possible interaction in the web page related to the user's question. If the question does not relate to a very reasonable interaction, omit the interaction prompt property. The value object contains the following properties: `action` corresponds to a user interaction code (e.g., `click`). `selector` is the CSS selector of the interaction target element, e.g., `[data-uid="12"]`. Only consider elements that have the data attribute `data-uid`, and also only provide the CSS selector based on that data attribute as it is definitely unique. And `message` paraphrases the interaction as a request in order to allow the user to decide whether to actually perform the action, e.g., `Do you want to see the FAQs?`.

**Important:** If you cannot provide a helpful answer, do not hesitate to let the user know, e.g., `Sorry, the question is too broad. Could you please phrase your question in a more precise way?`

Provide Communication Examples

## Example

<user_query>

<html>

<head>

<title>Standings | F1</title>

</head>

<body>

<h1>Current Drivers' Standings</h1>

<ol>

<li data-uid="0">1. Oscar Piastri</li>

<li data-uid="1">2. Lando Norris</li>

<li data-uid="2">3. Max Verstappen</li>

</ol>

<a href="/full-standings" data-uid="3">Full standings</a>

</body>

</html>

</user_query>

<user_query>

Who is currently leading in the formula one?

</user_query>

<assistant_response>

``` json

{

"assistance": "The current leader of Formula 1 racing competition is the Australian driver Oscar Piastri.",

"interactionPrompt": {

"action": "click",

"selector": "[data-uid=\"3\"]",

"message": "Do you want to see the full list of standings?"

}

}

```

</assistant_response>
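The example implies a fixed message order: the system prompt, then the serialized DOM, then the question. Below is a minimal sketch of how such a conversation could be assembled before it is handed to the LLM backend. The function name `buildMessages` and the `TPromptMessage` type are illustrative assumptions, not part of the blueprint.

```typescript
// Hypothetical chat message shape, modelled on common chat-completion APIs.
type TPromptMessage = { role: "system" | "user"; content: string };

/**
 * Assemble the conversation in the order the system prompt describes:
 * 1. the system prompt, 2. the serialized DOM, 3. the user's question.
 */
function buildMessages(
    systemPrompt: string,
    snapshot: string,
    question: string
): TPromptMessage[] {
    return [
        { role: "system", content: systemPrompt.trim() },
        { role: "user", content: snapshot },
        { role: "user", content: question.trim() }
    ];
}
```

Sending snapshot and question as two separate user messages mirrors the two `<user_query>` blocks of the example, which keeps the prompt and the actual requests structurally aligned.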

Step 3: Constrain LLM Backend Responses

We already suggested an LLM response message shape in the system prompt. To actually constrain it to a certain shape, the OpenAI API allows us to specify a schema via zod. Let's define a response schema around an assistance message (e.g., “The current leader of Formula 1...”), and optionally a suggested interaction (e.g., click full standings link). The suggested interaction syntactically corresponds to an action type, an action target element CSS selector, and an interaction prompt message. This message is important: first ask the user if they want to have the interaction performed – a best practice of agentic UX.

import { z } from "zod";

export const ResponseSchema = z.object({

assistance: z.string(),

interactionPrompt: z.object({

action: z.enum([

"click" // extensible

]),

selector: z.string(),

message: z.string()

}).nullable()

});

For our example, we define only a click action. The enumeration, however, can easily be extended with other actions available on the Webfuse Automation API, such as scroll.
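At runtime, a reply still arrives as text, sometimes wrapped in a Markdown code fence as in the prompt example. The following dependency-free sketch mirrors the schema as a hand-written check; in the project itself, zod's `ResponseSchema.safeParse(...)` would take its place. The names `TAgentResponse` and `parseAgentResponse` are assumptions made for this example.

```typescript
type TInteractionPrompt = { action: "click"; selector: string; message: string };
type TAgentResponse = { assistance: string; interactionPrompt: TInteractionPrompt | null };

function isRecord(value: unknown): value is Record<string, unknown> {
    return typeof value === "object" && value !== null;
}

/** Parse raw LLM output; return null if it does not match the expected shape. */
function parseAgentResponse(raw: string): TAgentResponse | null {
    // Strip an optional Markdown code fence around the JSON payload.
    const json = raw
        .replace(/^\s*`{3}\s*(?:json)?/i, "")
        .replace(/`{3}\s*$/, "");

    let data: unknown;
    try {
        data = JSON.parse(json);
    } catch {
        return null;
    }
    if (!isRecord(data)) return null;

    const { assistance, interactionPrompt } = data;
    if (typeof assistance !== "string") return null;

    // Treat a missing or null interaction prompt as "no suggestion".
    if (interactionPrompt === undefined || interactionPrompt === null) {
        return { assistance, interactionPrompt: null };
    }
    if (!isRecord(interactionPrompt)) return null;

    const { action, selector, message } = interactionPrompt;
    if (action !== "click" || typeof selector !== "string" || typeof message !== "string") {
        return null;
    }
    return { assistance, interactionPrompt: { action: "click", selector, message } };
}
```

Returning null instead of throwing lets the caller fall back to a generic apology message whenever the model deviates from the agreed shape.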

Interaction is targeted via a unique CSS selector. To be able to rely on selectors, even after DOM preprocessing such as downsampling, we assign every interactive element in the DOM a unique data-uid attribute upon document load.
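How the identifiers get assigned is not shown in this article; a minimal sketch of the idea follows. The `ElementLike` interface and the `assignUids` function are hypothetical, and the counter-based scheme is an assumption chosen for simplicity.

```typescript
// Minimal subset of the DOM Element interface this sketch relies on.
interface ElementLike {
    hasAttribute(name: string): boolean;
    setAttribute(name: string, value: string): void;
}

/**
 * Tag each element with a sequential identifier attribute.
 * Already tagged elements keep their identifier, so selectors stay stable
 * if the function runs again after the DOM has changed.
 * Returns the next free identifier; pass it as nextUid on the next run
 * to keep identifiers unique.
 */
function assignUids(
    elements: ArrayLike<ElementLike>,
    attribute: string,
    nextUid = 0
): number {
    Array.from(elements).forEach(element => {
        if (element.hasAttribute(attribute)) return;
        element.setAttribute(attribute, String(nextUid++));
    });
    return nextUid;
}
```

In the content component, this could be called with the page's interactive elements, e.g., the result of `document.querySelectorAll("a, button, input, select, textarea")`, and the attribute name that the system prompt refers to.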

Step 4: Take a Web Page Snapshot

A web page represents an instance of our agent's problem domain, and hence key LLM context. The serialisation of a web page for an LLM is known as a snapshot. There are different techniques to take web page snapshots. We benefit from the Webfuse Automation API, which provides a powerful DOM snapshot method: it stringifies the latest state of the currently browsed page's DOM. By additionally specifying the modifier downsample, we have the DOM snapshot reduced in size while preserving relevant UI features.

// Take a web page snapshot via the Webfuse Automation API

const snapshot = browser.webfuseSession

.automation

.take_dom_snapshot({

rootSelector: "body",

modifier: "downsample"

});

Read DOM Snapshots vs Screenshots as Web Agent Context to learn more about web page snapshots.

Read DOM Downsampling for LLM-Based Web Agents to dive deep with optimised snapshot techniques.
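Even a downsampled snapshot can exceed what a model accepts or what a single request should cost, so it can be worth checking its size before sending it. The sketch below uses the rough rule of thumb of about four characters per token, which is an approximation that may be off considerably for markup and is no substitute for a real tokenizer. The function names and any budget value are illustrative assumptions.

```typescript
// Rough heuristic: about four characters per token. Not a real tokenizer.
const CHARS_PER_TOKEN = 4;

/** Estimate the number of tokens a text occupies in the LLM context. */
function estimateTokens(text: string): number {
    return Math.ceil(text.length / CHARS_PER_TOKEN);
}

/** Decide whether a snapshot fits a given token budget. */
function fitsBudget(snapshot: string, maxTokens: number): boolean {
    return estimateTokens(snapshot) <= maxTokens;
}
```

If a snapshot does not fit, one option is to take it again with a narrower rootSelector than "body".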

Step 5: Implement Agent Augmentation

As of now, we have all the vital agent parts ready: an LLM communication protocol, web page snapshot capabilities, and an augmenting agent UI. In the final development step, we need to capture input, trigger the agent cycle, and visualise output. All of this is tied to the currently browsed window scope. That is, we'll work on the content component.

<section id="stage-1" class="active">

<p class="feedback"></p>

<button id="audio" onclick="toggleRecord()">Toggle Microphone</button>

</section>

<section id="stage-2">

<p class="feedback"></p>

<button type="button" onclick="reset()">Act</button>

</section>

<section id="stage-3">

<p class="feedback"></p>

<button type="button" onclick="performPendingInteraction()">Submit</button>

</section>

We use a UI that evolves across different stages: 1. capture input, 2. display assistance message, and 3. display interaction prompt.

/**

* Update the stage-based augmentation UI.

* Hide the current stage section element, activate the new stage section element.

* Update the feedback message.

*/

async function feedbackAugmentation(stage: "1" | "2" | "3", text: string) {

const feedbackSection = window.AUGMENTATION

.querySelector(`section#stage-${stage}`);

window.AUGMENTATION.querySelector("section.active")

.classList

.remove("active");

feedbackSection

.classList

.add("active");

feedbackSection

.querySelector(".feedback")

.textContent = text;

await new Promise(resolve => setTimeout(resolve, 200));

}

/**

* Consult the agent, i.e., trigger an agent question-and-answer cycle.

* A call dispatches a message to the background component.

* A call result will arrive to the message handler as 'agency-response'.

*/

async function consultAgent(question: string) {

browser.runtime

.sendMessage({

target: "background",

cmd: "agency-request",

data: {

snapshot: await snapshot,

question

} as TAgentMessage

});

}

/**

* Record a question by speech data.

* Speech recognition uses the limited browser SpeechRecognition API:

* https://developer.mozilla.org/en-US/docs/Web/API/SpeechRecognition

* Consider an alternative API, and also fallback means of input.

*/

let recognition;

let isRecording = false;

let recordTimeout;

window.toggleRecord = async function() {

clearTimeout(recordTimeout);

window.AUGMENTATION

.querySelector("#audio")

.classList

.toggle("active");

isRecording = !isRecording;

if(!isRecording) {

recognition.stop();

return;

}

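// Chromium-based browsers expose the constructor with a webkit prefix

recognition = new (window.SpeechRecognition || window.webkitSpeechRecognition)();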

recognition.continuous = true;

recognition.interimResults = false;

recognition.lang = "en-US";

recognition.onresult = async e => {

const transcript = Array.from(e.results)

.map(result => result[0].transcript)

.join("");

consultAgent(transcript);

};

recognition.start();

recordTimeout = setTimeout(() => window.toggleRecord(), 10000);

}

/**

* Drive the suggested interaction via the Webfuse Automation API.

* The latest suggested interaction is received in the message handler.

* The latest suggested interaction is stored as [pendingInteraction].

*/

window.performPendingInteraction = async function() {

switch(pendingInteraction.action) {

case "click":

await browser.webfuseSession.automation.left_click(pendingInteraction.selector);

break;

}

}
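As more action types are added, the switch statement can be replaced by a lookup table. The sketch below assumes a hypothetical `AutomationLike` interface that exposes only the `left_click` method used above, and a hypothetical `performInteraction` helper. It also guards against a missing pending interaction.

```typescript
type TInteraction = { action: string; selector: string; message: string };

// Only the method used in this article; injected so the logic can be tested.
interface AutomationLike {
    left_click(selector: string): unknown;
}

type TActionHandler = (automation: AutomationLike, selector: string) => unknown;

// Extend this table when the response schema gains further action types.
const ACTION_HANDLERS = new Map<string, TActionHandler>([
    ["click", (automation: AutomationLike, selector: string) => automation.left_click(selector)]
]);

/** Perform the interaction; resolve to false if there is nothing to perform. */
async function performInteraction(
    automation: AutomationLike,
    interaction: TInteraction | null | undefined
): Promise<boolean> {
    if (!interaction) return false;

    const handler = ACTION_HANDLERS.get(interaction.action);
    if (handler === undefined) return false;

    await handler(automation, interaction.selector);
    return true;
}
```

In the content component, the call could read `performInteraction(browser.webfuseSession.automation, pendingInteraction)`.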

Step 6: Set up Extension Component Communication

By design, all Extension components are encapsulated from each other, since they differ in scope and lifetime. The browser communication API provides a single interface for cross-component communication. We set up a simple message flow to trigger an agent (question-and-answer) cycle:

- Listen for agency requests in the background component.

- Listen for agency responses in the content component.

// background.ts

browser.runtime.onMessage

.addListener(async (message: TAgentMessage) => {

if(message.target !== "background") return;

switch(message.cmd) {

case "agency-request": {

const data: TResponseSchema = await agent.consult(

message.data?.snapshot,

message.data?.question

);

browser.tabs

.sendMessage(0, {

target: "content",

cmd: "agency-response",

data

});

}

}

});

// content.ts

let pendingInteraction;

browser.runtime.onMessage

.addListener(async message => {

if(message.target !== "content") return;

switch(message.cmd) {

case "agency-response": {

await feedbackAugmentation("2", message.data?.assistance);

pendingInteraction = message.data?.interactionPrompt;

pendingInteraction

&& setTimeout(() => {

if(!document.querySelector(pendingInteraction.selector)) return;

feedbackAugmentation("3", pendingInteraction.message);

}, 2000);

break;

}

}

});
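Both listeners follow the same pattern: ignore messages addressed to another component, then branch on the command. A sketch of how that pattern could be factored out follows; the `TMessage` type and the `createRouter` helper are illustrative assumptions and not the blueprint's actual types.

```typescript
type TMessage = { target: "background" | "content"; cmd: string; data?: unknown };
type THandler = (data: unknown) => void | Promise<void>;
type THandlers = { [cmd: string]: THandler | undefined };

/**
 * Create a message listener for one component.
 * Messages for other targets and unknown commands are silently ignored.
 */
function createRouter(target: TMessage["target"], handlers: THandlers) {
    return async (message: TMessage): Promise<void> => {
        // Not addressed to this component
        if (message.target !== target) return;

        // Dispatch to the handler registered for the command, if any
        await handlers[message.cmd]?.(message.data);
    };
}
```

The returned function has the shape of the listeners shown above, so it could be passed to `browser.runtime.onMessage.addListener(...)` with one handler per command.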

Step 7: Deploy Agent with Webfuse

We highlighted all relevant aspects of developing an agent Extension for Webfuse. You can find our final, ready-to-install Extension in a dedicated repository. Last but not least, let's build and install our Extension to see it in action.

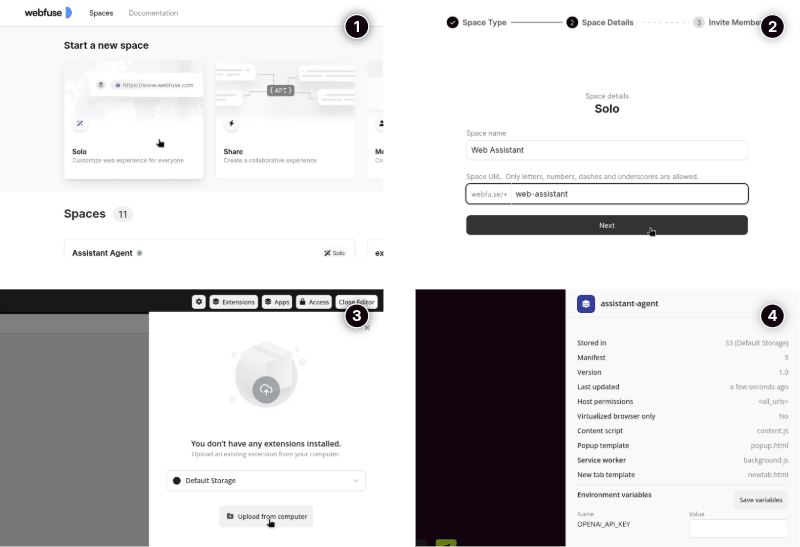

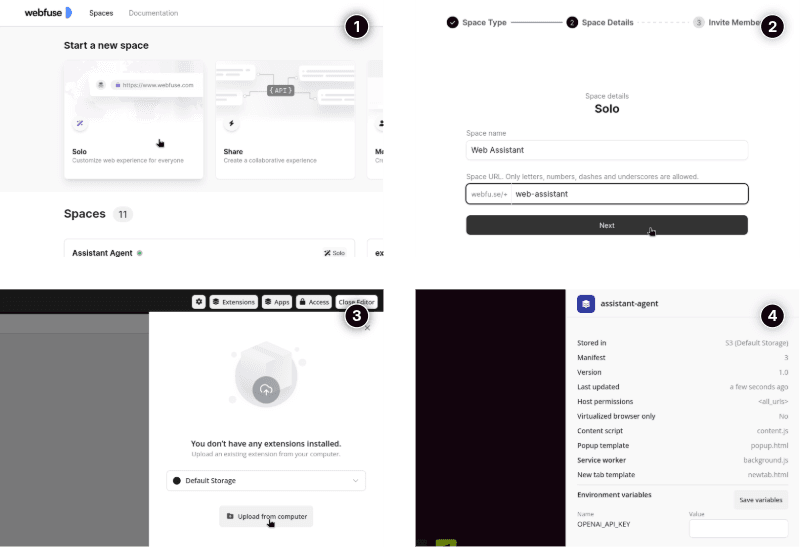

If you have not yet signed up to Webfuse, sign up for an account. Using the Studio dashboard, create a Solo Space (a basic web application proxy layer).

Build Your Agent Extension

npm install

npm run bundle

To prototype the agent Extension's UI, use the npm run bundle:debug command, which spins up a local preview environment.

Upload Your Extension to the Webfuse Space

Open a Session of the previously created Space. Use the Extensions tab beneath the toolbar to upload the bundled Extension directory, which was emitted as /dist.

If you have trouble uploading your agent Extension, have a look at the Official Docs.

Share Your Agent-Enhanced Website

To use your agent, navigate to the Space URL, which you specified upon Space creation (something like https://webfuse.com/+web-assistant).

For a seamless user experience, we recommend hiding the Session UI in the Session settings. If you intend to use the agent on a specific website, specify the corresponding start page URL. In a production environment, you could wrap the Webfuse Space URL with a URL under your domain (e.g., best-insurance.com/assisted/).

Best Practices

- Move the LLM adapter (LLMAdapter) behind a server endpoint to keep the LLM API authentication key secret.

- Only serve the agent-enhanced website URL to novice users or once you detect friction.

- Integrate with existing AI APIs, such as for speech recognition or text-to-speech, to build with the state-of-the-art.
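To illustrate the first recommendation: instead of calling the LLM API from within the Extension, the background component could call an endpoint on a server you control, which holds the API key and forwards the request. The sketch below shows only the client side. The endpoint URL, the request body shape, and the `createRemoteConsult` helper are hypothetical.

```typescript
// Minimal subset of the fetch API used here, injected so it can be mocked.
type TFetchLike = (
    url: string,
    init: { method: string; headers: Record<string, string>; body: string }
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

/**
 * Create a consult function that delegates to a server endpoint.
 * The API key never reaches the browser; it lives on the server only.
 */
function createRemoteConsult(fetchFn: TFetchLike, endpointUrl: string) {
    return async (snapshot: string, question: string): Promise<unknown> => {
        const response = await fetchFn(endpointUrl, {
            method: "POST",
            headers: { "Content-Type": "application/json" },
            body: JSON.stringify({ snapshot, question })
        });
        if (!response.ok) {
            throw new Error(`Agent endpoint responded with status ${response.status}`);
        }
        return response.json();
    };
}
```

The resolved value is typed as unknown on purpose: it should still pass through the response schema before the content component trusts it.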

Helpful Resources

- Agent Blueprint repository

- Assistant Agent repository

- About Automation with Webfuse

- Webfuse Automation API

- Webfuse Labs – A framework that facilitates web extension development

Footnotes

- HCI stands for Human-Computer Interaction. It is the scientific field concerned with how humans interact with computer systems, and how to design effective, efficient, and satisfactory human-centric computer interfaces.
