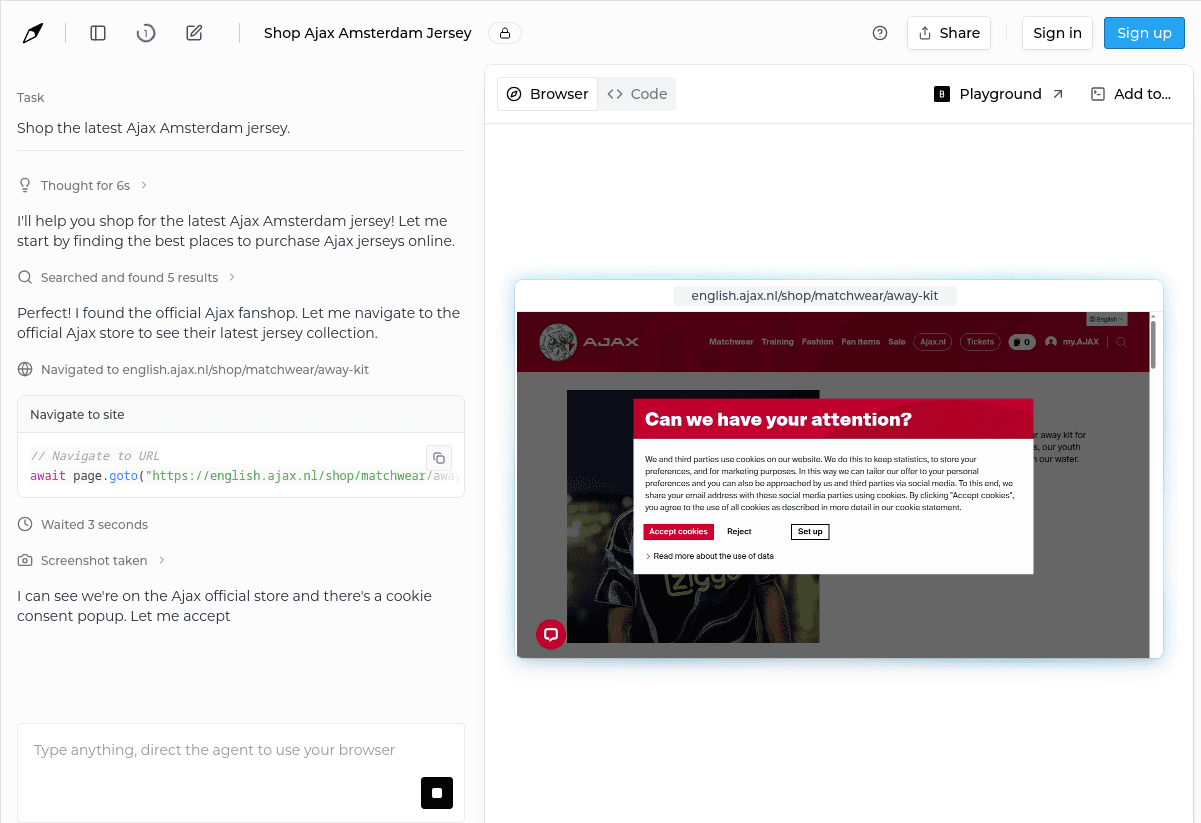

In no time, AI has become a natural part of modern web interfaces. AI agents for the web have recently attracted considerable hype, sparked by the release of Operator (OpenAI), Director (Browserbase), and Browser Use. Automating arbitrary web-based tasks, such as booking the cheapest flight from Berlin to Amsterdam, is now within reach.

What is a Web Agent?

For starters, let us break down the term web AI agent: An agent is an entity that autonomously acts on behalf of another entity. An artificially intelligent agent is an application that acts on behalf of a human. In contrast to non-AI computer agents, it solves complex tasks with at least human-grade effectiveness and efficiency. For a human-centric web, web agents have deliberately been designed to browse the web in a human fashion – through UIs rather than APIs.

The Role of Frontier LLMs

Web agents have long been a vague aspiration. AI agents used to rely on complete models of a problem domain in order to allow (heuristic) search through problem states. Such models would comprise the problem world (e.g., a chessboard), actors (pawns, rooks, etc.), possible actions per actor (a rook moves straight), and constraints (among others, at most one piece per field). For a web agent, the problem domain is a heterogeneous space of web application UIs: how to understand a web page, and how to interact with it to solve the declared task?

Frontier LLMs disrupted the AI agent world: explicit problem domain models, which are infeasible to build for many domains, can now be replaced by an LLM. The LLM thereby acts as an instantaneous domain model backend that can be consulted with a twofold context: the serialised problem state, such as a chess position code (“... e4 e5 2. Nc3 f5”), and the respective task (“What is the best move for white?”). For web agents, the problem state corresponds to the runtime state of the currently browsed web application, serialised, for instance, as a screenshot.
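The twofold context can be sketched as a small helper that pairs a serialised state with a task. This is a minimal sketch, not a specific LLM API: the `ChatMessage` shape, the `composeContext` function, and the instruction wording are assumptions made for illustration.

```typescript
// Hypothetical message shape; real LLM APIs differ in detail.
interface ChatMessage {
  role: "system" | "user";
  content: string;
}

// Pair the two pieces of context: serialised problem state and task.
function composeContext(serialisedState: string, task: string): ChatMessage[] {
  return [
    {
      role: "system",
      content: "You are given a problem state and a task. Solve the task for that state.",
    },
    {
      role: "user",
      content: `STATE:\n${serialisedState}\n\nTASK:\n${task}`,
    },
  ];
}

// Usage with the chess example from the text (position code kept as given).
const messages = composeContext(
  "... e4 e5 2. Nc3 f5",
  "What is the best move for white?",
);
```

For a web agent, the `serialisedState` argument would carry a snapshot of the web page instead of a chess position code.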

Generalist Web Agents

Generalist web agents are supposed to solve arbitrary tasks through a web browser. Web-based tasks can be as diverse as “Find a picture of a cat.” and “Book the cheapest flight from Berlin to Amsterdam tomorrow afternoon (business class, window seat).” In reality, generalist agents still fail at uncommon or overly precise tasks. While they have been critically acclaimed, they mainly serve as early proofs of concept. Tasks that are indeed solvable with a generalist agent promise great results with a corresponding specialist agent.

Specialist Web Agents

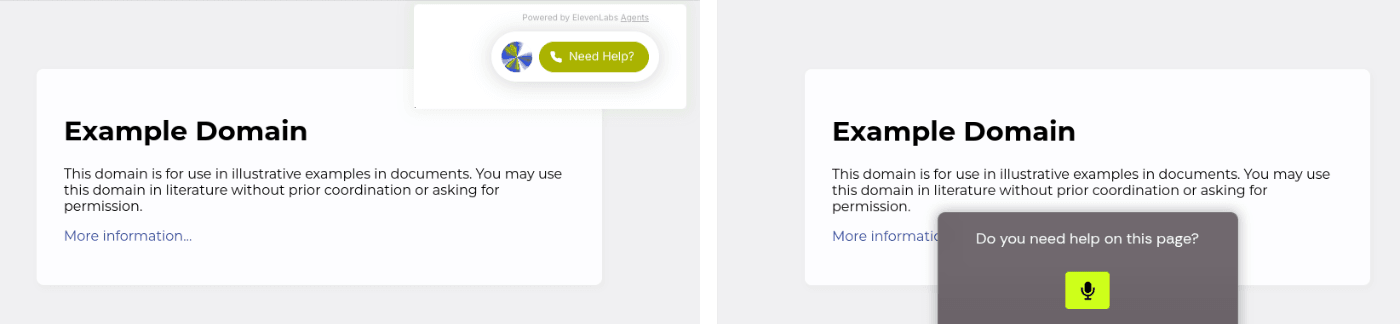

Unlike generalist agents, specialist web agents are constrained to a certain task and application domain. Specialist agents account for the major share of commercial value. The most prominent examples are modal chat agents that provide users with on-page help. Picture a little floating widget that users can chat with via text or voice input. In most cases, in fact, the term web (AI) agent refers to chat agents. Chat agents – text or voice – can be implemented on top of virtually any existing website. Frontier LLMs provide a lot of common sense out of the box. Moreover, a system prompt can be leveraged to improve specialist agent quality for the respective problem domain.

How Does a Web Agent Work?

LLM-based web agents are built on a more or less uniform architecture. The agent application acts as a mediator between a web browser (environment) and the LLM backend (model).

The Agent Lifecycle

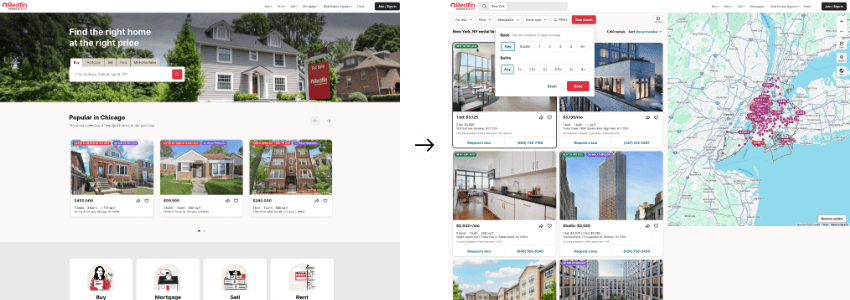

To reduce a user's cognitive load, solving a web-based task is usually chunked into a sequence of UI states. Consider looking for rental apartments on redfin.com: In the first step, you specify a location. Only subsequently are you provided with a grid of available apartments for that location.

Web agent logic is iterative, owing both to the sequential web interaction model and to the conversational agent interaction model. When browsing the web, human and computer agents alike act as users. Accordingly, Norman's well-known Seven Stages of Action, which hierarchically model the human cognition cycle, transfer to the web agent lifecycle. For each UI state in the web browser (environment) and the web-based task (action intention), decide where to click, type, etc. (action planning), and perform those actions (action execution). Afterwards, perceive, interpret, and evaluate the results of those actions in the web browser (state). As long as there is a mismatch between the evaluated state and the declared goal state, repeat that cycle. If required, prompt the user for more information.
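The cycle can be sketched as a loop over perceive, evaluate, plan, and execute. This is a minimal sketch under stated assumptions: the `Environment` and `Model` interfaces, the `runAgent` function, and the `maxCycles` cap are hypothetical names for illustration, not part of any particular agent framework.

```typescript
// All names are hypothetical and only illustrate the lifecycle.
interface Action {
  thought: string;
  action: "click" | "scroll" | "type";
  cssSelector: string;
  data?: string;
}

interface Environment {
  snapshot(): Promise<string>;            // perceive: serialise the current UI state
  perform(action: Action): Promise<void>; // execute: click, scroll, or type
}

interface Model {
  plan(task: string, state: string): Promise<Action[]>;          // action planning
  isGoalReached(task: string, state: string): Promise<boolean>;  // evaluation
}

async function runAgent(
  task: string,
  env: Environment,
  model: Model,
  maxCycles = 10, // assumed safety cap so the loop always terminates
): Promise<boolean> {
  for (let cycle = 0; cycle < maxCycles; cycle++) {
    const state = await env.snapshot();
    if (await model.isGoalReached(task, state)) {
      return true; // evaluated state matches the declared goal state
    }
    const actions = await model.plan(task, state);
    for (const action of actions) {
      await env.perform(action);
    }
  }
  // Evaluate once more after the last cycle; on failure, a real agent
  // might prompt the user for more information instead of giving up.
  return model.isGoalReached(task, await env.snapshot());
}
```

Injecting `Environment` and `Model` keeps the loop independent of a concrete browser or LLM backend, which also makes it easy to exercise with stubs.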

Web Context for LLMs

According to the Seven Stages of Action, the gap between an agent and the environment is known as the gulf of execution. In real-world scenarios, it can be difficult to know how to act in the environment with respect to a planned sequence of actions (e.g., how to actually open the trunk of a new car?). Arguably, web agents face a novel gulf of intention towards the action planning stage: how to serialise a currently browsed web page's runtime state for LLMs? Snapshot is a more comprehensive term for the serialisation of a web page's current runtime state. Screenshots, for instance, are a type of snapshot that closely resembles how humans perceive a web page at a given point in time. But are they as accessible to LLMs?

Agentic UI Interaction

With a suitable set of well-defined actuation methods, web agents are able to close the gulf of execution quite well. HTML element types strongly afford a certain action (e.g., click a button, type into a field). Below is what an actuation schema presented to the LLM backend could look like:

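```typescript
// A type alias is required here: an interface cannot be assigned an array type.
type ActuationSchema = {
  // Short rationale for the action
  thought: string;
  action: "click" | "scroll" | "type";
  // Target element of the action
  cssSelector: string;
  // Optional payload, e.g. the text to type
  data?: string;
}[];
```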

And a suggested actions response could, in turn, look as follows:

```json
[
  {
    "thought": "Scroll newsletter cta into view",
    "action": "scroll",
    "cssSelector": "section#newsletter"
  },
  {
    "thought": "Type email address to newsletter cta",
    "action": "type",
    "cssSelector": "section#newsletter > input",
    "data": "user@example.org"
  },
  {
    "thought": "Submit newsletter sign up",
    "action": "click",
    "cssSelector": "section#newsletter > button"
  }
]
```
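Since an LLM response is untrusted text, it is reasonable to validate it against the schema before any action touches the page. The following is a minimal sketch of such a check; the `parseSuggestedActions` helper and the rule that a `type` action requires `data` are assumptions for illustration, not part of a specific library.

```typescript
type ActionName = "click" | "scroll" | "type";

interface SuggestedAction {
  thought: string;
  action: ActionName;
  cssSelector: string;
  data?: string;
}

const ACTION_NAMES: readonly string[] = ["click", "scroll", "type"];

// Hypothetical helper: parse the response text and reject anything off-schema.
function parseSuggestedActions(responseText: string): SuggestedAction[] {
  const parsed: unknown = JSON.parse(responseText);
  if (!Array.isArray(parsed)) {
    throw new Error("Expected a JSON array of actions");
  }
  return parsed.map((item: unknown, index: number): SuggestedAction => {
    if (typeof item !== "object" || item === null) {
      throw new Error(`Action ${index} is not an object`);
    }
    const { thought, action, cssSelector, data } = item as Record<string, unknown>;
    if (typeof thought !== "string" || typeof cssSelector !== "string") {
      throw new Error(`Action ${index} lacks a thought or cssSelector string`);
    }
    if (typeof action !== "string" || ACTION_NAMES.indexOf(action) === -1) {
      throw new Error(`Action ${index} has an unknown action name`);
    }
    const result: SuggestedAction = {
      thought,
      action: action as ActionName,
      cssSelector,
    };
    if (typeof data === "string") {
      result.data = data;
    } else if (data !== undefined) {
      throw new Error(`Action ${index} has non-string data`);
    }
    // Assumed rule: typing without text makes no sense.
    if (result.action === "type" && result.data === undefined) {
      throw new Error(`Action ${index} is a "type" action without data`);
    }
    return result;
  });
}
```

Only actions that pass this check would then be handed to the code that performs clicks, scrolls, and keystrokes in the browser.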

Function Calling and the Model Context Protocol represent two approaches to outsourcing an explicit actuation model: server-side and client-side, respectively.

Agentic UI Augmentation

An agent represents yet another feature to integrate with an application and its UI. Discoverability and availability, however, are among the most fundamental requirements of a web agent. When a user experiences UI/UX friction, the agent, at the very least, should remain interactive. For that reason, a scrolling modal web agent UI has been the go-to approach, that is, a little floating widget on top of the underlying application's UI. It comes with a major advantage: the agent application can be decoupled from the underlying, self-contained application.

How to Build a Web Agent?

Believe it or not: enhancing an existing web application with a purposeful agent is low-hanging fruit. The evolving agent ecosystem provides you with a spectrum of solutions: instantly use a pre-compiled agent, tweak a templated agent, or develop an agent from scratch. Either way, LLMs and web browsers exist for reuse, which boils agent development down to LLM context engineering and UI augmentation.

Develop a Web Agent

Opting for a pre-compiled agent does not necessarily involve any actual development step. Instead, pre-compiled agents allow for high-level configuration through an agent-as-a-service provider's interface. Popular agent-as-a-service providers include ElevenLabs and Intercom. Serviced agents hide LLM communication, and potentially interaction with a web browser, behind the configuration interface.

Using a templated agent resembles the agent-as-a-service approach on a lower level. Openly sourced from a code repository, templated agents allow for any kind of development tweak. Conveniently, agent templates shortcut integration with LLM APIs and web browser APIs. Using a templated agent is usually the preferable, best-of-both-worlds approach: common and best-practice code snippets are available from the beginning, but everything can be customised as desired.

Of course, developing an agent from scratch is always an option. It is preferable whenever agent requirements deviate to a large extent from what exists in the service or template landscape.

Deploy a Web Agent

When web agent code lives side by side with the augmented application's code, agent deployment is covered by a generic pipeline, something like: linting and formatting agent code, transpiling and bundling agent modules, testing the agent, hosting the agent bundle, and triggering post-deployment events. In that case, an agent represents a modular feature component in the application, no different from, for instance, a sign-up component.

Web agent source code right inside the application codebase comes at a cost:

- Agent developers can manipulate the source code of the underlying application.

- Agent functionality could introduce side effects on the underlying application.

- Agent changes require deployment of the entire application.

Best Practices of Agentic UX

When designing user experiences for agent-enhanced applications, there are a few things to consider:

- Stream input and output to reduce latency

  LLMs (re-)introduce noticeable communication round-trip time. To reduce wait time for the human user, stream chunks of data whenever they are available.

- Provide fine-grained feedback to bridge high latency

  Human attention is sensitive to several seconds of [system response time](https://www.nngroup.com/articles/response-times-3-important-limits/). Periodically provide agent _thoughts_ as feedback to perceptibly break down round-trip time.

- Always prompt the human user for consent to perform critical actions

  Some actions in a web application lead to irreversible or significant changes of state. Never have the agent perform such actions on behalf of the user without explicitly asking for permission.
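The consent practice can be sketched as a gate in front of action execution. This is a minimal sketch under assumptions: the `critical` flag, the `performWithConsent` function, and the injected `askConsent` callback are hypothetical, and how an action gets classified as critical is left to the agent developer.

```typescript
interface PlannedAction {
  thought: string;
  action: "click" | "scroll" | "type";
  cssSelector: string;
  critical?: boolean; // assumption: set for irreversible or significant actions
}

// Hypothetical callback that asks the user, e.g. via the chat widget.
type ConsentPrompt = (action: PlannedAction) => Promise<boolean>;

// Performs actions in order; stops at the first critical action the user declines.
async function performWithConsent(
  actions: PlannedAction[],
  perform: (action: PlannedAction) => Promise<void>,
  askConsent: ConsentPrompt,
): Promise<PlannedAction[]> {
  const performed: PlannedAction[] = [];
  for (const action of actions) {
    if (action.critical && !(await askConsent(action))) {
      break; // later actions may depend on the declined one
    }
    await perform(action);
    performed.push(action);
  }
  return performed;
}
```

Stopping the whole sequence, rather than skipping only the declined action, is a conservative design choice: subsequent steps were planned on the assumption that the critical step succeeds.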

Non-Invasive Web Agents with Webfuse

Webfuse is a configurable web proxy that lets you augment any web application. As pictured, web agents represent highly self-contained applications. Moreover, web agents and underlying applications communicate at runtime in the client. This opens up opportunities to overcome the above-mentioned drawbacks with Webfuse: develop web agents with a sandbox extension methodology, and deploy them through the low-latency proxy layer. On demand, seamlessly serve users with your agent-enhanced website. Benefit from information hiding, safe code, and fewer deployments.
