Frontier LLM capabilities have sparked an evolution of web AI agents. Provided with a website's current runtime state, an LLM can suggest which actions to take on that website in order to solve a given task, such as “Book the next flight to Amsterdam” on skyscanner.com. First things first: “a website's current runtime state” is quite an unwieldy term. We prefer the metaphor of a snapshot. The pressing question, however, is: how do we compile such a snapshot?

Want to get started with web agents? Read our gentle introduction to AI agents for the web.

A Matter of Serialisation

At the data level, compiling a website snapshot is a serialisation problem. Serialisation is the process of converting dynamic state into a static, symbolic representation. Such a process is required whenever runtime state needs to be stored to disk or transmitted across a network. The receiving end usually seeks to recreate the dynamic state from the serialised data. For example, JSON is a serialisation format for simple JavaScript objects:

{
  "first": 1,
  "second": 2
}

// Import attributes tell the runtime to load the file as JSON.
import operands from "./operands.json" with { type: "json" };

const sum = operands.first + operands.second;

console.log(`${operands.first} + ${operands.second} = ${sum}`);

Recreating dynamic state from serialised data need not, however, be the exact inverse of the serialisation procedure. We neither know exactly how LLMs interpret input, nor is there a universal language for web application runtime state. That is, there is no obvious snapshot representation to choose when developing web agents. How effective and efficient a given snapshot technique and artefact is remains subject to comparative evaluation.
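To make the point about inversion concrete, here is a minimal sketch of a JSON round trip. The `state` object and its field names are made up for illustration. Serialising and then parsing does not reproduce the original runtime state: the `Date` comes back as a string, and the function is dropped altogether.

```javascript
// Hypothetical runtime state; the field names are illustrative only.
const state = {
  count: 2,
  updatedAt: new Date(0),   // a Date object at runtime
  onClick: () => "clicked", // behaviour, not data
};

// Serialise: dynamic state -> static, symbolic representation.
const serialised = JSON.stringify(state);

// Deserialise: rebuild state from the static representation.
const restored = JSON.parse(serialised);

console.log(serialised);                // {"count":2,"updatedAt":"1970-01-01T00:00:00.000Z"}
console.log(typeof restored.updatedAt); // string (no longer a Date)
console.log("onClick" in restored);     // false (the function did not survive)
```

A snapshot handed to an LLM is in a similar position: whatever the serialisation leaves out is simply not part of what the model gets to see.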

GUI Snapshots: Glorified Screenshots

Evidently, screenshots resemble how humans perceive the graphical user interface (GUI) of a web application at a given point in time. In the context of LLM-based web agents, and for consistency, we refer to a screenshot as a GUI snapshot.

Not only are GUI snapshots an obvious type of snapshot artefact, LLM vision APIs also subsidise image input. On the LLM backend, image input is pre-processed to reduce data size significantly. As a result, images cost relatively few input tokens: a typical full-page screenshot spanning five full HD viewports costs only a four-figure number of tokens. Let's consider these costs a soft baseline for snapshot techniques and artefacts.
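The arithmetic behind such a figure can be sketched as follows. The cost model is an assumption: it is loosely modelled on the tile-based accounting that OpenAI has documented for some of its vision models, and every default below (`maxSide`, `shortSide`, `tileSize`, `tokensPerTile`, `baseTokens`) should be read as a placeholder. Other providers and models count differently.

```javascript
// Assumed tile-based cost model; all defaults are placeholders, not a spec.
function estimateImageTokens(width, height, {
  maxSide = 2048,      // image is first scaled down to fit maxSide x maxSide
  shortSide = 768,     // then scaled down until the shortest side fits this
  tileSize = 512,      // cost is counted per tileSize x tileSize tile
  tokensPerTile = 170,
  baseTokens = 85,
} = {}) {
  // Step 1: fit into the bounding square (never upscale).
  let scale = Math.min(1, maxSide / Math.max(width, height));
  let w = Math.round(width * scale);
  let h = Math.round(height * scale);

  // Step 2: shrink further if the shortest side is still too long.
  scale = Math.min(1, shortSide / Math.min(w, h));
  w = Math.round(w * scale);
  h = Math.round(h * scale);

  // Step 3: count the tiles needed to cover the scaled image.
  const tiles = Math.ceil(w / tileSize) * Math.ceil(h / tileSize);
  return { width: w, height: h, tokens: baseTokens + tokensPerTile * tiles };
}

// Full-page screenshot: five stacked full HD viewports (1920 x 5400).
console.log(estimateImageTokens(1920, 5 * 1080));
// { width: 728, height: 2048, tokens: 1445 }
```

Under these assumptions the screenshot is shrunk to 728 × 2048 pixels and costs 1,445 tokens, which is indeed a four-figure number.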

Grounded GUI Snapshots

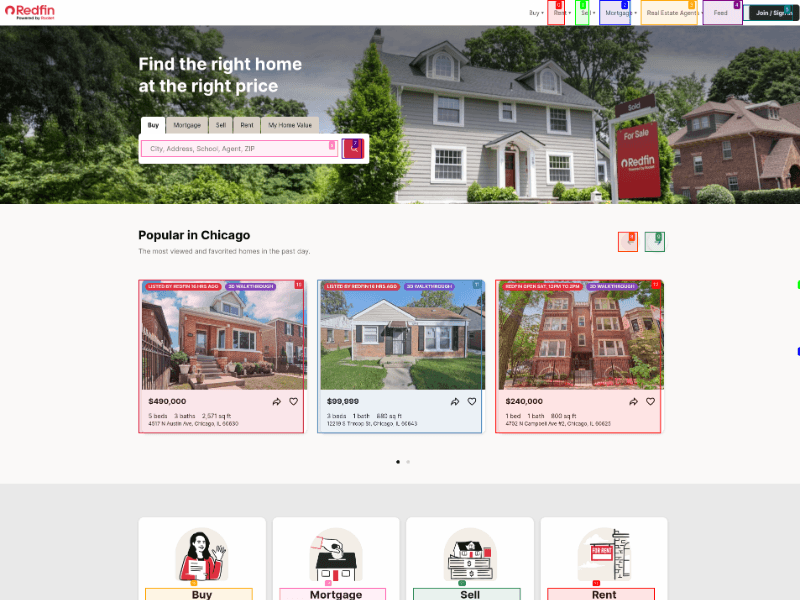

A downside of subsidiary pre-processing inherent to LLM vision APIs is that it irreversibly affects image dimensions. This fact disables pixel precision when suggesting action target elements. Grounding represents a measure of adding visual cues to a GUI (snapshot) that can be referred to by relative means. Browser Use, for instance, assigns to each visible interactive element in a page a unique numerical identifier. Before taking a screenshot, it adds coloured bounding boxes supplemented with the respective identifier to the GUI. The LLM can therein target elements by identifier, which allows relative backtracing.

A grounded GUI snapshot as produced by Browser Use.
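The grounding idea can be sketched in a few lines of browser JavaScript. This is not Browser Use's implementation; the selector, the styling, and the function name `groundInteractiveElements` are assumptions chosen for illustration, and the visibility check is deliberately crude.

```javascript
// Assumed notion of "interactive"; real agents use richer heuristics.
const INTERACTIVE_SELECTOR = "a[href], button, input, select, textarea";

// Overlays a numbered box on every visible interactive element and returns
// a Map from identifier to element. `doc` is expected to be a Document.
function groundInteractiveElements(doc) {
  const targets = new Map();
  let nextId = 1;
  for (const element of doc.querySelectorAll(INTERACTIVE_SELECTOR)) {
    const rect = element.getBoundingClientRect();
    if (rect.width === 0 || rect.height === 0) continue; // crude visibility check

    const box = doc.createElement("div");
    box.textContent = String(nextId);
    box.style.cssText =
      `position:fixed;left:${rect.left}px;top:${rect.top}px;` +
      `width:${rect.width}px;height:${rect.height}px;` +
      "outline:2px solid red;color:red;pointer-events:none;z-index:2147483647;";
    doc.body.appendChild(box);

    targets.set(nextId, element);
    nextId += 1;
  }
  return targets;
}

// In a browser, before taking the screenshot:
//   const targets = groundInteractiveElements(document);
// After the LLM answers with, say, identifier 2:
//   targets.get(2).click();
```

Because the model only ever names an identifier, the mapping back to the element happens entirely on the client, independent of how the image was rescaled.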

DOM Snapshots: Runtime HTML

Humans might predominantly understand the web through GUIs. LLMs, on the other hand, excel at working with text input: the vast majority of their training data consists of natural and formal language. Research, in fact, supports that LLMs are highly successful at describing and classifying HTML, and even at navigating the UI it encodes. An HTML snapshot representation is therefore a promising alternative to GUI snapshots. The document object model (DOM), a web browser's uniform runtime state model of a web application, is conveniently isomorphic to HTML. In its purest form, taking a DOM snapshot is as easy as reading document.documentElement.outerHTML. Elements in the DOM can be targeted by relative means, such as CSS selectors.

Roughly speaking, four characters (bytes) of text amount to one LLM input token. Token size is the main downside of DOM snapshots: a real-world DOM easily serialises to millions of bytes, and thus to hundreds of thousands of tokens. Using raw DOM snapshots is, economically speaking, not an option. State-of-the-art web agents therefore implement their own client-side pre-processing techniques to reduce the size of DOM snapshots.
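The rule of thumb translates into a one-line estimate. The helper name `estimateTextTokens` is hypothetical, and real tokenisers deviate from the four-characters figure, so treat the result as an order of magnitude only.

```javascript
const CHARS_PER_TOKEN = 4; // rule of thumb; real tokenisers vary

function estimateTextTokens(text) {
  return Math.ceil(text.length / CHARS_PER_TOKEN);
}

// Stand-in for a serialised real-world DOM of two million characters.
const rawSnapshot = "x".repeat(2_000_000);
console.log(estimateTextTokens(rawSnapshot)); // 500000

// In a browser: estimateTextTokens(document.documentElement.outerHTML)
```

Half a million tokens for a single snapshot dwarfs the four-figure cost of a screenshot, which is why raw DOM snapshots call for pre-processing.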

<section class="container" tabindex="3" required="true" type="example">
  <div class="mx-auto" data-topic="products" required="false">
    <h1>Our Pizza</h1>
    <div>
      <div class="shadow-lg">
        <h2>Margherita</h2>
        <p>
          A simple classic: mozzarella, tomatoes and basil.
          An everyday choice!
        </p>
        <button type="button">Add</button>
      </div>
      <div class="shadow-lg">
        <h2>Capricciosa</h2>
        <p>
          A rich taste: mozzarella, ham, mushrooms, artichokes, and olives.
          A true favourite!
        </p>
        <button type="button">Add</button>
      </div>
    </div>
  </div>
</section>

Extracted DOM Snapshots

The most popular approach to making DOM snapshots usable has been element extraction: only a subset of the elements in the DOM is selected and serialised. Selection can be category-based (e.g., only buttons), scoring-based (e.g., element type + element text length + element attribute count), or LLM-based (e.g., prompting for the most relevant elements). Size can, moreover, be limited by capping at the top k elements. Although extracted DOM snapshots are much smaller, they lose much of the original hierarchy, which may itself be a strong UI feature. An extracted DOM snapshot could look as follows:

<h1>Our Pizza</h1>
<h2>Margherita</h2>
<button type="button">Add</button>
<h2>Capricciosa</h2>
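The scoring-based variant with a top-k cap can be sketched as below. The tag weights, the cap on text length, and the flat element descriptors are all assumptions for illustration; the result is not meant to reproduce the extracted snapshot shown above.

```javascript
// Hypothetical per-tag weights for the "element type" part of the score.
const TAG_WEIGHTS = { button: 50, a: 40, input: 40, h1: 30, h2: 20, p: 5 };

// Score = element type + element text length (capped) + attribute count.
function scoreElement({ tag, text = "", attributes = {} }) {
  return (TAG_WEIGHTS[tag] ?? 0)
    + Math.min(text.length, 20)
    + Object.keys(attributes).length;
}

function extractTopK(elements, k) {
  return elements
    .map((element, index) => ({ element, index, score: scoreElement(element) }))
    .sort((a, b) => b.score - a.score || a.index - b.index) // best first
    .slice(0, k)                                            // cap at top k
    .sort((a, b) => a.index - b.index)                      // document order
    .map(({ element }) => element);
}

// The pizza page, flattened into element descriptors.
const elements = [
  { tag: "h1", text: "Our Pizza" },
  { tag: "h2", text: "Margherita" },
  { tag: "p", text: "A simple classic: mozzarella, tomatoes and basil." },
  { tag: "button", text: "Add", attributes: { type: "button" } },
  { tag: "h2", text: "Capricciosa" },
  { tag: "p", text: "A rich taste: mozzarella, ham, mushrooms, artichokes, and olives." },
  { tag: "button", text: "Add", attributes: { type: "button" } },
];

console.log(extractTopK(elements, 4).map((e) => e.tag));
// [ 'h1', 'button', 'h2', 'button' ]
```

With these weights the "Margherita" heading is cut, so the first Add button is no longer attached to any pizza, a small demonstration of how extraction can lose the hierarchy.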

Downsampled DOM Snapshots

Downsampling is a fundamental signal processing technique that consolidates local data points in order to reduce the overall data size. We recently transferred this technique to DOMs and proposed a first-of-its-kind algorithm for downsampling them. DOM downsampling consolidates DOM nodes under the assumption that the majority of the UI features inherent to the DOM (HTML) are retained. You can think of it as image compression, just for HTML. Here's an example of a downsampled DOM:

<section type="example" class="container">
  # Our Pizza
  <div class="shadow-lg">
    ## Margherita
    A simple classic: mozzarella, tomatoes, and basil.
    <button type="button">Add</button>
    ## Capricciosa
    A rich taste: mozzarella, ham, mushrooms, artichokes, and olives.
    <button type="button">Add</button>
  </div>
</section>
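To give a feel for what consolidating nodes can mean, here is a toy consolidation pass over a simplified DOM tree. It is explicitly not the algorithm referred to above: the tree shape, the container list, and the merge rules are assumptions picked to keep the sketch short, and its output differs in detail from the example.

```javascript
// Toy DOM: elements are { tag, attrs, children }, text nodes are plain strings.
// The container list and the merge rule are assumptions made for illustration.
const CONTAINER_TAGS = new Set(["div", "span", "section", "article", "main"]);

function downsample(node, depth = 0) {
  // Text nodes: collapse whitespace.
  if (typeof node === "string") return node.trim().replace(/\s+/g, " ");

  const parts = (node.children ?? [])
    .map((child) => downsample(child, depth + 1))
    .filter(Boolean); // drop nodes that ended up empty

  // Headings become Markdown headings of the same level.
  const heading = /^h([1-6])$/.exec(node.tag);
  if (heading) return `${"#".repeat(Number(heading[1]))} ${parts.join(" ")}`;

  // Paragraphs are reduced to their text.
  if (node.tag === "p") return parts.join(" ");

  // Nested containers are merged into their parent.
  if (CONTAINER_TAGS.has(node.tag) && depth > 0) return parts.join("\n");

  // Everything else (and the root) keeps its tag and attributes.
  const attrs = Object.entries(node.attrs ?? {})
    .map(([name, value]) => ` ${name}="${value}"`)
    .join("");
  const body = parts.join("\n");
  const gap = body.includes("\n") ? "\n" : "";
  return `<${node.tag}${attrs}>${gap}${body}${gap}</${node.tag}>`;
}

const page = {
  tag: "section",
  attrs: { class: "container" },
  children: [{
    tag: "div",
    children: [
      { tag: "h1", children: ["Our Pizza"] },
      { tag: "div", attrs: { class: "shadow-lg" }, children: [
        { tag: "h2", children: ["Margherita"] },
        { tag: "p", children: ["A simple classic:\n  mozzarella, tomatoes and basil."] },
        { tag: "button", attrs: { type: "button" }, children: ["Add"] },
      ] },
    ],
  }],
};

console.log(downsample(page));
```

The heading levels and the button survive, so the relationship between "Margherita" and its Add button remains readable, while the wrapper elements that carry little UI meaning are gone.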

Interested in how it works under the hood? Dive deep with DOM downsampling for LLM-based web agents.

DOM downsampling is available on the Webfuse Automation API.

GUI Snapshots vs DOM Snapshots

| | GUI Snapshots | DOM Snapshots (downsampled) |
|---|---|---|
| Data size | high | medium |
| Token size | low | high (medium to low) |
| Actuation | absolute | relative |
| In-memory | no | yes |
| Ready event | load | DOMContentLoaded |
