Voice agents are becoming a more common part of how users interact with websites. They provide a conversational interface that can guide users, answer questions, and perform tasks, moving interactions beyond traditional clicks and typed commands. The goal is an AI that can talk with a user and assist them directly on the page they are viewing.

However, transforming a conversational bot into a capable agent that can reliably act upon a live, dynamic web application introduces a series of technical challenges. When a voice agent is implemented by directly injecting its script into a website, its effectiveness is often limited by the very nature of the modern web. The agent’s ability to perform actions, maintain context, and operate securely is frequently compromised.

This article breaks down the specific technical problems that developers face with this direct injection method. We will examine the issues that prevent a standard voice agent from delivering a consistent and genuinely helpful user experience, including:

- The unreliability of actions based on direct DOM manipulation.

- The constant loss of conversational state during page navigations.

- Browser security policies that block cross-domain control and iframe access.

- The agent's difficulty in accurately perceiving complex page content.

- Gaps in deployment, security, and the ability to audit sessions.

By analyzing these challenges, we can establish the requirements for a more suitable architecture—one that allows a voice agent to operate effectively on any website.

Why Direct DOM Manipulation Creates Unreliable Agents

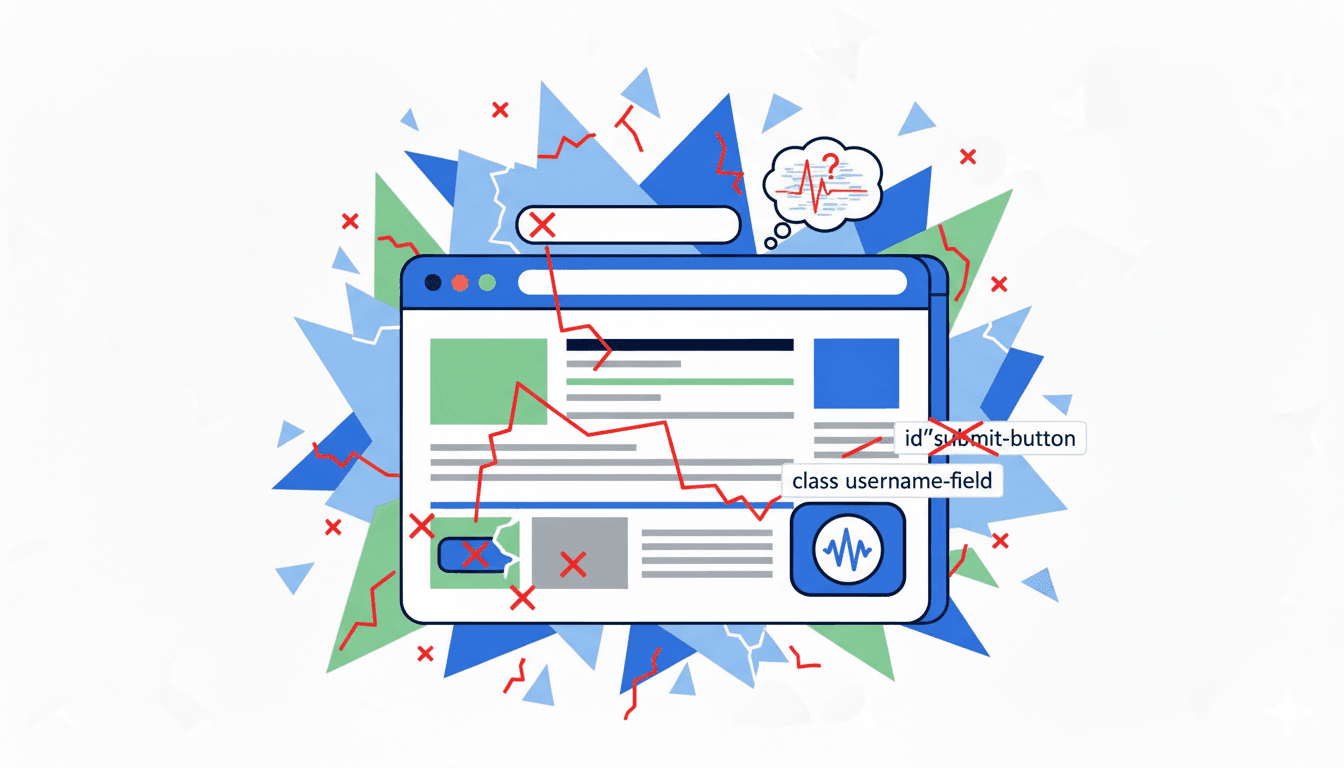

When a voice agent is injected directly into a website, its primary method for taking action is direct manipulation of the Document Object Model (DOM). The process is straightforward in theory: the agent's script uses JavaScript to find specific HTML elements on the page and then programmatically triggers events like clicks or keyboard inputs. To locate these elements, the agent relies on CSS selectors such as `#submit-button` or `.username-field`, which match elements by attributes like `id` or `class`.

This approach, however, is the source of major reliability issues. The agent's ability to act is tied directly to a static map of the website's structure, but modern websites are anything but static.

The Fragility of CSS Selectors

A website's user interface is in a constant state of flux. Developers continuously update layouts, redesign components, run A/B tests, or refactor code. These changes, no matter how small, often alter the underlying HTML structure.

- Structural Changes: A button might be moved inside a new `<div>`, or a form field's `id` might be updated for better clarity.

- Styling Updates: A class name used for styling, like `.btn-primary`, could be changed to `.btn-submit`, breaking any selector that relied on the old name.

- Framework-Generated IDs: Some web frameworks automatically generate dynamic, non-human-readable IDs and class names that can change with every new build of the application.

When these changes occur, the CSS selectors hardcoded into the agent's logic become invalid. The agent attempts to find an element that no longer exists at the expected location, causing the action to fail. This brittleness means the agent requires constant maintenance to keep pace with website updates, and any unmonitored change can disable its functionality without warning.
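To make the failure mode concrete, here is a minimal sketch of a selector lookup with a fallback chain. The `QueryRoot` type, the `findWithFallback` helper, and the fake page are all hypothetical names introduced for illustration; in a browser, `document` would play the role of the root.

```typescript
// Minimal structural type so the sketch runs outside a browser.
interface QueryRoot<T> {
  querySelector(selector: string): T | null;
}

interface Lookup<T> {
  element: T | null;
  matchedSelector: string | null;
}

// Try each candidate selector in order and report which one matched.
function findWithFallback<T>(root: QueryRoot<T>, selectors: string[]): Lookup<T> {
  for (const selector of selectors) {
    const element = root.querySelector(selector);
    if (element !== null) {
      return { element, matchedSelector: selector };
    }
  }
  return { element: null, matchedSelector: null };
}

// Hypothetical page after a redesign: the old id is gone and the
// styling class was renamed from .btn-primary to .btn-submit.
const fakePage: QueryRoot<string> = {
  querySelector: (s) => (s === ".btn-submit" ? "submit button" : null),
};

const hardcoded = findWithFallback(fakePage, ["#submit-button"]);
const withFallback = findWithFallback(fakePage, [
  "#submit-button",
  ".btn-primary",
  ".btn-submit",
]);

console.log(hardcoded.matchedSelector);    // null
console.log(withFallback.matchedSelector); // .btn-submit
```

A fallback chain only postpones the problem: it works here because someone already added the new class name to the list, which is exactly the ongoing maintenance described above.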

The Problem of Dynamic Content

Modern web applications rarely load all their content at once. To improve performance, content is often loaded asynchronously with JavaScript after the initial page has been rendered. This includes product listings on an e-commerce site, user data in a dashboard, or search results that appear after a query is submitted.

This creates a timing problem, or a "race condition," for a directly injected agent. The agent’s script might try to interact with an element before it has been created and added to the DOM. For example, it might try to click a "Buy Now" button that has not yet loaded. The result is another failure. While developers can attempt to build workarounds using timers or mutation observers to wait for elements to appear, this adds another layer of complexity and creates a solution that is just as likely to break when the application's loading behavior changes.
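The timer-based workaround mentioned above might look like the following sketch. It polls a probe function until the element appears or a timeout expires. `waitForElement`, the simulated button, and the timing values are assumptions made for this example, not part of any particular agent.

```typescript
// Poll `probe` until it returns a non-null value or the timeout elapses.
function waitForElement<T>(
  probe: () => T | null,
  timeoutMs: number,
  intervalMs: number = 50,
): Promise<T> {
  return new Promise<T>((resolve, reject) => {
    const startedAt = Date.now();
    const tick = (): void => {
      const found = probe();
      if (found !== null) {
        resolve(found);
      } else if (Date.now() - startedAt >= timeoutMs) {
        reject(new Error(`element did not appear within ${timeoutMs} ms`));
      } else {
        setTimeout(tick, intervalMs);
      }
    };
    tick();
  });
}

// Simulated page: the "Buy Now" button is added 120 ms after load.
let buyNowButton: string | null = null;
setTimeout(() => {
  buyNowButton = "Buy Now";
}, 120);

// In a browser the probe could be: () => document.querySelector("#buy-now")
const lateButOk = waitForElement<string>(() => buyNowButton, 1000);

// A probe that never succeeds shows the timeout path.
const tooImpatient = waitForElement<string>(() => null, 100).catch(
  (e: Error) => e.message,
);
```

The timeout and polling interval are guesses about how the application loads. If the application later takes longer, or renders the button under a different selector, the wait fails in the same way the original click did.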

The Invisibility of Encapsulated Components

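Web components, and the frameworks and design systems built on them, use the Shadow DOM to encapsulate a component's internal structure and keep it separate from the main page's DOM. This is a common practice for building complex UI elements like date pickers, custom form controls, or product configurators. For a standard script injected into the page, document-level selector queries such as `document.querySelector()` do not cross shadow boundaries, so elements inside a shadow root do not show up in ordinary lookups. Open shadow roots can still be reached by walking each host element's `shadowRoot` property, but closed roots expose nothing to outside scripts. Unless the agent adds this traversal logic, it cannot "see into" these components to find buttons or input fields, which puts major parts of the user interface out of reach.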
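The difference between a flat lookup and one that descends into shadow roots can be sketched as follows. `NodeLike` is a simplified stand-in for a DOM element, introduced only so the example runs without a browser. Real elements expose `shadowRoot` as `null` when no root is attached or when the root is closed, which the sketch mirrors.

```typescript
// Structural stand-in for the parts of an element used here.
interface NodeLike {
  id: string;
  children: NodeLike[];
  shadowRoot: { children: NodeLike[] } | null;
}

// Flat search: ignores shadow roots, like a document-level selector query.
function findFlat(root: NodeLike, id: string): NodeLike | null {
  if (root.id === id) return root;
  for (const child of root.children) {
    const hit = findFlat(child, id);
    if (hit !== null) return hit;
  }
  return null;
}

// Deep search: also descends into every open shadow root it encounters.
function findDeep(root: NodeLike, id: string): NodeLike | null {
  if (root.id === id) return root;
  const inner = root.shadowRoot !== null ? root.shadowRoot.children : [];
  for (const child of root.children.concat(inner)) {
    const hit = findDeep(child, id);
    if (hit !== null) return hit;
  }
  return null;
}

// Hypothetical page: a date picker keeps its buttons in an open shadow root.
const nextMonth: NodeLike = { id: "next-month", children: [], shadowRoot: null };
const datePicker: NodeLike = {
  id: "date-picker",
  children: [],
  shadowRoot: { children: [nextMonth] },
};
const page: NodeLike = { id: "page", children: [datePicker], shadowRoot: null };

console.log(findFlat(page, "next-month") === null); // true
console.log(findDeep(page, "next-month") === nextMonth); // true
```

The deep search only helps with open roots. A component that attaches a closed root reports `shadowRoot` as `null`, so its contents stay out of reach for both functions.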

The Challenge of Synthetic Events

Even when an agent successfully locates an element, the action itself can fail. Programmatically triggered events (like a script calling `element.click()`) are not identical to events generated by a real user: the browser marks them with `isTrusted` set to `false`, and a scripted click is not preceded by the pointer and mouse events that a physical click produces. Many web applications have logic that can detect this difference. This can lead to compatibility issues where the website's own scripts do not respond to the synthetic event, or it can trigger security measures designed to block automated bots. The agent's action is either ignored or actively blocked, creating another point of failure.
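One signal a site can use is the standard `isTrusted` property, which browsers set to `false` on events created by script. The handler below is a hypothetical example of site-side logic, and `TrustInfo` is a reduced stand-in for the DOM `Event` type so the sketch runs anywhere.

```typescript
// Only the field this sketch needs; DOM Event objects provide it.
interface TrustInfo {
  isTrusted: boolean;
}

let handledClicks = 0;
let ignoredClicks = 0;

// A handler of the kind a site might register to ignore scripted input.
function onBuyClick(ev: TrustInfo): void {
  if (!ev.isTrusted) {
    ignoredClicks += 1; // synthetic event: silently dropped
    return;
  }
  handledClicks += 1; // genuine user input: processed
}

onBuyClick({ isTrusted: true });  // shape of a real user click
onBuyClick({ isTrusted: false }); // shape of element.click() or dispatchEvent()

console.log(handledClicks, ignoredClicks); // 1 1
```

From the agent's side nothing looks wrong: the call to `element.click()` returns normally, and the only evidence of failure is that the page does not change.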

Maintaining Conversational State Across Page Navigations

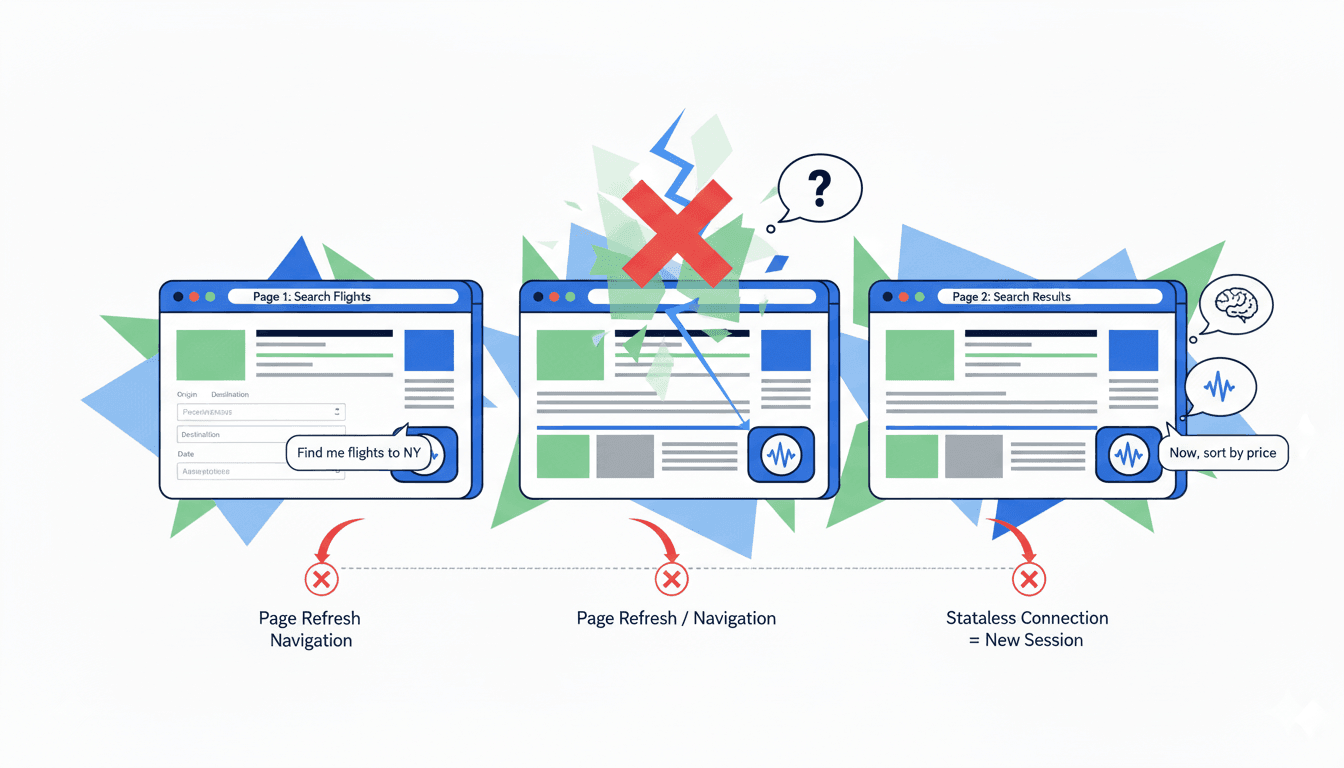

A major challenge for a voice agent implemented via direct script injection is its inability to maintain a continuous conversation as a user navigates a website. The standard behavior of a web browser is to treat each page load as a separate, isolated event. This model is fundamentally at odds with the requirements of a persistent, stateful AI assistant.

When a user follows a link to a new page or submits a form that causes a page refresh, the browser discards the current page in its entirety. This includes all the HTML, CSS, and any JavaScript that was running. The voice agent’s script, being part of that page, is terminated along with everything else.

The Impact of a Stateless Environment

This page-by-page lifecycle leads to a complete breakdown of the user experience for any task that spans more than a single page.

- Abrupt End to Conversations: The moment a user navigates away, the agent's memory is wiped. The ongoing conversation is cut off, and any context that had been established is lost.

- Forced Repetition: On the new page, the agent's script must reload from scratch. The user is forced to re-initiate the conversation and repeat their request. The agent has no recollection of the previous interaction.

- Inability to Handle Multi-Step Tasks: This limitation makes it impossible for the agent to assist with complex workflows. Consider a user booking a flight:

  - On the homepage, the user says, "Find me a flight to New York." The agent helps fill out the form.

  - The user is taken to a search results page.

  - The user then says, "Now, sort the results by price."

A directly injected agent on the results page would have no memory of the original destination request. It cannot connect the new command to the previous context.

Why Custom Workarounds Fall Short

To solve this, developers might attempt to build a custom system to preserve state across navigations. This typically requires creating a separate orchestration service that uses session cookies to identify the user and a backend to store the conversation history. The idea is to save the state before the page unloads and restore it when the new page loads.
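A minimal sketch of that save-and-restore idea follows. The `StateStore` interface stands in for the backend keyed by a session cookie value, and `InMemoryStore`, `persist`, and `restore` are hypothetical names. A real service would sit behind a network call rather than an in-process map.

```typescript
interface Turn {
  role: "user" | "agent";
  text: string;
}

interface ConversationState {
  sessionId: string;
  turns: Turn[];
}

// Stand-in for the backend store keyed by a session identifier.
interface StateStore {
  save(sessionId: string, payload: string): void;
  load(sessionId: string): string | null;
}

class InMemoryStore implements StateStore {
  private rows = new Map<string, string>();

  save(sessionId: string, payload: string): void {
    this.rows.set(sessionId, payload);
  }

  load(sessionId: string): string | null {
    const value = this.rows.get(sessionId);
    return value === undefined ? null : value;
  }
}

// Called before the page unloads.
function persist(store: StateStore, state: ConversationState): void {
  store.save(state.sessionId, JSON.stringify(state));
}

// Called by the fresh script instance on the next page.
function restore(store: StateStore, sessionId: string): ConversationState {
  const raw = store.load(sessionId);
  if (raw === null) return { sessionId, turns: [] };
  return JSON.parse(raw) as ConversationState;
}

const store = new InMemoryStore();
persist(store, {
  sessionId: "abc",
  turns: [{ role: "user", text: "Find me a flight to New York." }],
});
const resumed = restore(store, "abc");     // results page picks up the request
const unknown = restore(store, "missing"); // no saved state: empty conversation
```

The sketch assumes `persist` always finishes before the page goes away and that `restore` is instantaneous. Neither holds in a real browser.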

However, this approach is more of a patch than a solution and introduces its own problems:

- Engineering Overhead: Building and maintaining a reliable state-tracking service is a complex project in itself, adding another moving part that can fail.

- Unreliable State Saving: Capturing the full state of the agent in the moments before a page is destroyed is difficult. Browser events like `beforeunload` do not provide a guaranteed window for these operations to complete successfully.

- Noticeable Disruption: The process of loading a new page, running the agent script, fetching the saved state from a service, and re-rendering the conversation UI is not instantaneous. This results in a disjointed user experience with visible delays and content "flashing" as the state is pieced back together.

The core issue remains: the agent's existence is tied to the lifecycle of an individual page. A truly interactive assistant requires a persistent operational layer that exists independently of page loads. Direct script injection cannot provide this, making it unsuitable for building agents that can guide users through a complete journey on a website.

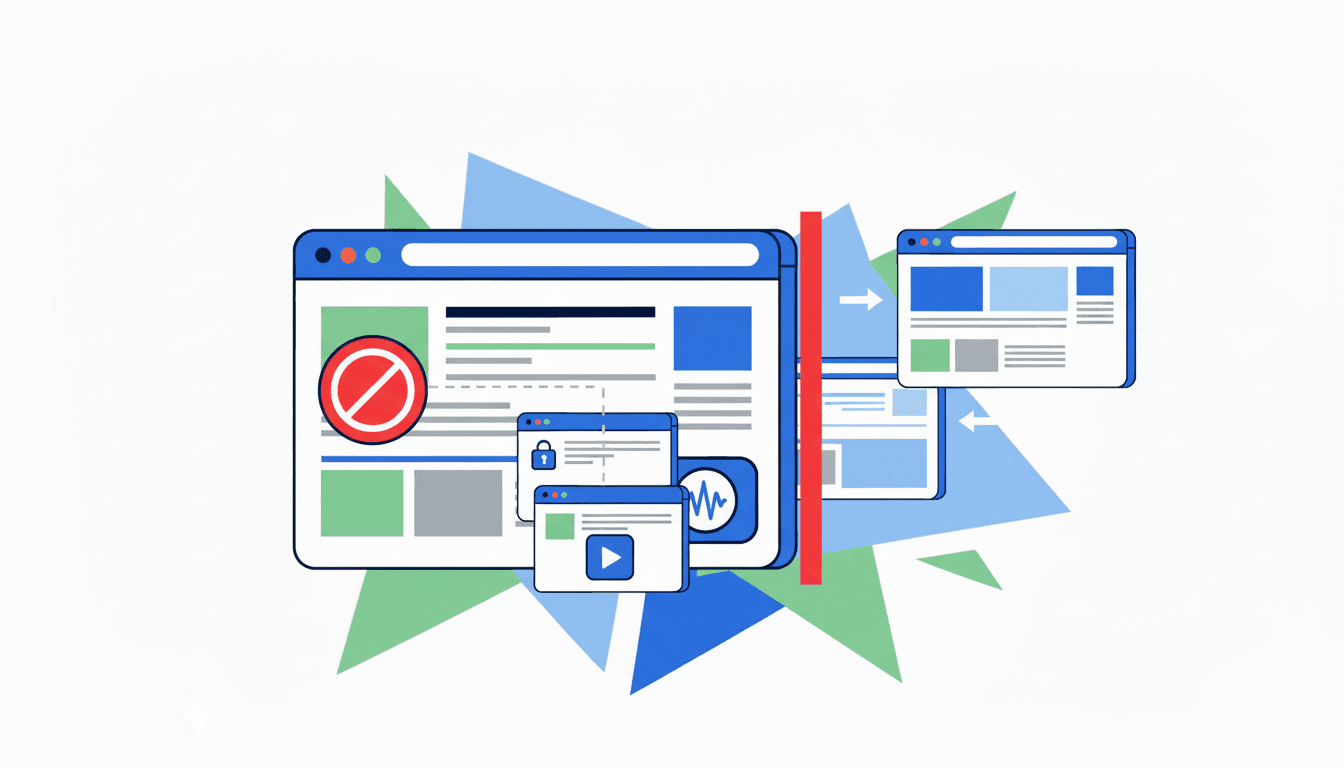

Navigating Cross-Domain Restrictions and Iframes

A core security principle of the web, the Same-Origin Policy, prevents a script from one website from accessing or manipulating content on another. This policy is a major safeguard, stopping malicious sites from stealing data from other tabs you may have open. However, for a voice agent implemented via direct script injection, this security measure creates an impassable barrier, severely limiting its operational range.

The agent's script is bound to the domain where it was initially loaded. This confinement results in two major operational failures when a user's task involves more than one domain.
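Two URLs share an origin only when their scheme, host, and port all match. The sketch below uses the standard `URL` class to compare origins. The `sameOrigin` helper and the `.example` hostnames are made up for illustration.

```typescript
// Two URLs share an origin only if scheme, host, and port all match.
function sameOrigin(a: string, b: string): boolean {
  return new URL(a).origin === new URL(b).origin;
}

const merchant = "https://shop.example/cart";

console.log(sameOrigin(merchant, "https://shop.example/checkout"));  // true
console.log(sameOrigin(merchant, "https://pay.example/authorize"));  // false: different host
console.log(sameOrigin(merchant, "https://www.shop.example/cart"));  // false: subdomain differs
console.log(sameOrigin(merchant, "http://shop.example/cart"));       // false: different scheme
console.log(sameOrigin(merchant, "https://shop.example:8443/cart")); // false: different port
```

Every `false` case above marks a boundary that a script loaded on the merchant page cannot reach across.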

The Wall Between Websites

Modern web workflows are rarely self-contained. A user journey frequently involves moving between different, yet related, websites. Consider these common scenarios:

- An e-commerce transaction that redirects the user from the merchant's site to a third-party payment processor like PayPal.

- A travel booking process that sends the user to a partner airline's website to select seats.

- A single sign-on (SSO) flow that redirects to an identity provider like Okta or Google for authentication.

In each of these cases, a directly injected voice agent's journey comes to an abrupt halt. The moment the user is redirected to the new domain, the agent, whose code lives on the original site, cannot follow. It is left behind. The user loses their assistant at what is often the most complex part of the process, completely breaking the continuity of the guided experience.

Black Holes on the Page: The Iframe Problem

The same security restrictions that block access to third-party sites also apply to content embedded within <iframe> elements. Iframes are a common way to display content from another domain directly on a page without forcing a full redirect. This is used for many essential web components:

- Payment Forms: Secure credit card input fields from services like Stripe or Braintree.

- Customer Support Portals: Embedded chat widgets or help centers from platforms like Intercom or Zendesk.

- Media Players: Videos from YouTube or Vimeo.

- Embedded Widgets: Cookie consent banners, A/B testing tools, or analytics solutions often loaded through Google Tag Manager.

From the perspective of the voice agent running on the main page, the content inside a cross-domain iframe is an opaque box. The Same-Origin Policy denies the agent's script any ability to "see" inside the iframe. It cannot read the text, identify the buttons, or access the form fields contained within it. This creates a functional blind spot on the page. The agent is powerless to help a user fill out their payment details, interact with a support agent, or even accept a cookie policy to clear the screen.
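In the browser, this blind spot shows up as the `contentDocument` property of a cross-origin iframe being `null`. The sketch below models that behavior with reduced stand-in types. `FrameLike`, `DocLike`, and the example frames are assumptions introduced so the code runs without a page.

```typescript
// Reduced stand-ins for Document and HTMLIFrameElement.
interface DocLike {
  body: { textContent: string | null };
}

interface FrameLike {
  src: string;
  contentDocument: DocLike | null; // null when the frame is cross-origin
}

// Returns the frame's text, or null when the browser denies access.
function readFrameText(frame: FrameLike): string | null {
  const doc = frame.contentDocument;
  if (doc === null) return null;
  return doc.body.textContent || "";
}

function describeFrames(frames: FrameLike[]): string[] {
  return frames.map((frame) => {
    const text = readFrameText(frame);
    return text === null
      ? frame.src + " -> opaque"
      : frame.src + " -> " + text;
  });
}

// Hypothetical page with one same-origin and one cross-origin frame.
const frames: FrameLike[] = [
  {
    src: "https://shop.example/help",
    contentDocument: { body: { textContent: "Shipping FAQ" } },
  },
  { src: "https://pay.example/card-form", contentDocument: null },
];

const report = describeFrames(frames);
console.log(report[0]); // https://shop.example/help -> Shipping FAQ
console.log(report[1]); // https://pay.example/card-form -> opaque
```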

These cross-domain limitations mean that a directly injected agent is effectively trapped within a single, isolated garden. It cannot navigate the interconnected ecosystem of the modern web, rendering it incapable of assisting with the integrated, multi-service workflows that users engage in every day.

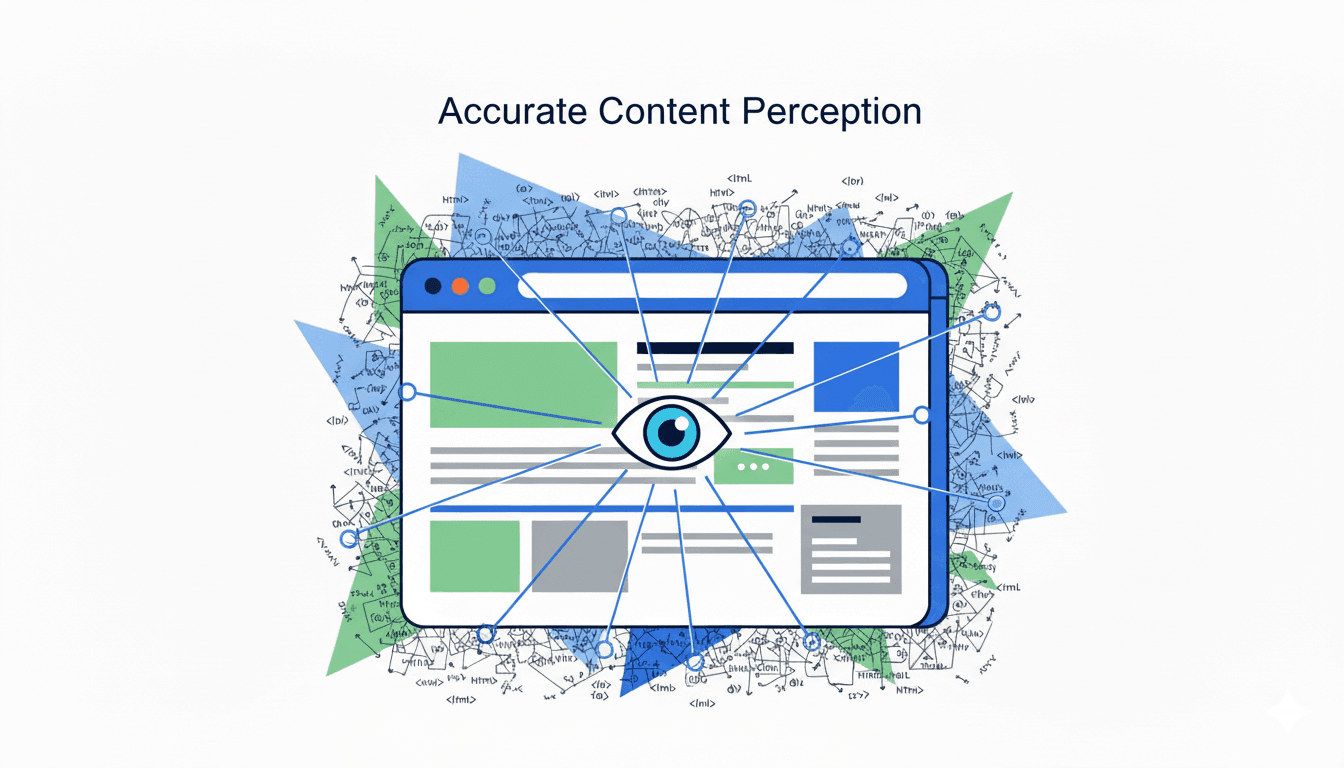

Enabling Agents to Accurately Perceive Web Content

For a voice agent to provide relevant assistance, it must first understand the page the user is viewing. A human user perceives a website visually—they see headlines, buttons, and images arranged in a clear layout. An AI agent, however, perceives the site by processing its underlying code, the Document Object Model (DOM).

When an agent is implemented with a standard script, it has direct access to this DOM. However, the raw DOM of a modern web application is a poor source of information. It is often a complex and noisy environment that makes it very difficult for an AI to extract meaningful context.

The Challenge of DOM Complexity and Noise

The HTML structure of a contemporary website is rarely a clean representation of its content. It is typically cluttered with code that serves purposes other than displaying information, creating major problems for an AI trying to make sense of it.

- Structural Overhead: Websites are built with deeply nested `<div>` elements used for styling and layout, creating a complex tree structure that obscures the actual content.

- Framework-Generated Code: Front-end frameworks like React or Angular often generate non-semantic class names and complex component structures that are difficult for an AI to interpret.

- Third-Party Scripts and Hidden Elements: The DOM is also filled with tracking pixels, ad-related scripts, and elements that are hidden from the user but still present in the code.

This complexity presents two issues for an AI agent:

- Information Overload: The sheer volume of raw HTML can easily exceed the context window of the large language model powering the agent. Even when the markup fits, feeding the model thousands of lines of code makes each request slow and expensive.

- Signal vs. Noise: The agent struggles to distinguish between important content (like a product description or a form field) and irrelevant structural code or hidden tracking elements.
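To illustrate the kind of cleanup this requires, here is a small sketch that prunes a simplified DOM-like tree. It drops scripts and hidden nodes, removes empty containers, and unwraps layout-only `<div>` wrappers. The `DomNode` shape, the tag lists, and the pruning rules are assumptions chosen for this example rather than an established algorithm.

```typescript
interface DomNode {
  tag: string;
  text: string;
  hidden: boolean;
  children: DomNode[];
}

const NOISE_TAGS = ["script", "style", "noscript", "template"];
// Elements worth keeping even when they carry no text of their own.
const KEEP_TAGS = ["input", "button", "select", "textarea", "a", "img"];

function el(tag: string, children: DomNode[], text = "", hidden = false): DomNode {
  return { tag, text, hidden, children };
}

// Return a simplified copy of the tree, or null if nothing useful remains.
function prune(node: DomNode): DomNode | null {
  if (node.hidden || NOISE_TAGS.indexOf(node.tag) !== -1) return null;
  const kept: DomNode[] = [];
  for (const child of node.children) {
    const pruned = prune(child);
    if (pruned !== null) kept.push(pruned);
  }
  const hasText = node.text.trim() !== "";
  const mustKeep = KEEP_TAGS.indexOf(node.tag) !== -1;
  if (!hasText && !mustKeep && kept.length === 0) return null; // empty subtree
  if (!hasText && node.tag === "div" && kept.length === 1) return kept[0]; // unwrap
  return { tag: node.tag, text: node.text, hidden: false, children: kept };
}

function countNodes(node: DomNode | null): number {
  if (node === null) return 0;
  let total = 1;
  for (const child of node.children) total += countNodes(child);
  return total;
}

const rawPage = el("div", [
  el("div", [
    el("div", [
      el("h1", [], "Red Sneakers"),
      el("script", [], "track()"),
      el("img", [], "", true), // hidden tracking pixel
      el("div", []),           // empty spacer
      el("button", [], "Buy Now"),
    ]),
  ]),
]);

const cleaned = prune(rawPage);
console.log(countNodes(rawPage), countNodes(cleaned)); // 8 3
```

Even this toy version has to make judgment calls, such as which empty elements still matter, and a production pipeline faces many more of them.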

Blindness to Technical and Visual Context

The raw DOM also fails to capture the visual context and interactive state of a webpage, which are essential for providing accurate assistance. This is made worse by the technical barriers discussed earlier.

- No Visual Hierarchy: The DOM is a structural tree, not a visual one. It does not tell the agent what is visually prominent on the page. A large, important headline and a small piece of text in the footer might appear similar in the HTML structure.

- Lack of State Awareness: The raw markup does not directly expose the page's interactive state. Without extra checks against computed styles and layout, the agent cannot tell if a modal pop-up is currently covering the screen, if a dropdown menu is open, or if a specific tab in a component is active. This can cause it to attempt actions on elements that are not currently visible or interactive.

- Invisibility of Encapsulated and Embedded Content: The agent’s perception is further compromised because it cannot read content inside cross-domain iframes or UI components built with Shadow DOM. This means large, interactive parts of the page are not just hard to interpret; they are entirely missing from the agent's understanding of the page.

- Invisibility of Canvas Content: Some web content, like interactive charts or product configurators, is rendered inside a `<canvas>` element. The DOM provides no information about what is drawn inside a canvas, making this content completely invisible to the agent.
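Visibility and interactive state have to be derived from computed style and geometry rather than read from the markup. The sketch below shows one possible check. `Candidate` and `isActionable` are hypothetical; in a browser, the fields could be filled from `getComputedStyle()`, `getBoundingClientRect()`, and a hit test with `document.elementFromPoint()`.

```typescript
interface Box {
  width: number;
  height: number;
}

interface StyleInfo {
  display: string;
  visibility: string;
  opacity: string; // computed styles report opacity as a string
}

interface Candidate {
  label: string;
  box: Box;
  style: StyleInfo;
  coveredByOverlay: boolean; // e.g. a modal sits on top of the element
}

// Decide whether an element is something a user could currently click.
function isActionable(c: Candidate): boolean {
  if (c.style.display === "none" || c.style.visibility === "hidden") return false;
  if (parseFloat(c.style.opacity) === 0) return false;
  if (c.box.width === 0 || c.box.height === 0) return false;
  return !c.coveredByOverlay;
}

const shown: StyleInfo = { display: "block", visibility: "visible", opacity: "1" };

const candidates: Candidate[] = [
  { label: "Checkout", box: { width: 120, height: 40 }, style: shown, coveredByOverlay: false },
  { label: "Menu item", box: { width: 0, height: 0 }, style: shown, coveredByOverlay: false },
  {
    label: "Old banner",
    box: { width: 300, height: 50 },
    style: { display: "none", visibility: "visible", opacity: "1" },
    coveredByOverlay: false,
  },
  { label: "Behind modal", box: { width: 120, height: 40 }, style: shown, coveredByOverlay: true },
];

const actionable = candidates.filter(isActionable).map((c) => c.label);
console.log(actionable); // [ 'Checkout' ]
```

All four candidates exist in the DOM, but only one is usable at this moment. None of these checks helps with canvas content, which has no elements to inspect at all.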

Without a specialized mechanism to process, clean, and structure this information, a directly injected agent operates with poor eyesight. It is given a massive, noisy blueprint of the page and is left to guess what is important, what is visible, and what is actionable. This limitation severely restricts its ability to make reliable decisions and provide genuinely helpful guidance.

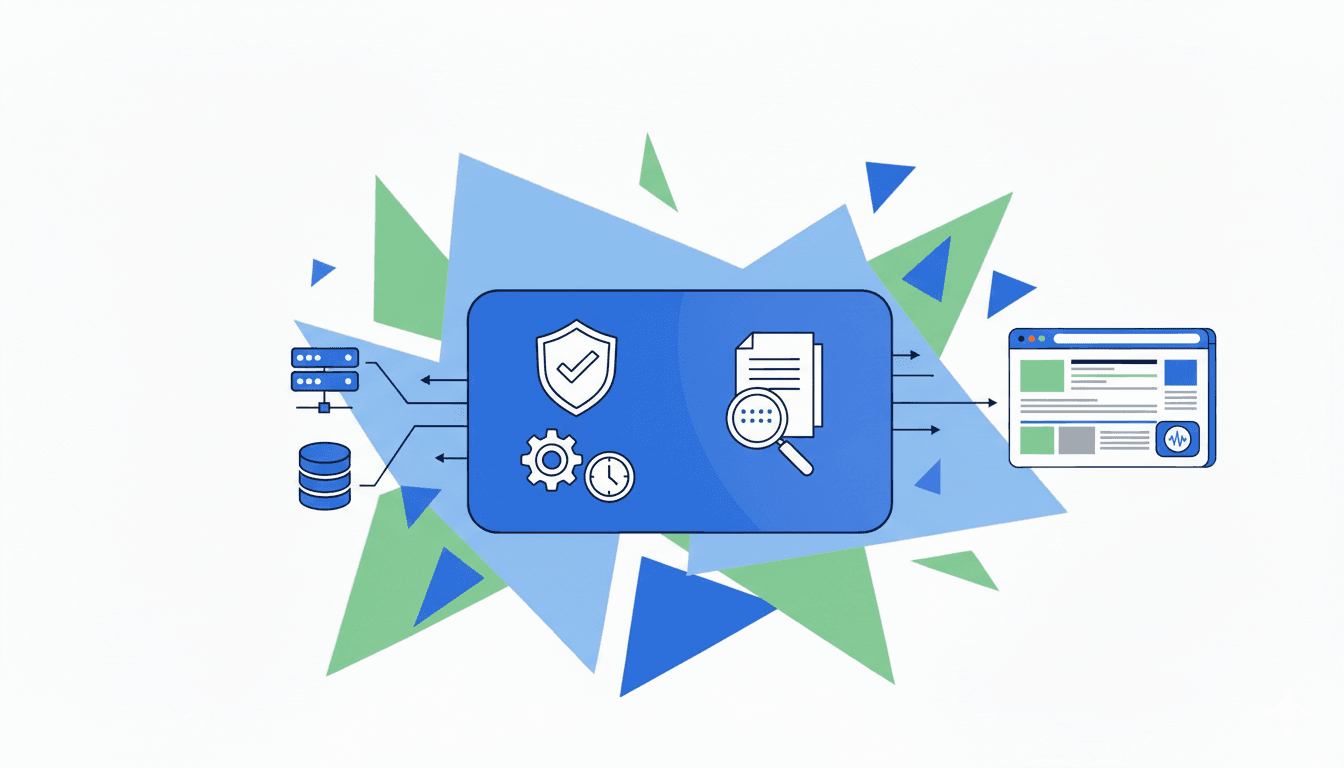

Addressing Deployment, Security, and Auditing Gaps

The technical challenges of a directly injected voice agent extend beyond its in-session performance. The entire lifecycle of deploying, managing, and securing the agent presents a separate set of operational problems. When the agent is just another script within a website's codebase, it inherits all the complexities of standard software development and lacks the specialized features needed for a secure, enterprise-ready solution.

Deployment and Maintenance Complexity

A direct implementation model tightly couples the voice agent to the host website's source code. This creates a rigid and inefficient management process.

- Requires Code Changes: To install, update, or reconfigure the agent, a developer must directly edit the website's files. This is not a simple task; it involves developer resources, code reviews, and adherence to the website’s release schedule.

- Slow Update Cycles: This dependency on development cycles means that a simple change—like updating the agent's system prompt or adding a new tool—can take days or weeks to go live. There is no agility to quickly test new configurations or respond to an issue.

- Organizational Separation: Often, the team developing the agent is separate from the team managing the website and may not have direct access to the application's source code. This forces a rigid development process where agent updates are dependent on another team’s priorities and release schedule.

- Lack of Support for Unmodifiable Applications: This model fails entirely with applications that cannot be changed, such as legacy systems no longer under active development or third-party vendor platforms (e.g., payment processors, e-signature providers). It is not possible to inject a script into an application you do not control.

Data Security and Compliance Risks

When a script runs on a webpage, it has access to any information displayed on that page. This creates a major security concern for a voice agent, which might be active on pages containing sensitive data.

- Uncontrolled Data Exposure: The agent can access personally identifiable information (PII), financial details, or protected health information (PHI) that is visible on the screen.

- No Built-in Masking: Without a separate architectural layer to control data flow, there is no easy way to redact or mask this sensitive information before it is processed by the agent’s AI model or captured in a session recording. This creates a sizeable risk for compliance with regulations like GDPR, HIPAA, and PCI DSS. The organization becomes responsible for any sensitive data the AI is exposed to.
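As a rough illustration of what a masking step does, the sketch below redacts email addresses and card-like digit runs from text before it would be handed to a model. The patterns and the `maskSensitive` helper are simplified assumptions. Pattern matching alone would not be sufficient for real compliance work, and a dedicated layer could mask by page element instead of by regular expression.

```typescript
// Simplified email pattern, for illustration only.
const EMAIL = /[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}/g;
// 13 to 19 digits, optionally separated by single spaces or hyphens.
const CARD = /\b\d(?:[ -]?\d){12,18}\b/g;

// Replace sensitive values with placeholders before further processing.
function maskSensitive(text: string): string {
  return text.replace(EMAIL, "[EMAIL]").replace(CARD, "[CARD]");
}

const visibleText =
  "Contact jane.doe@example.com, card 4111 1111 1111 1111, order 12345";
const masked = maskSensitive(visibleText);

console.log(masked);
// Contact [EMAIL], card [CARD], order 12345
```

With direct injection, a step like this has to live inside the agent's own script, so it runs in the same context that already has access to the unmasked page.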

The Absence of Collaborative and Auditing Features

A standard voice agent script offers an experience for a single user and lacks the necessary framework for oversight or human assistance.

- No Human Escalation Path: The system has no native capabilities for co-browsing or shared control, which would allow a human agent to join the session, see the user's screen, and take over to provide direct assistance. When the AI reaches its limits, the only option is to end the session and direct the user to a separate support channel, creating a frustrating experience.

- No Automatic Audit Trails: A direct implementation provides no built-in mechanism for generating a detailed log of the agent's actions and the user's interactions. This makes it very difficult to review a session to debug an issue, understand what happened, or verify compliance.

- No Session Recording: There is no easy way to create a pixel-perfect video recording of the session. Recordings are important for quality assurance, training human agents, and providing a definitive record of the interaction.
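An audit trail is, at its simplest, an append-only log of who did what and when. The sketch below shows one possible shape. `AuditLog`, its field names, and the JSON Lines export are assumptions made for illustration, not a description of any existing product.

```typescript
interface AuditEntry {
  seq: number;
  at: string; // ISO 8601 timestamp
  actor: "agent" | "user";
  action: string;
  target: string;
}

// Append-only log: entries can be added and exported, never edited.
class AuditLog {
  private entries: AuditEntry[] = [];

  record(
    actor: "agent" | "user",
    action: string,
    target: string,
    now: Date = new Date(),
  ): AuditEntry {
    const entry: AuditEntry = {
      seq: this.entries.length + 1,
      at: now.toISOString(),
      actor,
      action,
      target,
    };
    this.entries.push(entry);
    return entry;
  }

  // One JSON object per line, convenient for later review or search.
  toJsonLines(): string {
    return this.entries.map((e) => JSON.stringify(e)).join("\n");
  }
}

const auditLog = new AuditLog();
const fixedTime = new Date("2024-01-01T00:00:00.000Z");
auditLog.record("user", "say", "Find me a flight to New York.", fixedTime);
auditLog.record("agent", "fill", "destination field", fixedTime);

const exportedLines = auditLog.toJsonLines().split("\n");
console.log(exportedLines.length); // 2
```

A log kept inside the page script shares the page's lifetime, so it is lost on navigation unless every entry is shipped to a server as it is written.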

Without these features, managers and compliance officers have no visibility into how the agent is performing or what is occurring in user sessions, making it difficult to ensure quality or investigate a security incident.

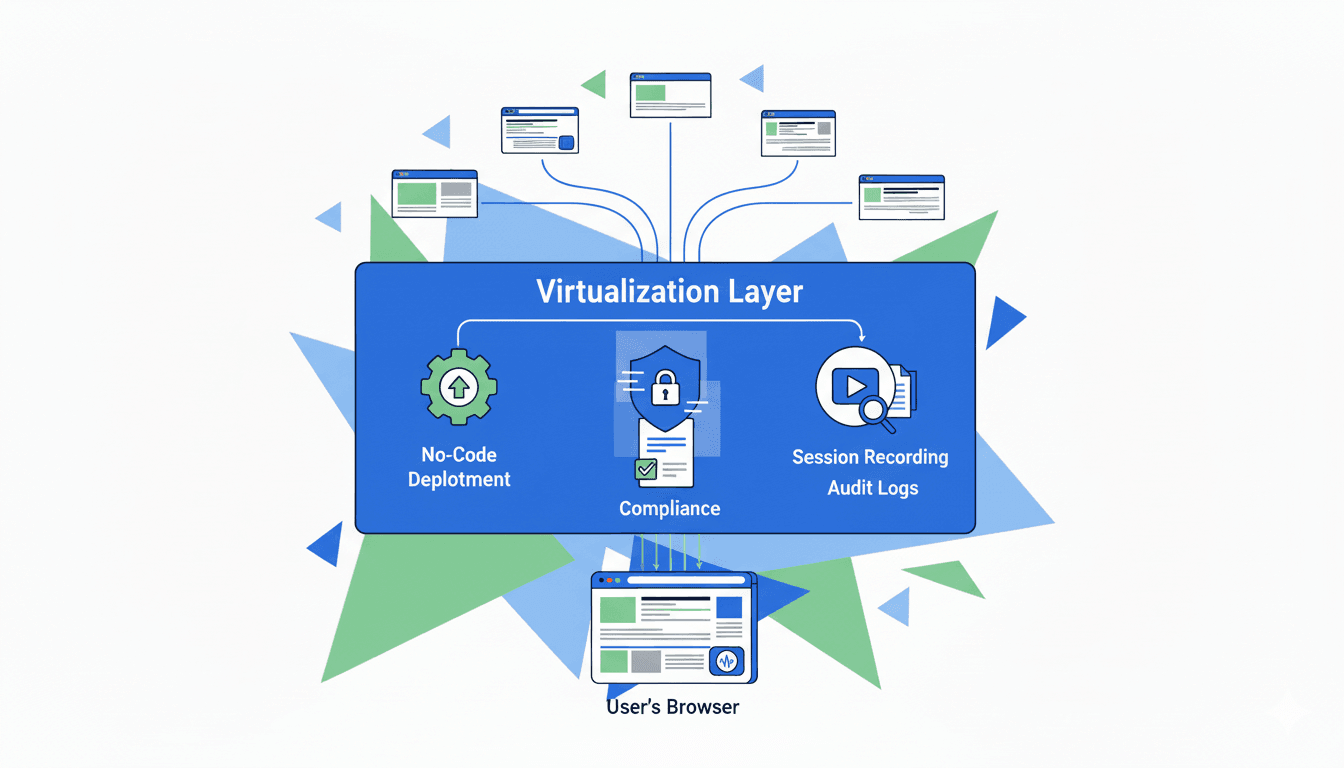

Shifting from Direct Injection to a Virtualization Layer

The challenges outlined—unreliable actions, lost conversational state, cross-domain restrictions, poor perception, and operational gaps—all stem from a single architectural choice: embedding the voice agent directly into the target website. This approach forces the agent to operate within the constraints of a standard browser environment, which was never designed for this kind of persistent, interactive application. The limitations are not just individual problems to be patched; they are symptoms of a foundational mismatch between the goal and the method.

To build a reliable and capable voice agent, a different architectural model is required. The solution is not to create a more complex script but to change the very environment in which the agent operates. This is accomplished by moving from direct injection to a virtualization layer.

The Concept of a Web Virtualization Layer

A virtualization layer is an intermediary system that sits between the user's browser and the target web application. Instead of the user accessing the website directly, they connect to a platform that, in turn, manages the interaction with the site on their behalf. This platform creates a secure, controlled sandbox around the original website, effectively wrapping it in an interactive overlay.

This is achieved through a proxy-based system. When a user initiates a session, the platform's proxy fetches the website's content (HTML, CSS, JavaScript) and modifies it in real time before sending it to the user's browser. This on-the-fly transformation allows the platform to:

- Establish a persistent session environment that exists independently of any single page load.

- Rewrite URLs and modify security headers to bring multiple websites and iframes into a single, unified context.

- Inject a specialized set of high-level tools and APIs that give the agent reliable methods for perception and action.
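The URL rewriting step can be pictured with a small sketch. The proxy origin and the path scheme below are invented for illustration and do not describe how any specific platform encodes its URLs. The point is only that, once rewritten, pages from different sites share one origin from the browser's perspective.

```typescript
// Hypothetical proxy origin and path scheme, invented for this example.
const PROXY_ORIGIN = "https://proxy.example";

// Encode the target's scheme and host into the path of a proxy URL.
function toProxied(targetUrl: string): string {
  const u = new URL(targetUrl);
  const scheme = u.protocol.replace(":", "");
  return PROXY_ORIGIN + "/" + scheme + "/" + u.host + u.pathname + u.search + u.hash;
}

// Recover the original URL so the proxy knows what to fetch upstream.
function fromProxied(proxiedUrl: string): string {
  const u = new URL(proxiedUrl);
  const parts = u.pathname.split("/"); // ["", scheme, host, ...rest]
  const scheme = parts[1];
  const host = parts[2];
  const rest = parts.slice(3).join("/");
  return scheme + "://" + host + "/" + rest + u.search + u.hash;
}

const shopUrl = toProxied("https://shop.example/cart?item=42");
const payUrl = toProxied("https://pay.example/checkout");

console.log(shopUrl); // https://proxy.example/https/shop.example/cart?item=42
console.log(payUrl);  // https://proxy.example/https/pay.example/checkout
console.log(new URL(shopUrl).origin === new URL(payUrl).origin); // true
console.log(fromProxied(shopUrl)); // https://shop.example/cart?item=42
```

A working proxy also has to rewrite links, form actions, script requests, cookies, and security headers inside the fetched content, which this sketch leaves out.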

How Virtualization Addresses the Core Problems

By changing the operational environment, a virtualization layer provides direct solutions to the problems inherent in the direct injection model.

- Reliability: The agent no longer relies on fragile CSS selectors. It uses a stable, high-level API to interact with the page, abstracting away the complexities of the underlying DOM, including the Shadow DOM.

- State Persistence: The agent runs within the persistent virtualization layer, not on the individual page. As the user navigates, the agent and its conversational context remain active and unbroken.

- Cross-Domain Control: The proxy brings all navigated websites, including third-party services and iframes, into the same virtualized session. The Same-Origin Policy is no longer a barrier because, from the browser's perspective, all content is being served from the platform's single, trusted domain.

- Accurate Perception: The platform provides tools that process the raw DOM into a clean, structured format optimized for an AI, solving the problem of information overload and lack of visual context.

- Centralized Management: Deployment, security, and auditing are handled by the platform. Updates can be rolled out instantly without code changes, data can be masked before it reaches the agent, and detailed session logs can be generated automatically.

This architectural shift moves the agent from being a temporary guest on a webpage to a permanent resident of a controlled, purpose-built environment. It is this virtualization approach that provides the foundation for building a truly effective and enterprise-ready voice AI.

Solving Agent Reliability with a Web Augmentation Platform

The limitations of a directly injected voice agent are not solvable by simply improving the agent's script. The core issue lies in asking the agent to perform a complex, stateful job within a stateless and restrictive environment. The solution, therefore, is not to force the agent to adapt to the web's constraints, but to provide a new operational layer that removes those constraints entirely. This is the function of a web augmentation platform.

A web augmentation platform, like Webfuse, operates on the principle of virtualization. Instead of placing the agent's code directly onto the target website, the platform wraps the entire user experience in a Virtual Web Session. This session is a controlled, persistent environment managed by the platform's proxy, which gives the agent the stable foundation it needs to operate reliably.

The following table summarizes the architectural differences and their impact on performance:

| Challenge | Direct Injection Method | Webfuse |
|---|---|---|
| Action Reliability | Relies on fragile CSS selectors that break with website updates. Cannot interact with Shadow DOM or generate user-like events. | Uses a stable Automation API. Actions are resilient to UI changes and can reliably interact with all page elements, including the Shadow DOM. |
| Session Continuity | Agent script is terminated on each page load, losing all context. Requires complex, unreliable workarounds. | The agent operates in a persistent virtualization layer, independent of page loads. The conversation remains continuous throughout the user's entire journey. |
| Cross-Domain Control | Blocked by the browser's Same-Origin Policy. Cannot access or control content in third-party sites or embedded iframes. | Unifies all domains and iframes into a single session. The agent can operate across any website the user visits. |
| Content Perception | Processes a raw, noisy DOM, leading to information overload and inaccurate context. Blind to iframe and Shadow DOM content. | Provides specialized tools that transform the DOM into a clean, structured format for the AI, ensuring accurate perception. |
| Deployment & Security | Requires code changes for updates, tied to website release cycles. Lacks built-in data masking or comprehensive auditing. | Centralized, no-code deployment. Includes built-in security features like data masking and provides automatic session recording and audit logs. |

By shifting from direct injection to a virtualization platform, the voice agent is transformed from an unreliable script into an integrated, enterprise-ready assistant. This architecture provides the stability and security that allows the agent to perceive, act, and persist across the entire web, finally enabling the creation of a genuinely helpful and reliable automated experience.

Take the Next Step

The challenges of building reliable voice agents are significant, but they are solvable with the right architecture. A web augmentation platform provides the persistent, secure, and capable environment needed to move beyond fragile scripts and create genuinely helpful user experiences.

If you are ready to build AI agents that can navigate the entire web, handle complex tasks, and operate securely, our team can help you design the right solution.

- Talk to an Expert: Contact us to discuss your specific use case and learn how a virtualization layer can address your agent reliability challenges.

- Explore Use Cases: Learn more about how Webfuse is used to create automated journeys across different websites.
