Over the previous articles, we have explored the architecture and capabilities required to build a client-side, session-aware Voice AI agent. In Part 1, we examined why client-side tool calling is the best approach. Part 2 detailed the Virtual Web Session and Session Extension architecture, and Part 3 covered the specific perception and action tools that give an agent its digital "eyes and hands."

Now, it is time to put that theory into practice. This guide will provide a hands-on, step-by-step walkthrough of the entire process, from configuring a new voice agent in ElevenLabs to deploying it as a Webfuse Extension that can take control of a live website. By the end of this tutorial, you will have a functional prototype that can understand spoken commands, perceive the content of a webpage, and execute actions like clicking buttons and filling in forms.

What You Will Need (Prerequisites):

Before we begin, please ensure you have the following:

- An ElevenLabs Account: You will need access to the ElevenLabs platform to create and configure the voice agent.

- A Webfuse Account: A Webfuse account is required to create a SPACE and deploy the Session Extension.

- A Code Editor: Any text editor will work, but a code editor like Visual Studio Code is recommended.

- Basic Knowledge of HTML and JavaScript: You do not need to be an expert, but a fundamental understanding will be helpful as we assemble the extension files. All necessary code will be provided.

The Process: A High-Level Overview

We will build our agent in three main stages:

- Configuring the Agent in ElevenLabs: First, we will create a new voice agent, define its personality and goals with a system prompt, and configure the specific client-side tools it will use to interact with the webpage.

- Building the Webfuse Session Extension: Next, we will create the necessary files (manifest.json, popup.html, and JavaScript files) that will house the ElevenLabs agent and contain the logic to connect its tools to the Webfuse Automation API.

- Deploying and Testing: Finally, we will create a Webfuse SPACE, upload our completed extension, and test our agent's ability to control a live website.

Step 1: Configuring the Agent in the ElevenLabs Voice Lab

Our first task is to create the "brain" of our operation. Before the agent can control a website, we must define its identity, its purpose, and the specific set of tools it has permission to use. This is all done within the ElevenLabs platform. This configuration informs the language model about its capabilities, ensuring it knows when and how to call upon the perception and action tools we will build later.

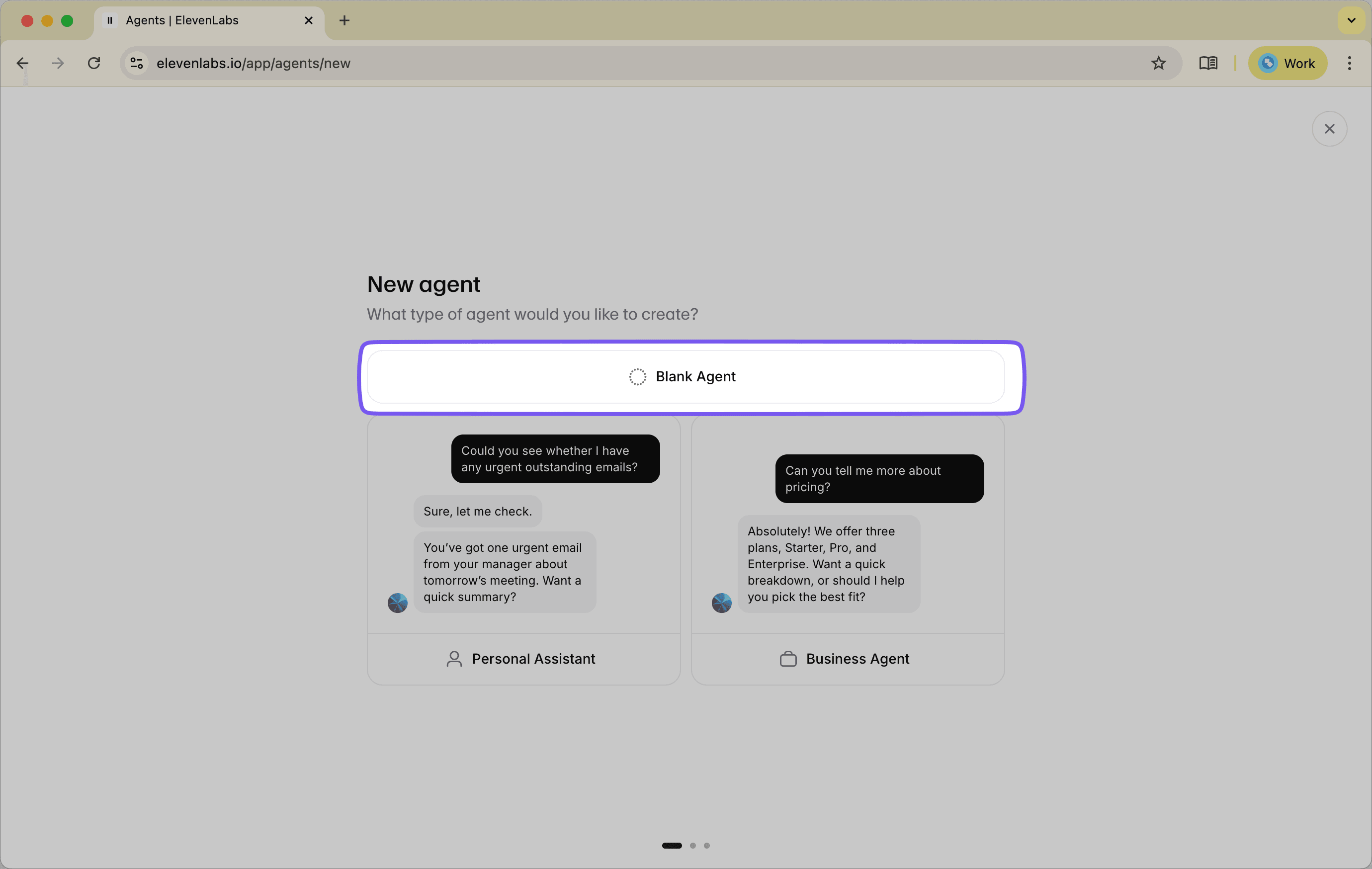

1.1. Create a New Agent

First, log in to your ElevenLabs account and navigate to the Agents Platform from the main dashboard. Here, you can manage your synthetic voices and AI agents. Select the option to create a new agent and choose "Blank Agent". This will open the main configuration interface where you will define every aspect of your agent, from its voice to its core instructions.

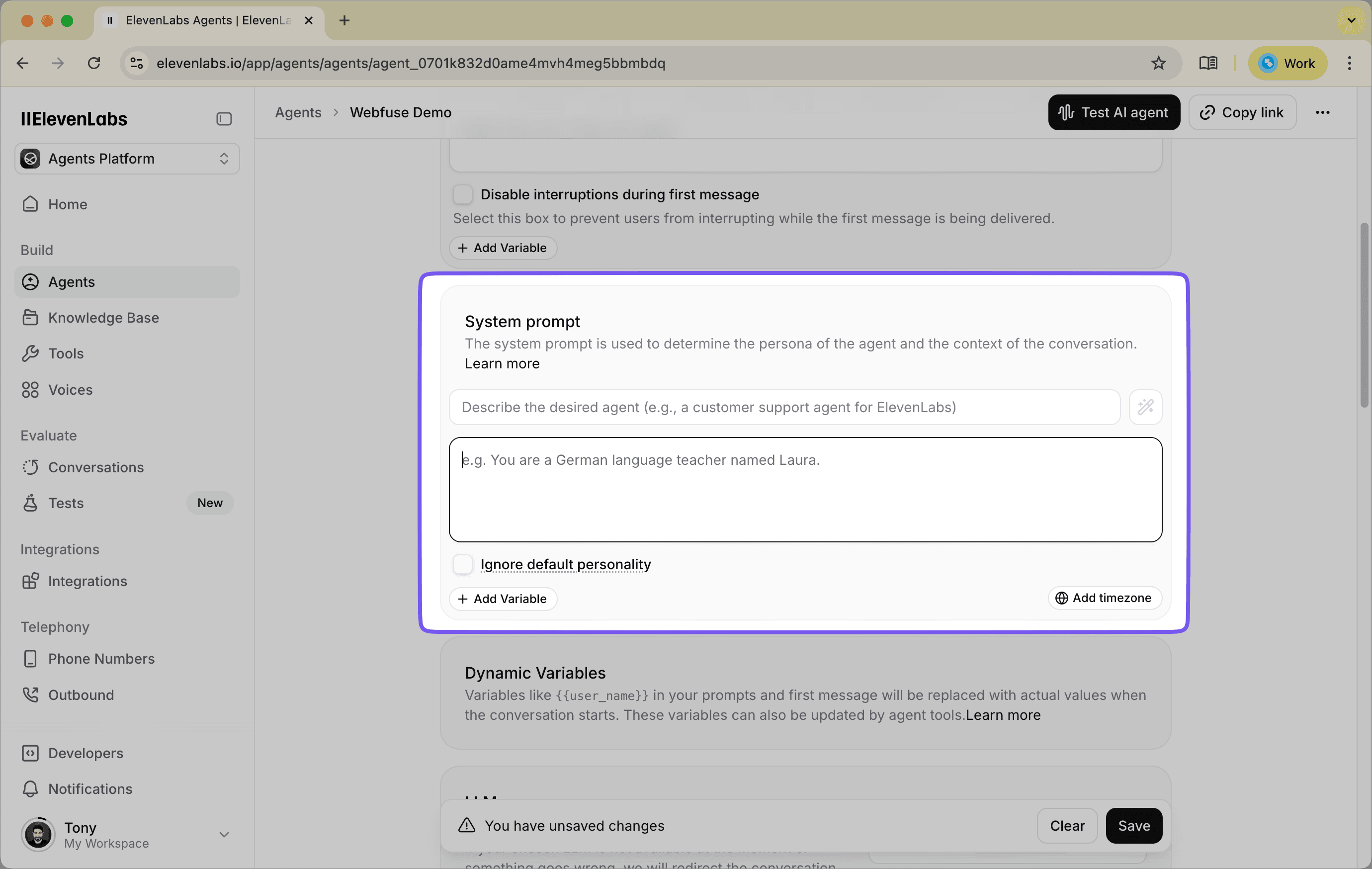

1.2. Set the System Prompt and Instructions

In the "Instructions" field, you will provide the agent's system prompt. This sets the agent's personality, goals, and its awareness of the tools it can use. A well-crafted prompt guides the agent to use its abilities effectively.

For our purpose, the prompt needs to tell the agent that it is an on-page assistant designed to interact with the current website. It also explicitly lists the tools it can use.

- Copy and paste the following prompt into the "Instructions" section:

You are a helpful on-page assistant.

Your goal is to help the user navigate and interact with the website they are currently viewing.

You have a set of tools to see the page content and perform actions like clicking and typing.

When a user asks you to do something on the page, use your tools to accomplish the task.

# Tools

`take_dom_snapshot`: You'll obtain the current HTML structure to understand what the user is seeing.

`left_click`: Click on a certain element on the page (focus an input field, activate a button).

`type`: Put certain TEXT input targeting an element, allowing you to fill forms.

`relocate`: Change the current webpage to a URL you specify.

The current page you are on should be available from the DOM snapshot below:

{{dom_snapshot}}

Note the {{dom_snapshot}} placeholder at the end. This is a special variable that creates a feedback loop. When the agent uses the take_dom_snapshot tool, the result will be injected back into this prompt for its next turn, giving it an updated "view" of the page.

ElevenLabs will prompt you to provide a placeholder value for the {{dom_snapshot}} dynamic variable. This value is only used for testing purposes within the ElevenLabs interface. You can enter any test value (e.g., "test" or "placeholder") to enable the save button. The actual DOM snapshot data will be populated automatically when the agent runs in your Webfuse session.
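To make the feedback loop concrete, the sketch below shows how a {{dom_snapshot}} placeholder could be filled in before each turn. It only illustrates the idea: renderPrompt is a hypothetical helper written for this article, and ElevenLabs performs the actual substitution on its side.

```javascript
// Hypothetical helper: replaces {{name}} placeholders with values from `variables`.
// Unknown placeholders are left untouched so that missing data is easy to spot.
function renderPrompt(template, variables) {
  return template.replace(/\{\{(\w+)\}\}/g, (match, name) =>
    Object.prototype.hasOwnProperty.call(variables, name)
      ? String(variables[name])
      : match
  );
}

const template = "The current page is:\n{{dom_snapshot}}";

// Turn 1: only the placeholder test value is available.
console.log(renderPrompt(template, { dom_snapshot: "placeholder" }));

// Turn 2: after take_dom_snapshot ran, the variable holds a real snapshot.
console.log(renderPrompt(template, { dom_snapshot: "<h1>Wikipedia</h1>" }));
```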

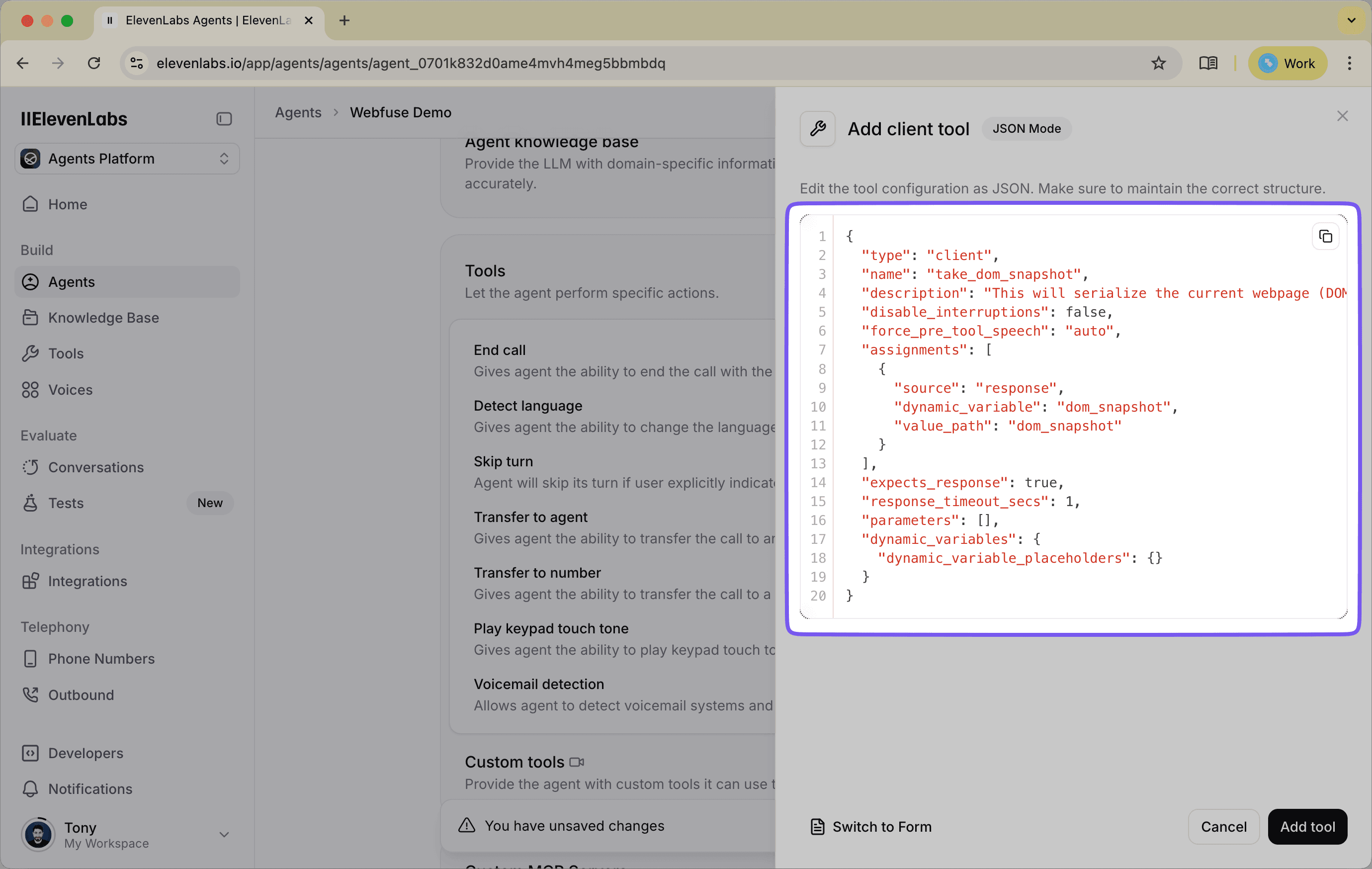

1.3. Define the Client-Side Tools

Next, scroll down to the "Tools" section. Here, we will declare each of the tools mentioned in the prompt. For our use case, each one is a "Client" tool, which tells ElevenLabs that the tool's code will be executed on the client side (in our case, by our Webfuse Extension).

For each tool below, click "Add Tool," select "Client" as the type, and then click the "Edit as JSON" button. Paste the corresponding JSON definition into the editor.

- Perception Tool: take_dom_snapshot

This tool gives the agent its "eyes." The description informs the model that this is how it can understand the content of the page.

{

"type": "client",

"name": "take_dom_snapshot",

"description": "This will serialize the current webpage (DOM) as a string. The output is a more succinct version that while keeping the original structure of the page will reduce the total size. It is a combination of hierarchical nodes and markdown format that represent the original HTML structure. \n\nFor example, when a user wants to understand the content of the page, you can take a DOM snapshot and then will be able to summarize the content or take further actions such as navigating over the website. ",

"disable_interruptions": false,

"force_pre_tool_speech": "auto",

"assignments": [

{

"source": "response",

"dynamic_variable": "dom_snapshot",

"value_path": "dom_snapshot"

}

],

"expects_response": true,

"response_timeout_secs": 1,

"parameters": [],

"dynamic_variables": {

"dynamic_variable_placeholders": {}

}

}

- Action Tool: left_click

This tool allows the agent to perform the most common web action: clicking an element.

{

"type": "client",

"name": "left_click",

"description": "Click on a certain element on the page with the LEFT mouse button. This requires a CSS selector to target the click.",

"disable_interruptions": false,

"force_pre_tool_speech": "auto",

"assignments": [],

"expects_response": false,

"response_timeout_secs": 1,

"parameters": [

{

"id": "selector",

"type": "string",

"value_type": "llm_prompt",

"description": "This is a CSS selector which can be obtained from understanding and retrieving the dom_snapshot",

"dynamic_variable": "",

"constant_value": "",

"enum": null,

"required": true

}

],

"dynamic_variables": {

"dynamic_variable_placeholders": {}

}

}

- Action Tool: type

This tool gives the agent the ability to enter text into form fields.

{

"type": "client",

"name": "type",

"description": "Make it possible to fill INPUT fields on the page and complete forms if need. Needs the content and a CSS selector ",

"disable_interruptions": false,

"force_pre_tool_speech": "auto",

"assignments": [],

"expects_response": false,

"response_timeout_secs": 1,

"parameters": [

{

"id": "text",

"type": "string",

"value_type": "llm_prompt",

"description": "The text that needs to put into the input",

"dynamic_variable": "",

"constant_value": "",

"enum": null,

"required": true

},

{

"id": "selector",

"type": "string",

"value_type": "llm_prompt",

"description": "This is a CSS selector which can be obtained from understanding and retrieving the dom_snapshot",

"dynamic_variable": "",

"constant_value": "",

"enum": null,

"required": true

}

],

"dynamic_variables": {

"dynamic_variable_placeholders": {}

}

}

- Action Tool: relocate

This tool allows the agent to navigate to a new webpage.

{

"type": "client",

"name": "relocate",

"description": "Navigate to a certain page in the current tab by changing its URL.",

"disable_interruptions": false,

"force_pre_tool_speech": "auto",

"assignments": [],

"expects_response": false,

"response_timeout_secs": 1,

"parameters": [

{

"id": "url",

"type": "string",

"value_type": "llm_prompt",

"description": "Defines the target URL to which the agent will navigate. This parameter requires a well-formed, absolute URL containing a valid protocol scheme. Example: https://www.google.com",

"dynamic_variable": "",

"constant_value": "",

"enum": null,

"required": true

}

],

"dynamic_variables": {

"dynamic_variable_placeholders": {}

}

}
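The relocate tool's url parameter asks for a well-formed, absolute URL with a valid protocol scheme. If you want to enforce that on the client before navigating, a check along the following lines could work. This is a minimal sketch: isAbsoluteHttpUrl is a hypothetical helper, and restricting the scheme to http and https is an assumption of this example, not a Webfuse requirement.

```javascript
// Returns true only for absolute http(s) URLs, e.g. "https://www.google.com".
function isAbsoluteHttpUrl(value) {
  try {
    const { protocol } = new URL(value); // throws on relative or malformed input
    return protocol === "http:" || protocol === "https:";
  } catch {
    return false;
  }
}

console.log(isAbsoluteHttpUrl("https://www.google.com")); // true
console.log(isAbsoluteHttpUrl("www.google.com"));         // false (no scheme)
console.log(isAbsoluteHttpUrl("javascript:alert(1)"));    // false (wrong scheme)
```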

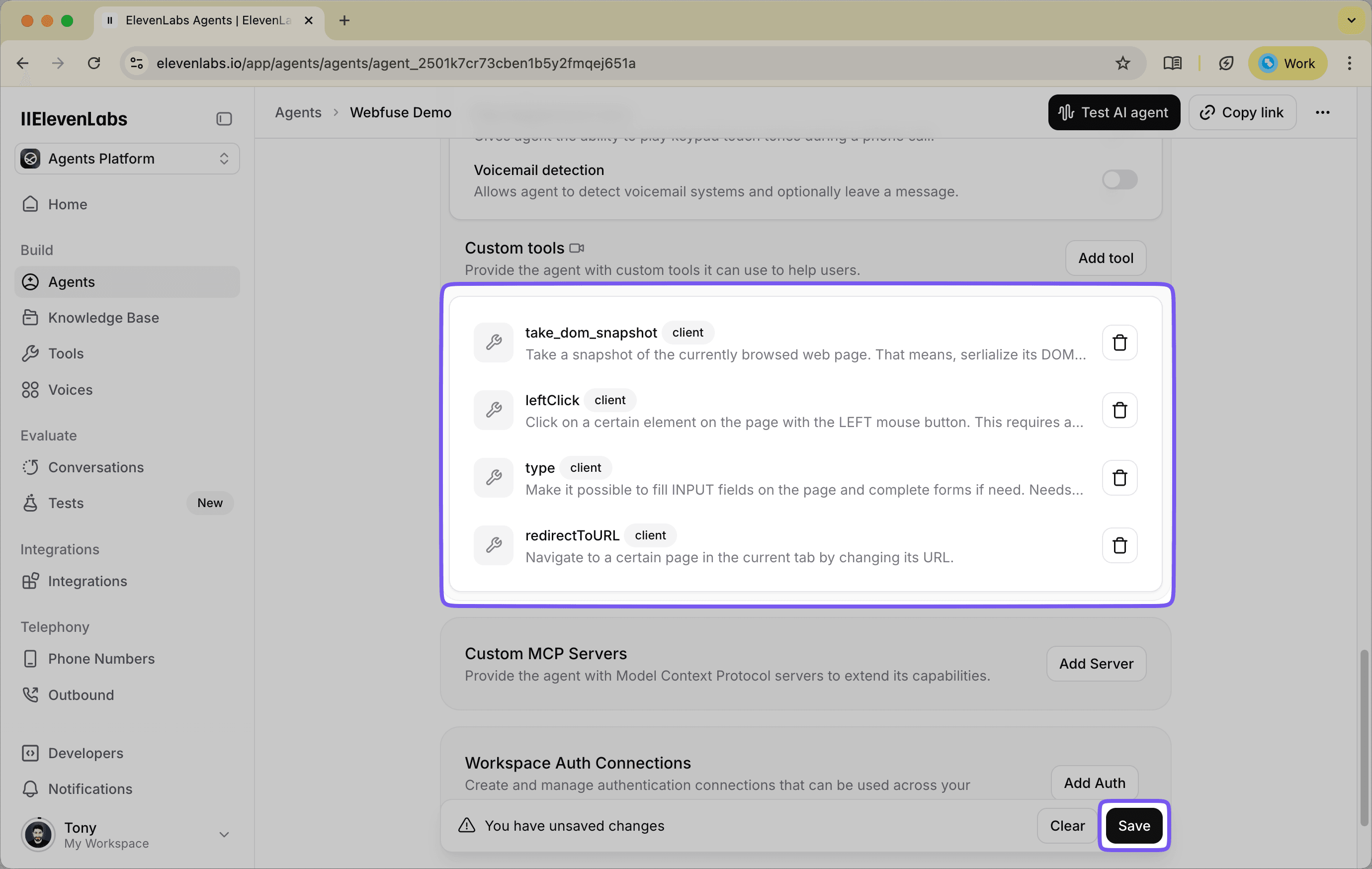

1.4. Save the Agent

Once you have set the instructions and defined all four tools, save your agent. You now have an agent that is conceptually aware of its capabilities. It understands what it can do, but it doesn't yet have the mechanism to execute these actions. The next step is to build the Webfuse Session Extension that will provide the actual implementation for these tool calls.

Step 2: Building the Webfuse Session Extension

With our agent configured in ElevenLabs, we now need to build the client-side component that will receive its commands and execute them. The Webfuse Session Extension is the bridge that connects the agent's abstract tool calls (e.g., "I want to left_click") to the concrete, executable commands of the Webfuse Automation API (e.g., browser.webfuseSession.automation.left_click(...)).
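Conceptually, this bridge is a lookup from a tool name to a function. The sketch below illustrates that idea with a hypothetical dispatchToolCall helper and a stub tool. In the real extension, the ElevenLabs widget performs this dispatch itself, so nothing like it has to be written by hand.

```javascript
// Hypothetical dispatcher: looks up a tool by name and calls it with the
// parameters chosen by the language model.
async function dispatchToolCall(clientTools, name, parameters = {}) {
  const tool = Object.prototype.hasOwnProperty.call(clientTools, name)
    ? clientTools[name]
    : undefined;
  if (typeof tool !== "function") {
    throw new Error(`No client tool registered under the name "${name}"`);
  }
  return tool(parameters);
}

// Stub standing in for the real implementation built later in this step.
const exampleTools = {
  async left_click({ selector }) {
    return `clicked ${selector}`;
  },
};

dispatchToolCall(exampleTools, "left_click", { selector: "#search" })
  .then((result) => console.log(result)); // clicked #search

dispatchToolCall(exampleTools, "leftClick", { selector: "#search" })
  .catch((error) => console.log(error.message)); // name mismatch is rejected
```

The second call shows why the tool names configured in ElevenLabs and the names implemented in the extension have to match exactly.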

We will create a small package of files that, when uploaded to Webfuse, will run inside the Virtual Web Session.

2.1. Set Up Your Project Folder

Create a new folder on your computer named elevenlabs-extension. Inside this folder, we will create the following files.

Note: The complete source code for this extension is available on GitHub at https://github.com/webfuse-com/elevenlabs-widget-extension. You can clone this repository directly if you prefer to skip the manual setup steps and dive straight into deployment.

2.2. The Manifest File (manifest.json)

Every extension, whether for a browser or Webfuse, requires a manifest file. This file tells the platform about the extension, including its name, version, what scripts and pages it needs to load, and any environment variables.

- Create a file named manifest.json and add the following content:

{

"manifest_version": 3,

"version": "1.0",

"name": "ElevenLabs Voice Agent",

"host_permissions": [

"https://*.elevenlabs.io/*",

"ws://*.elevenlabs.io/*"

],

"content_scripts": [

{

"js": ["content.js"],

"matches": ["<all_urls>"]

}

],

"action": {

"default_popup": "popup.html"

},

"env": [

{

"key": "AGENT_KEY",

"value": "YOUR_AGENT_ID_HERE"

}

]

}

- Replace YOUR_AGENT_ID_HERE with the actual Agent ID from the ElevenLabs agent you created in Step 1. You can find this ID in the Voice Lab.

The env section defines environment variables that can be customized directly in the Webfuse Studio interface when you upload the extension. This means you can set or update your agent ID through the UI without needing to modify and re-upload the extension files.
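Because the manifest ships with a placeholder value, it can be useful to fail early when the agent ID was never configured. The following sketch shows one way to do that. resolveAgentId is a hypothetical helper and "agent_123" is a made-up ID used only for illustration.

```javascript
// Hypothetical guard: returns the agent ID or throws a descriptive error.
function resolveAgentId(env) {
  const agentId =
    env && typeof env.AGENT_KEY === "string" ? env.AGENT_KEY.trim() : "";
  if (agentId === "" || agentId === "YOUR_AGENT_ID_HERE") {
    throw new Error(
      "AGENT_KEY is not set. Configure it in the extension's environment variables."
    );
  }
  return agentId;
}

// In the extension, `env` would be browser.webfuseSession.env.
console.log(resolveAgentId({ AGENT_KEY: "agent_123" })); // agent_123
```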

2.3. The Popup Page (popup.html)

This is the HTML file that will be used as the container for the ElevenLabs voice agent widget. It loads the necessary scripts and stylesheets.

- Create a file named popup.html and add the following content:

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="utf-8">

<meta name="viewport" content="width=device-width, initial-scale=1.0">

<title>ElevenLabs Voice Agent</title>

<link rel="stylesheet" href="./popup.css">

<script src="./popup.action.js"></script>

<script src="./popup.agent.js"></script>

<script src="https://unpkg.com/@elevenlabs/convai-widget-embed" async type="text/javascript"></script>

</head>

</html>

2.4. The Popup Styling (popup.css)

This CSS file provides basic styling for the agent widget, positioning it appropriately within the popup window.

- Create a file named popup.css and add the following content:

elevenlabs-convai {

transform: translateX(10px) translateY(-10px);

}

This simple transform adjusts the widget's position slightly, providing better visual spacing within the popup container.

2.5. The Popup Configuration (popup.action.js)

This script configures the appearance and behavior of the popup window itself when it opens.

- Create a file named popup.action.js and add the following code:

document

.addEventListener("DOMContentLoaded", () => {

browser.browserAction

.setPopupStyles({

backgroundColor: "#ffffff80",

borderRadius: "1rem",

});

browser.browserAction.resizePopup(450, 710);

browser.browserAction.detachPopup();

browser.browserAction.openPopup();

});

In detail, the script does the following:

- Sets the popup's visual style: It applies a semi-transparent white background and rounded corners for a modern, polished appearance.

- Resizes the popup: It sets the popup dimensions to 450×710 pixels, providing adequate space for the voice agent interface.

- Detaches the popup: This allows the popup to be dragged and repositioned by the user within the session.

- Opens the popup automatically: The popup will appear as soon as the session loads, making the agent immediately accessible.

2.6. The Core Logic (popup.agent.js)

This JavaScript file defines the client-side implementation of the tools we configured in ElevenLabs and passes them to the agent widget.

- Create a file named popup.agent.js and add the following code:

const CLIENT_TOOLS = {

async take_dom_snapshot() {

// First, get a full snapshot of the page

const fullSnapshot = await browser.webfuseSession

.automation

.take_dom_snapshot();

// Estimate the snapshot size in tokens (roughly 4 characters per token)

// If it is below 2**15 (32768) tokens, return it as-is. Otherwise, get a downsampled version.

return ((fullSnapshot.length / 4) < 2**15)

? fullSnapshot

: browser.webfuseSession

.automation

.take_dom_snapshot({

modifier: "downsample"

});

},

async left_click({ selector }) {

// Calls the Webfuse API to perform a click on the specified element

// The second parameter (true) ensures the action waits for completion

return browser.webfuseSession

.automation

.left_click(selector, true);

},

async type({ text, selector }) {

// Calls the Webfuse API to type text into the specified element

// The third parameter (true) ensures the action waits for completion

return browser.webfuseSession

.automation

.type(text, selector, true);

},

relocate({ url }) {

// This tool uses a standard Webfuse Session API call to change the page URL

browser.webfuseSession.relocate(url);

}

};

function init() {

// Dynamically create the ElevenLabs widget element

const el = document.createElement("elevenlabs-convai");

// Set the agent ID from the environment variable defined in manifest.json

el.setAttribute("agent-id", browser.webfuseSession.env.AGENT_KEY);

// Add the widget to the page

document.body.appendChild(el);

// Listen for the 'call' event, which is fired when the agent is activated

el.addEventListener("elevenlabs-convai:call", (event) => {

// Inject our CLIENT_TOOLS object into the agent's configuration

event.detail.config.clientTools = CLIENT_TOOLS;

});

}

document.addEventListener("DOMContentLoaded", init);

To recap, popup.agent.js does the following:

- Defines the CLIENT_TOOLS object: The keys of this object (take_dom_snapshot, left_click, etc.) must exactly match the names of the tools you defined in ElevenLabs. The values are functions that call the corresponding Webfuse API methods, most of them on browser.webfuseSession.automation.

- Implements intelligent snapshot handling: The take_dom_snapshot tool first attempts to get a full snapshot, then checks its size. If it's too large (which could cause issues with the agent's context window), it automatically requests a downsampled version instead.

- Creates the widget dynamically: Rather than hardcoding the widget in HTML, the script creates it programmatically and retrieves the agent ID from the environment variable we defined in the manifest.

- Injects the tools: When a user starts a conversation, it intercepts the call configuration and injects our CLIENT_TOOLS object, making the tools available to the agent.
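The size check in take_dom_snapshot can be read as simple arithmetic. The sketch below isolates it. The division by four presumably reflects the common rule of thumb of about four characters per token, which is an interpretation rather than something the Webfuse API defines, and estimateTokens and needsDownsampling are hypothetical helper names.

```javascript
const MAX_ESTIMATED_TOKENS = 2 ** 15; // 32768, the threshold used in popup.agent.js

// Rough estimate: about four characters per token (a heuristic, not a tokenizer).
function estimateTokens(text) {
  return text.length / 4;
}

// Mirrors the condition in take_dom_snapshot: anything at or above the
// threshold is replaced by a downsampled snapshot.
function needsDownsampling(snapshot) {
  return estimateTokens(snapshot) >= MAX_ESTIMATED_TOKENS;
}

console.log(needsDownsampling("x".repeat(100000))); // false: 25000 estimated tokens
console.log(needsDownsampling("x".repeat(200000))); // true: 50000 estimated tokens
```

With these numbers, a snapshot is passed through unchanged as long as it stays under 131072 characters.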

2.7. The Content Script (content.js)

The manifest file references a content.js file as a content script. For this basic implementation, we don't need any additional logic running on the target webpages themselves—all our agent's functionality is contained within the popup.

- Create an empty file named content.js in your elevenlabs-extension folder.

This file is required by the manifest but can remain empty for now. As you expand your agent's capabilities in the future, you might use this file to inject custom behavior directly into the pages the user visits, such as highlighting elements the agent is about to interact with or adding visual feedback during automation.
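As a taste of what such a content script might contain, here is a minimal sketch of an element highlighter. highlightElement is a hypothetical helper. How the popup would tell the content script which selector to highlight is not covered here and would depend on the messaging options Webfuse provides.

```javascript
// Hypothetical helper for content.js: outlines an element and returns a
// function that restores the previous outline.
function highlightElement(element, color = "#ff9800") {
  const previousOutline = element.style.outline;
  element.style.outline = `3px solid ${color}`;
  return function restore() {
    element.style.outline = previousOutline;
  };
}

// In a real page the element would come from document.querySelector(selector).
// A plain object stands in for it here so the sketch runs anywhere.
const fakeElement = { style: { outline: "" } };
const restore = highlightElement(fakeElement);
console.log(fakeElement.style.outline); // 3px solid #ff9800
restore();
console.log(fakeElement.style.outline === ""); // true
```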

Step 3: Deploying and Testing Your Agent in Webfuse

With the ElevenLabs agent configured and the Webfuse Session Extension built, the final step is to bring them together in a live environment. We will now create a Webfuse SPACE, upload our extension, and launch a session to test our agent's ability to perceive and control a website.

3.1. Create a Webfuse SPACE

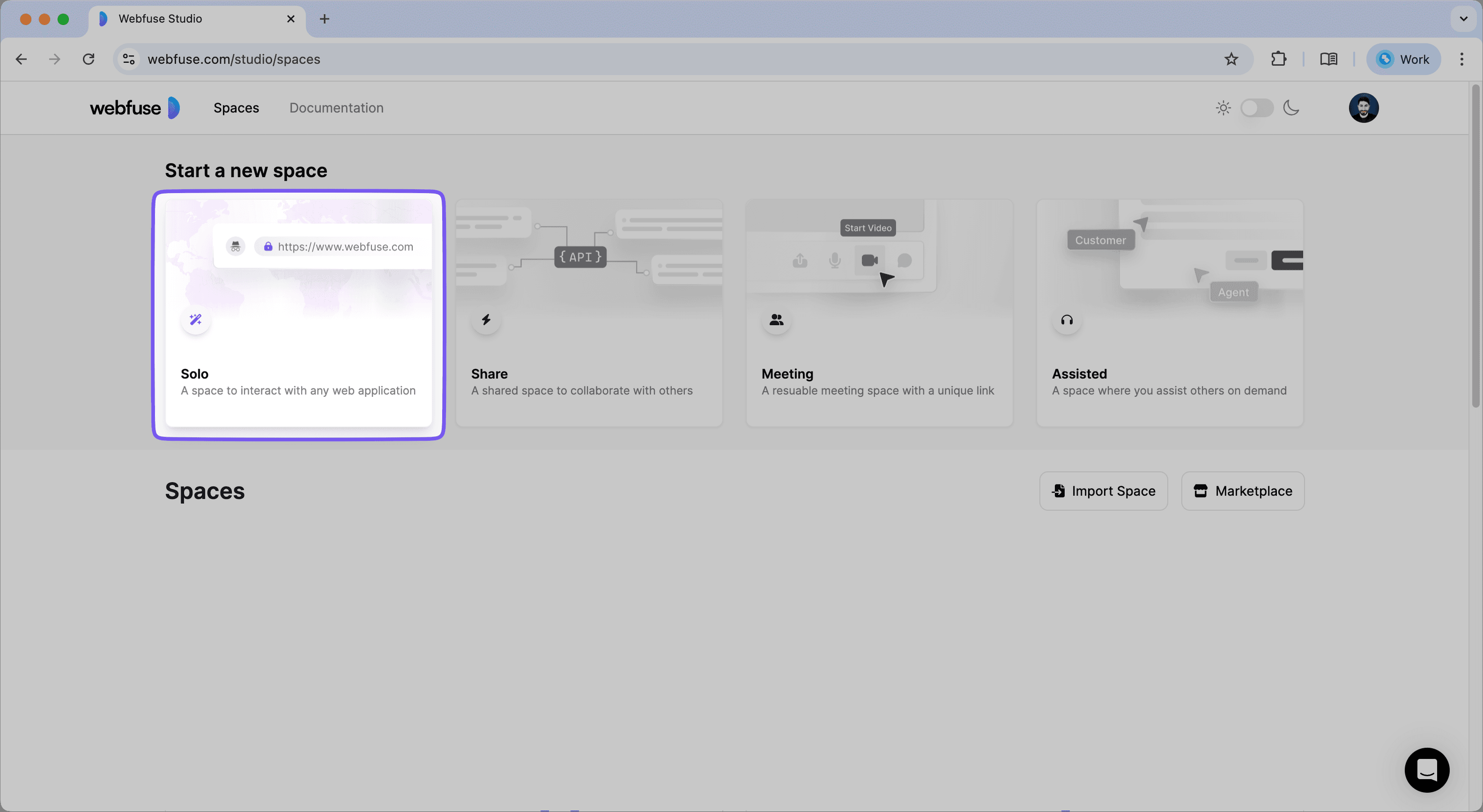

First, log into the Webfuse Studio. A SPACE is the container for your augmented browsing sessions.

- From the main dashboard, create a new SOLO SPACE. A SOLO space is designed for a single user, which is perfect for this use case.

- Give your SPACE a memorable name, such as "ElevenLabs Agent Demo."

3.2. Upload the Session Extension

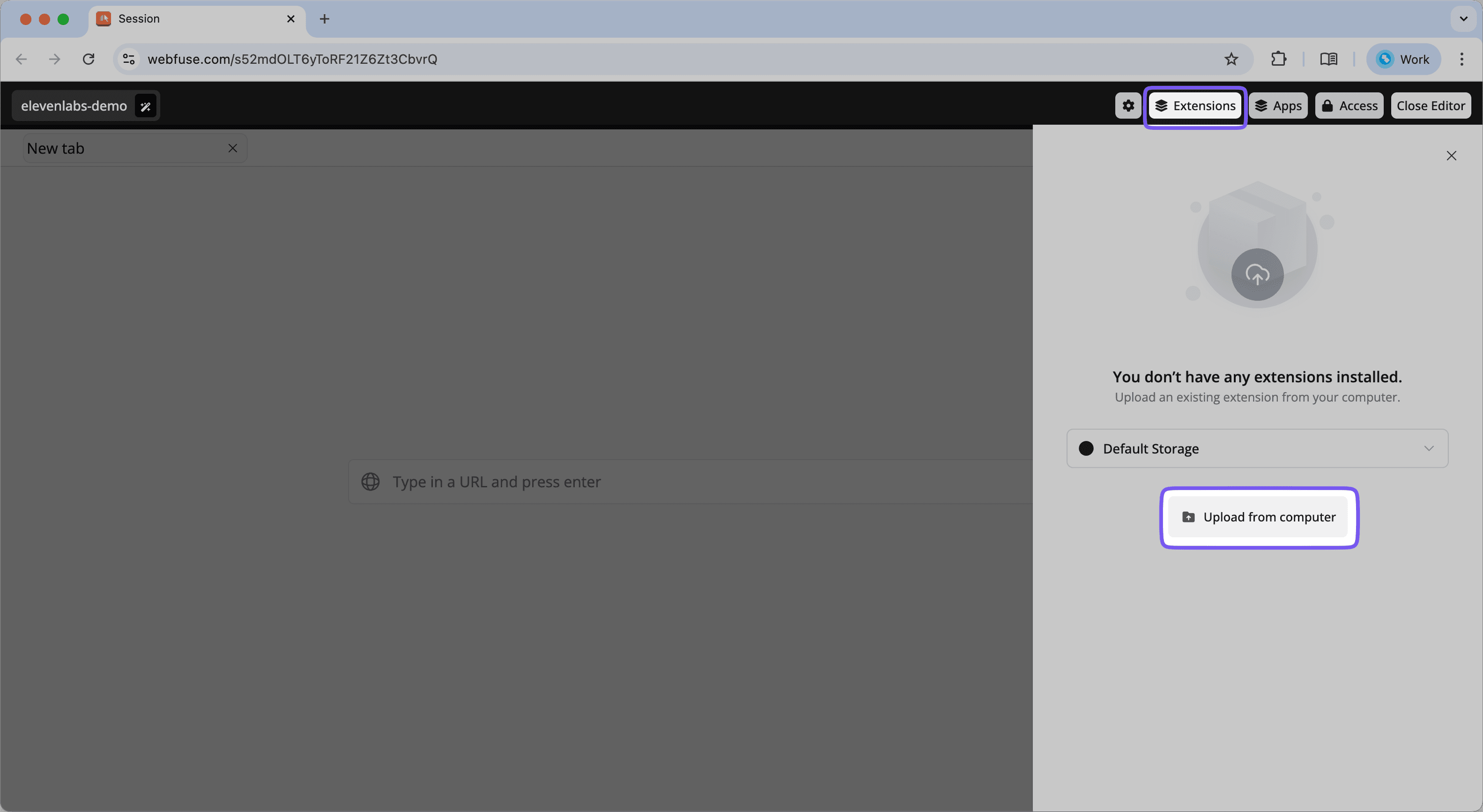

Now we will deploy the extension we just built into our new SPACE.

Note: For the time being, extensions can only be uploaded to Webfuse using Google Chrome.

- Navigate to your newly created SPACE in the Webfuse Studio and open the Session Editor.

- In the editor, find and click on the "Extensions" tab.

- Click the "Upload Extension" button. You will be prompted to select a folder from your computer.

- Select the elevenlabs-extension folder you created in Step 2.

Webfuse will upload and install the extension into your SPACE. It is now configured to load automatically for any session initiated within this SPACE.

3.3. Launch a Session and Interact with Your Agent

The setup is complete. It's time to test our creation.

- Get the SPACE Link: In the main view for your SPACE, you will find its unique SPACE LINK. This is the public URL that launches a session. Copy this link.

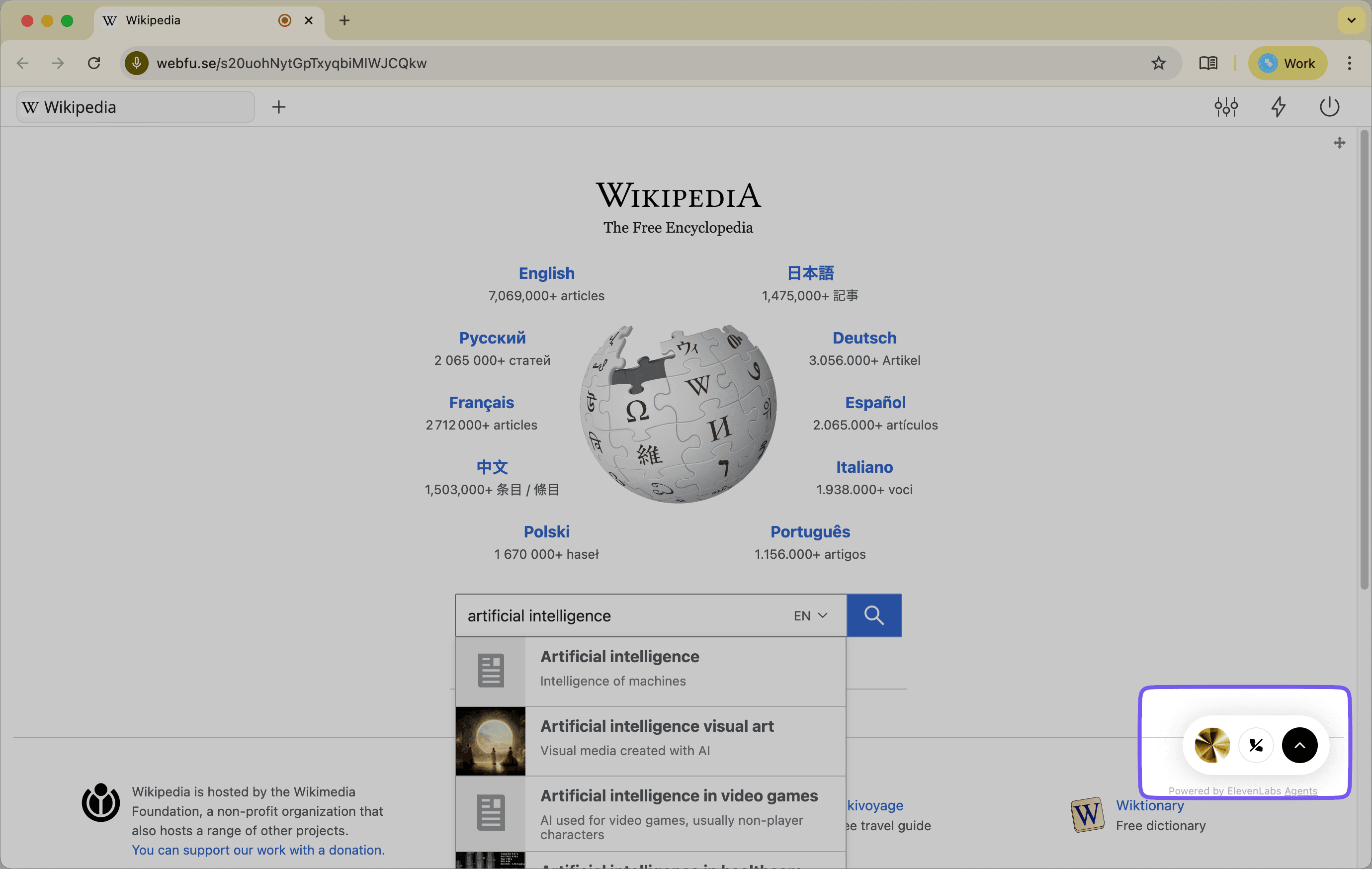

- Start the Session: Open a new browser tab and paste the SPACE LINK. This will start a new Virtual Web Session. By default, it will load a blank "New Tab" page.

- Navigate to a Target Website: Within the virtual session's URL bar, navigate to a well-known website to test on, such as https://www.wikipedia.org.

- Activate the Agent: After a moment, the ElevenLabs voice agent widget and our extension's popup window will appear on the screen. Click the microphone button on the agent widget to begin a conversation.

3.4. Test the Agent's Capabilities

Now, give your agent a series of commands to test its perception and action tools.

- Test Perception:

- Say: "What is on this page?"

- What to expect: The agent will call the take_dom_snapshot tool. The Webfuse Extension will execute the command and send the structured DOM back to the agent. The agent should then be able to summarize the content of the Wikipedia homepage.

- Test Action (Typing and Clicking):

- Say: "Find the search bar and type 'artificial intelligence'."

- What to expect: The agent will analyze the DOM snapshot to find the CSS selector for the search input. It will then call the type tool. You should see the text "artificial intelligence" appear in the search bar.

- Next, say: "Now click the search button."

- What to expect: The agent will find the selector for the search button and call the left_click tool. The page should then navigate to the search results.

Congratulations! You have successfully built and deployed a voice agent that can perceive and control a third-party website. It operates directly within the user's live session, providing a seamless and interactive experience. From here, you can expand its capabilities by adding new tools, refining its system prompt, or integrating it into more complex workflows. This prototype serves as a powerful foundation for the future of AI-driven web assistance.

Next Steps: From Prototype to a Polished Assistant

You have successfully built a functional prototype, but this is just the beginning. The architecture you've put in place is highly extensible, allowing you to create far more sophisticated and specialized agents. Here are several ways you can build upon this foundation to create a more robust and polished assistant.

4.1. Refine the System Prompt for Greater Intelligence

The agent's system prompt is its guiding constitution. The more detailed and clear its instructions, the more reliably it will perform.

- Add "Guardrails": Explicitly tell the agent what it should not do. For example, add instructions like, "Do not provide financial advice," or "Do not ask for sensitive personal information like passwords or social security numbers." This is critical for building a safe and trustworthy user experience.

- Provide Task-Specific Examples: If you are designing an agent for a specific workflow (e.g., filling out a life insurance application), you can provide examples within the prompt. Describe the steps and the kind of information it needs to look for on the page. For instance: "When filling out the birthdate, look for separate fields for month, day, and year."

- Improve its Personality: Define a clear personality for the agent. Is it a "polite and professional assistant"? Or a "friendly and encouraging guide"? Adding a "Personality" or "Tone" section to the prompt will make its responses more consistent and engaging.

4.2. Expand the Toolset for More Complex Tasks

The four tools we implemented are the core building blocks, but you can create custom tools for almost any web-based task. Consider adding new tools to your CLIENT_TOOLS object in popup.agent.js and defining them in ElevenLabs:

- A Data Extraction Tool: Create a getDataFromSelector tool that extracts the innerText of an element. The agent could then use this to read information back to the user, such as an order number or account balance.

- A Multi-Step Action Tool: For a common workflow, you could combine several actions into a single tool. For example, a login tool could take a username and password, find the respective fields, type the information, and click the submit button, all in one call.

- A Conditional Logic Tool: Create a tool that checks for the existence of an element on the page, like doesElementExist(selector). The agent could use this to verify if it's on the correct page before proceeding with an action.
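As an illustration of the multi-step idea, the sketch below builds a login tool out of the same type and left_click calls used in popup.agent.js. createLoginTool, the selectors, and the stub automation object are all hypothetical. In the extension you would pass browser.webfuseSession.automation instead of the stub, and you would also need to declare a matching login tool in ElevenLabs.

```javascript
// Hypothetical factory: builds a `login` tool from an automation object that
// exposes type(text, selector, wait) and left_click(selector, wait), matching
// the calls used in popup.agent.js.
function createLoginTool(automation, selectors) {
  return async function login({ username, password }) {
    await automation.type(username, selectors.username, true);
    await automation.type(password, selectors.password, true);
    await automation.left_click(selectors.submit, true);
    return "login form submitted";
  };
}

// Stub automation object that records calls instead of touching a page.
const calls = [];
const stubAutomation = {
  async type(text, selector) { calls.push(["type", selector]); },
  async left_click(selector) { calls.push(["left_click", selector]); },
};

const login = createLoginTool(stubAutomation, {
  username: "#username", // placeholder selectors, adjust to the target page
  password: "#password",
  submit: "button[type=submit]",
});

login({ username: "demo", password: "secret" })
  .then((result) => console.log(result, calls.length)); // login form submitted 3
```

Fixing the selectors when the tool is created keeps the agent's job simple: it only has to supply the two values, not rediscover the form layout on every call.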

4.3. Customize the Agent's Appearance and Behavior

The Session Extension gives you full control over the agent's presentation within the virtual session.

- Style the Popup: The popup.html file can be enhanced with custom CSS. You can change its size, position, and styling to match your brand. You could even add custom HTML elements to the popup, such as a "Help" button or a list of suggested commands.

- Programmatically Control the Popup: The Webfuse Extension API includes methods to control the popup window itself. In your popup.agent.js, you can add commands like browser.browserAction.resizePopup(width, height) to dynamically change its size or browser.browserAction.detachPopup() to allow the user to drag it around the screen.

By iteratively improving the agent's instructions, expanding its capabilities with new tools, and refining its user interface, you can transform this working prototype into a powerful, production-ready assistant tailored to your specific business needs.

Conclusion: You've Built an Interactive Co-Pilot

By following this guide, you have successfully assembled and deployed a truly interactive, session-aware voice agent. You have moved beyond the realm of simple conversational chatbots and built a genuine co-pilot for the web—an AI that can not only understand a user's requests but can also perceive their digital environment and act on their behalf in real time.

Let's recap the key architectural components that made this possible:

- The ElevenLabs Voice Agent: This served as the "brain," providing the advanced natural language understanding to interpret user intent and select the appropriate tool for the job.

- The Webfuse Session Extension: This was the crucial bridge, the "nervous system" connecting the agent's brain to its digital hands and eyes. It provided the client-side implementation for the agent's tools.

- The Webfuse Platform: This provided the secure, sandboxed environment—the "body"—where the agent could safely interact with any third-party website without requiring backend integration or end-user installations.

This hands-on process demonstrates that the creation of sophisticated AI assistants is no longer a purely theoretical exercise. The tools and platforms are now available to build practical, powerful solutions that can fundamentally change how users interact with web applications. You now have a working prototype and a clear path forward for customization and enhancement. The foundation is laid; what you build upon it is limited only by the workflows you wish to automate and the experiences you want to create.

For more strategies on deploying voice agents across different industries and use cases, explore our Universal Voice Agent Deployment guide.
