TL;DR: We built a tool on top of Webfuse and Microsoft Copilot that lets you automate tasks on the web. It can handle internet-based tasks (e.g., “Book me a flight ticket to Amsterdam next Tuesday”, “Buy a gift for my brother-in-law on Amazon”, “Order me a pizza”) directly in your browser, with no installation required. In this article, I’ll cover our approach, challenges, highlights, and surprises along the way.

Intro

A few weeks ago, my colleagues and I attended a hackathon at Microsoft HQ for their clients and partners. We didn’t have much information about the event’s conditions or rules, but we were excited to showcase the innovative work we’re doing at Surfly.

It turned out that we built a tool aligned with emerging trends in autonomous agents. This timing coincided with Microsoft releasing a set of autonomous agents, Google introducing Gemini 2 for the agentic era, OpenAI unveiling Swarm, and Claude taking agentic AI to the next level with its computer use skills — from moving a mouse cursor around the screen to clicking buttons and typing text using a virtual keyboard.

Typically, AI agents follow a three-step process:

- Determine the goal through a user-specified prompt.

- Figure out how to approach that objective by breaking it down into smaller, simpler subtasks and collecting the needed data.

- Execute tasks making use of any functions they can call or tools they have at their disposal.
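The three steps above can be sketched as a small loop. This is only an illustration of the general pattern, not our implementation: `runAgent`, `planner`, and `tools` are hypothetical names, and the planner stands in for whatever model call decides the next subtask.

```javascript
// Minimal agent loop: goal -> plan the next subtask -> execute it with a tool.
// `planner` and `tools` are hypothetical stand-ins supplied by the caller.
async function runAgent(goal, planner, tools, maxSteps = 10) {
  const history = [];
  for (let step = 0; step < maxSteps; step++) {
    // Ask the planner for the next action, given the goal and what happened so far.
    const action = await planner(goal, history);
    if (action.tool === 'finish') {
      return { done: true, history };
    }
    // Only accept tools that were explicitly registered.
    if (!Object.prototype.hasOwnProperty.call(tools, action.tool)) {
      history.push({ action, error: `unknown tool: ${action.tool}` });
      continue;
    }
    const result = await tools[action.tool](action.args);
    history.push({ action, result });
  }
  // Step budget exhausted without the planner declaring the goal reached.
  return { done: false, history };
}
```

The step budget is a deliberate design choice here: an agent that never emits `finish` stops after `maxSteps` instead of looping forever.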

To put it simply, we wanted our solution to both understand and act. This is exactly where the power of Webfuse sessions lies: since a session has access to the source code of any web application it loads, we can inject custom code that does both.

How it works end-to-end

Before diving into the implementation details, there are two core concepts you need to grasp:

- Webfuse Extensions

- Webfuse Agent

We will focus on Webfuse extensions in the first part of this article and explore Webfuse Agent in the second part.

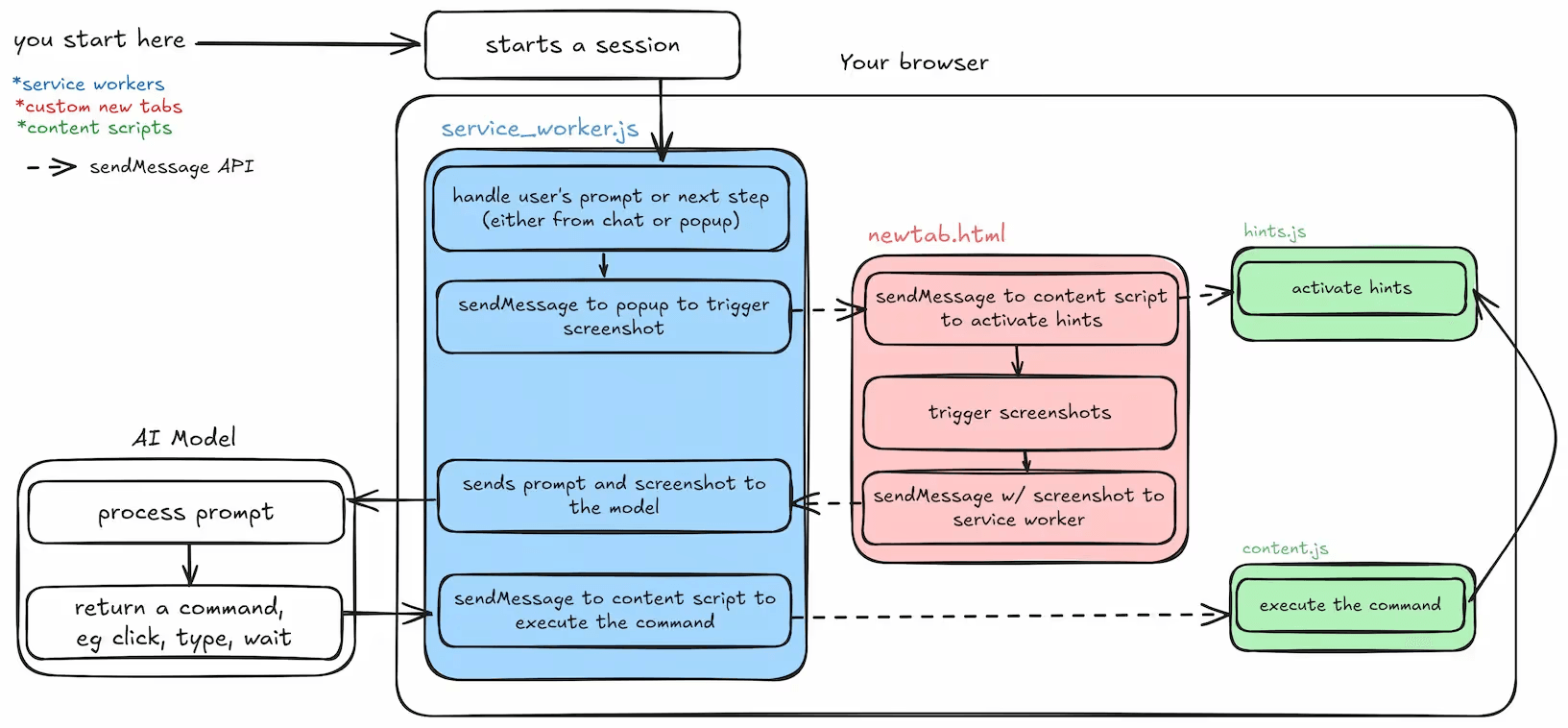

If you’re unfamiliar with Webfuse extensions or just curious to learn more, check out our documentation. In short, a Webfuse extension is browser-agnostic and works seamlessly across platforms, including mobile devices. It mirrors the familiar structure of traditional browser extensions, which makes it easy to pick up if you’ve worked with Chrome extensions before. It includes:

- Content scripts for injecting logic into web pages.

- Action popups for user interactions.

- Service workers to handle background tasks.

- Custom start pages to initialize workflows.

- Communication APIs to enable messaging between components.

The game-changer here is that with a Webfuse session, users no longer need to install an extension themselves. The full functionality can be packaged under a new Webfuse link, which takes care of loading the extension code on top of a predefined target application.

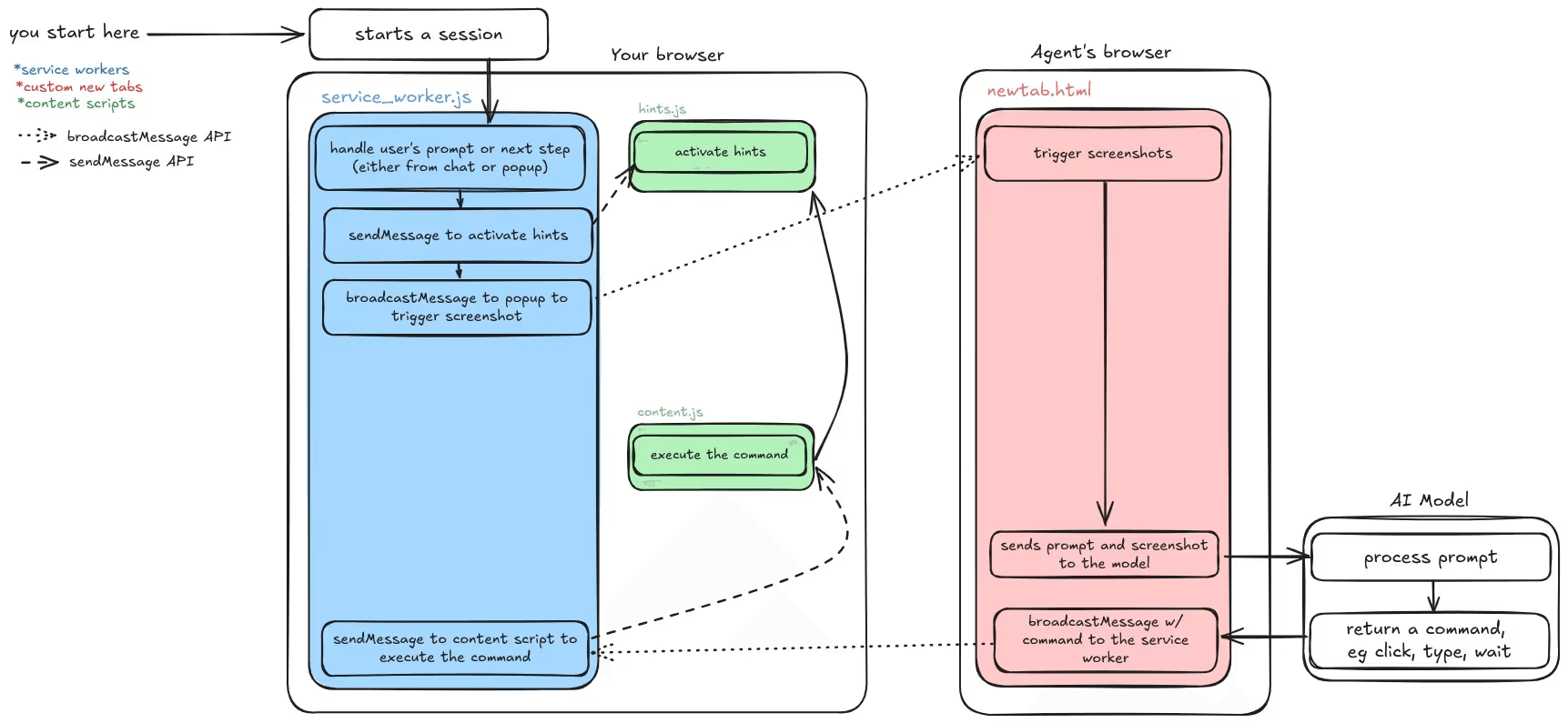

Let’s look at an overview of how Webfuse extensions can solve the problem:

Webfuse Extension Components

Now let’s dive into the extension and explore the code. Just like any browser extension, ours starts with a manifest.json file:

{

"manifest_version": 3,

"name": "Next Remote Agent",

"version": "1.0",

"action": {

"default_popup": "popup.html"

},

"background": {

"service_worker": "background.js"

},

"content_scripts": [

{

"js": ["hints.js", "content.js"]

}

],

"chrome_url_overrides": {

"newtab": "newtab.html"

}

}

As you can see, this looks just like any other browser extension’s manifest file. It includes all the components you’d expect — background scripts, content scripts, a popup, and a custom new tab page.

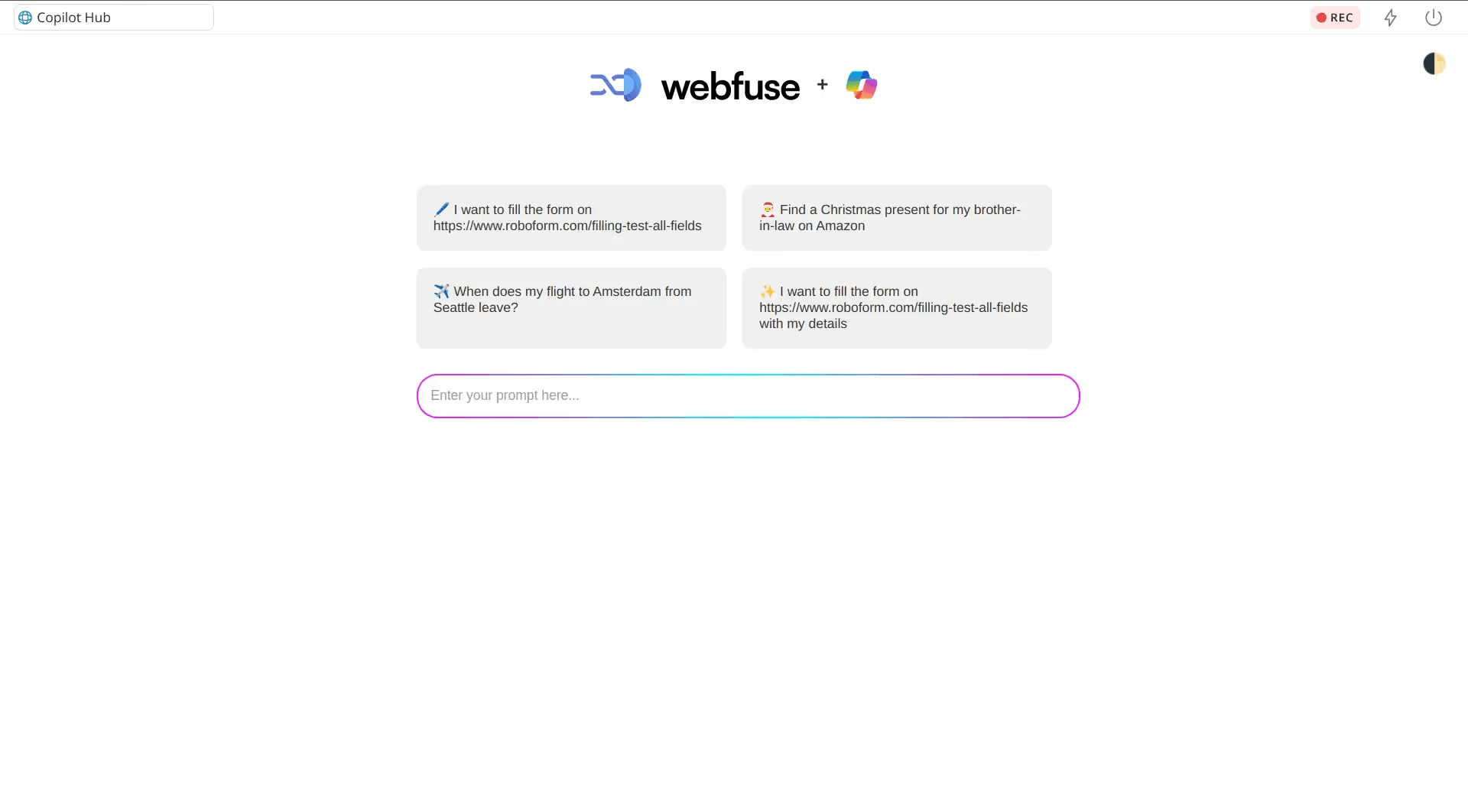

Custom newtab.html: Handling the Initial Prompt

The newtab.html page is loaded on the user’s side as the start page. It acts as the user-facing entry point where the process begins. This component is optional: you could also skip this step and start execution directly on a predefined target website.

What it does:

- Displays a simple HTML form.

- Captures user input (e.g., “Book me a flight”).

- Sends this input to the agent’s API via the Webfuse Message API to initialize the process.

Here’s the code for the start page:

<div class="container">

<div class="cards">

<div class="card">✈️ Book flight tickets to Amsterdam next Tuesday</div>

<div class="card">🎅 Find a Christmas present for my brother-in-law</div>

<!-- Other cards -->

</div>

<form id="prompt-form">

<input type="text" class="prompt-input" placeholder="Enter your prompt here...">

</form>

</div>

<script>

document.addEventListener('DOMContentLoaded', () => {

if (!isAgent) {

// Use Webfuse Agent API to invite agent

}

});

document.querySelectorAll('.card').forEach(card => {

card.addEventListener('click', () => {

document.querySelector('.prompt-input').value = card.textContent;

document.querySelector('.prompt-input').focus();

});

});

document.getElementById('prompt-form').addEventListener('submit', function(e) {

e.preventDefault();

const prompt = this.querySelector('.prompt-input').value;

startWithPrompt(prompt);

this.querySelector('.prompt-input').value = '';

window.location.href = "https://www.google.com";

});

function startWithPrompt(prompt) {

// Use Webfuse Message API to send the prompt

// https://surfly.com/api/schema/swagger-ui/#/Space%20Session/spaces_sessions_message_create

}

</script>

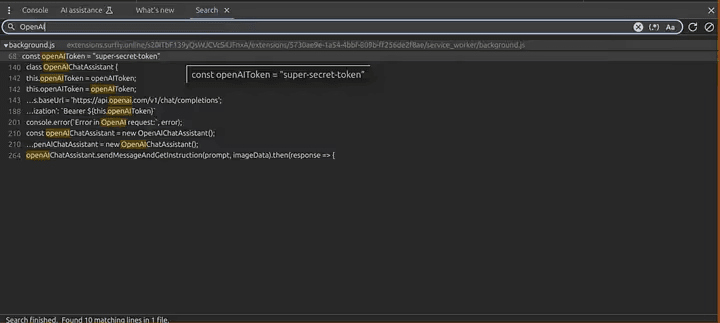

Service worker (background.js): Handle local commands

Extension service workers are an extension’s central event handler. A service worker runs in a ServiceWorkerGlobalScope, a special kind of worker context that runs off the main script execution thread and has no DOM access.

We use the service worker to initialize communication, receive commands from the agent, and propagate them to the tabs, where the content scripts handle them.

let prompt = '';

let autoNext = false;

let sendScreenshots = false;

const openAIToken = "<your Open AI token>"

const systemPrompt = `<see repository for system prompt>`;

class OpenAIChatAssistant {

constructor() {

this.openAIToken = openAIToken;

this.baseUrl = 'https://api.openai.com/v1/chat/completions';

this.chatHistory = [{ role: 'system', content: systemPrompt }];

}

// See source code for full implementation

}

const openAIChatAssistant = new OpenAIChatAssistant();

surflyExtension.surflySession.onMessage.addListener(message => {

console.log('[background.js] message received: ', message);

if (message.event_type === 'chat_message') {

if (message.message[0] === '/') {

const command = message.message.split(' ')[0].slice(1);

const params = message.message.split(' ').slice(1);

switch (command) {

case 'start':

case 'prompt':

case 's':

case 'p':

prompt = params.join(' ');

next();

break;

case 'next':

case 'n':

next();

break;

case 'hints':

case 'h':

surflyExtension.tabs.sendMessage(null, {event_type: 'command', command: 'hints'});

break;

case 'auto':

case 'a':

autoNext = params[0] === 'on';

break;

case 'screenshots':

case 'ss':

sendScreenshots = params[0] === 'on';

break;

case 'answer':

nextAction(params.join(' '));

break;

case 'help':

// No short alias here: 'h' is already claimed by /hints above, so a second case 'h' would never match.

surflyExtension.surflySession.apiRequest({cmd: 'send_chat_message', message: `✨ Agent: Available commands: /start <prompt>, /next, /hints, /auto <on|off>, /screenshots <on|off>, /help`});

break;

}

console.log('[background.js] command: ', command, 'params: ', params);

}

}

});

function next() {

surflyExtension.surflySession.apiRequest({cmd: 'send_chat_message', message: `✨ Agent: Looking at the webpage..`});

surflyExtension.runtime.sendMessage({event_type: 'capture_screen', sendScreenshots});

}

function nextAction(prompt, imageData) {

surflyExtension.surflySession.apiRequest({cmd: 'send_chat_message', message: `✨ Agent: Deciding what to do next..`});

openAIChatAssistant.sendMessageAndGetInstruction(prompt, imageData).then(response => {

surflyExtension.surflySession.apiRequest({cmd: 'send_chat_message', message: `✨ Agent: ${response.explanation}`});

surflyExtension.tabs.sendMessage(null, {event_type: 'command', ...response});

if (sendScreenshots) {

surflyExtension.surflySession.apiRequest({cmd: 'send_chat_message', message: `✨ Agent: Adding screenshot to the popup..`});

surflyExtension.runtime.sendMessage({event_type: 'add_screenshot', image_data: imageData, response});

}

if (autoNext) {

setTimeout(() => {

next();

}, 3000);

}

});

}

surflyExtension.runtime.onMessage.addListener((message, sender) => {

console.log('[background.js] message received: ', message);

if (message.event_type === 'screenshot') {

const imageData = message.image_data;

nextAction(prompt, imageData);

}

});
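The `OpenAIChatAssistant` class above leaves out `sendMessageAndGetInstruction`. As a rough sketch of what the request and response handling could look like (not the code from our repository), the helpers below build a Chat Completions request body that pairs the prompt with a screenshot data URL, and parse a JSON instruction out of the reply. `buildVisionRequest`, `parseInstruction`, and the default model name are assumptions made for illustration; the sketch also assumes the system prompt tells the model to answer with a single JSON object.

```javascript
// Build a Chat Completions request body that pairs the user's goal with a screenshot.
// Function name and default model are illustrative assumptions.
function buildVisionRequest(chatHistory, prompt, imageData, model = 'gpt-4o') {
  const content = [{ type: 'text', text: prompt }];
  if (imageData) {
    // A data URL (such as the one returned by canvas.toDataURL) is sent as an image_url part.
    content.push({ type: 'image_url', image_url: { url: imageData } });
  }
  return {
    model,
    // Keep the earlier turns (including the system prompt) and append the new user turn.
    messages: [...chatHistory, { role: 'user', content }],
  };
}

// Extract the JSON instruction from a Chat Completions response object.
// Assumes the model replied with a single JSON object as plain text.
function parseInstruction(responseJson) {
  const text = responseJson?.choices?.[0]?.message?.content;
  if (typeof text !== 'string') {
    throw new Error('No message content in response');
  }
  return JSON.parse(text);
}
```

The body returned by `buildVisionRequest` would then be sent with `fetch(this.baseUrl, { method: 'POST', headers: { 'Content-Type': 'application/json', Authorization: 'Bearer ' + this.openAIToken }, body: JSON.stringify(body) })`, and the parsed instruction is what `nextAction` forwards to the content script.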

popup.html: Taking screenshots and displaying them for debugging

The popup is the only visible element of the extension. It also has access to the DOM, which is useful, since we need to take screenshots of the current tab.

Here's the popup code:

<div id="screenshots"></div>

<script>

let mediaStream = null;

async function initializeMediaStream() {

try {

const displayMediaOptions = {

preferCurrentTab: true,

};

mediaStream = await navigator.mediaDevices.getDisplayMedia(displayMediaOptions);

} catch (err) {

console.error('Failed to initialize media stream:', err);

}

}

surflyExtension.runtime.onMessage.addListener((message, sender) => {

if (message.event_type === 'capture_screen') {

captureScreen().then(imageData => {

surflyExtension.runtime.sendMessage({event_type: 'screenshot', image_data: imageData});

});

} else if (message.event_type === 'add_screenshot') {

const screenshotElement = document.getElementById('screenshots');

const screenshot = document.createElement('div');

screenshot.innerHTML = `<img src="${message.image_data}" alt="Screenshot" width="640"><p>${JSON.stringify(message.response)}</p>`;

screenshotElement.appendChild(screenshot);

}

});

async function captureScreen() {

if (!mediaStream) {

// If stream was lost/stopped, try to reinitialize

await initializeMediaStream();

if (!mediaStream) {

throw new Error('Failed to initialize media stream');

}

}

const track = mediaStream.getVideoTracks()[0];

const imageCapture = new ImageCapture(track);

try {

const bitmap = await imageCapture.grabFrame();

const canvas = document.createElement('canvas');

canvas.width = bitmap.width;

canvas.height = bitmap.height;

const context = canvas.getContext('2d');

context.drawImage(bitmap, 0, 0, canvas.width, canvas.height);

return canvas.toDataURL('image/png');

} catch (err) {

mediaStream = null;

throw err;

}

}

</script>

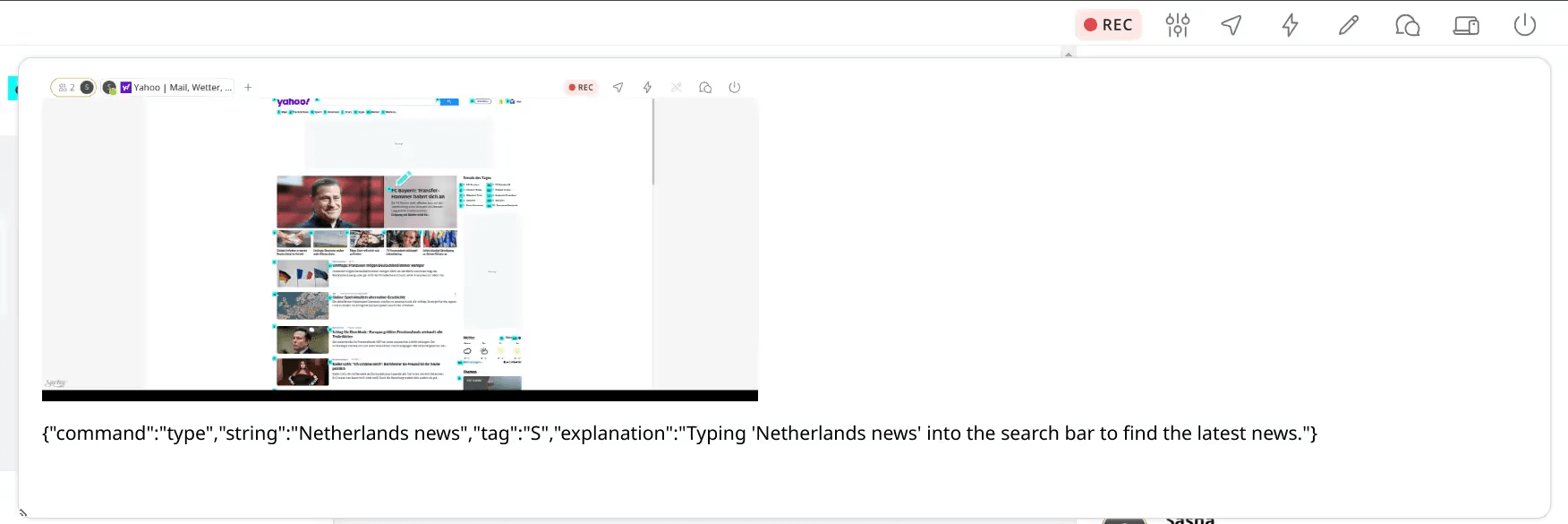

content.js & hints.js: The Content Scripts

The content script runs on the user’s browser and has two primary responsibilities:

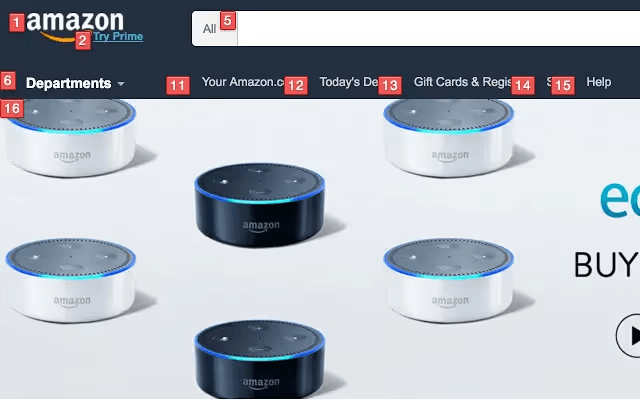

1. Displaying Actionable Hints

We ported KeyJump (a simpler Vimium clone) into Webfuse to display small labels (e.g., a, b, c) over actionable elements like buttons, links, and input fields.
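To give a feel for how such labels can be assigned, here is a minimal sketch of a label generator. It is not KeyJump's actual algorithm, and `hintLabels` is a hypothetical helper. It uses fixed-length labels so that no label is a prefix of another, which keeps typed hints unambiguous.

```javascript
// Generate `count` distinct hint labels of equal length (a, b, c ... or aa, ab, ac ...).
// Equal length means no label is a prefix of another one.
function hintLabels(count, alphabet = 'abcdefghijklmnopqrstuvwxyz') {
  const base = alphabet.length;
  // Smallest label length that yields at least `count` distinct labels.
  let length = 1;
  while (base ** length < count) length++;
  const labels = [];
  for (let i = 0; i < count; i++) {
    // Write i as a base-`base` number, left-padded with the first letter.
    let n = i;
    let label = '';
    for (let pos = 0; pos < length; pos++) {
      label = alphabet[n % base] + label;
      n = Math.floor(n / base);
    }
    labels.push(label);
  }
  return labels;
}
```

For example, `hintLabels(3)` returns `['a', 'b', 'c']`, while a page with 27 actionable elements gets two-letter labels starting at `'aa'`.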

2. Executing Commands

Once the model provides an instruction, a content script injected into the webpage interprets and executes the action. The content script uses JavaScript APIs to perform tasks such as:

- Simulating a keypress for the click action (e.g., pressing "b").

- Focusing on an input element and simulating typing for the input action.

- Triggering scrolling behavior for the scroll action.

- Waiting if a page hasn’t fully loaded.

Handling Additional Information:

When additional input is needed (e.g., selecting a payment method), the agent displays a prompt in the popup extension. The user provides the input, which is passed back to the model for further instructions.

addEventListener("DOMContentLoaded", (event) => {

document.body.focus();

setup();

activateHintMode();

const handlers = {

click: (message) => {

const char = message.tag.toLowerCase();

const event = new KeyboardEvent("keydown", {

key: char,

shiftKey: message.shiftKey,

ctrlKey: message.ctrlKey,

altKey: message.altKey,

metaKey: message.metaKey,

});

handleKeydown(event);

},

type: (message) => {

handlers.click({

tag: message.tag.toLowerCase(),

shiftKey: message.shiftKey,

ctrlKey: message.ctrlKey,

altKey: message.altKey,

metaKey: message.metaKey,

});

window.document.activeElement.value = message.string;

window.document.activeElement.dispatchEvent(

new InputEvent("input", { bubbles: true })

);

document.body.focus();

},

navigate: (message) => {

window.location.href = message.url;

},

scroll: (message) => {

window.scrollBy(0, message.y);

},

wait: (message) => {

setTimeout(() => {

activateHintMode();

}, message.time);

},

finish: (message) => {

surflyExtension.surflySession.apiRequest({cmd: 'send_chat_message', message: `✨ Agent: Finished`});

},

ask: (message) => {

surflyExtension.surflySession.apiRequest({cmd: 'send_chat_message', message: `✨ Agent: Question: ${message.question}`});

},

hints: (message) => {

activateHintMode();

},

};

surflyExtension.runtime.onMessage.addListener((message, sender) => {

if (message?.command in handlers) {

try {

handlers[message.command](message);

} catch (error) {

console.error('Error executing command:', error);

}

} else {

console.log("content script: message not found", message);

}

});

surflyExtension.surflySession.apiRequest({cmd: 'send_chat_message', message: `✨ Agent: Hello! Type /help to see available commands.`});

});
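Because the handlers above act on whatever the model returns, it can help to validate an instruction before dispatching it. The sketch below is one possible guard, not part of the original extension. The field names (`tag`, `string`, `url`, `y`, `time`, `question`) come from the handlers above, while `validateInstruction`, `REQUIRED_FIELDS`, and the expected types are assumptions.

```javascript
// Fields each command needs before it is safe to dispatch.
// Field names mirror the handlers above; the expected types are assumptions.
const REQUIRED_FIELDS = {
  click: { tag: 'string' },
  type: { tag: 'string', string: 'string' },
  navigate: { url: 'string' },
  scroll: { y: 'number' },
  wait: { time: 'number' },
  ask: { question: 'string' },
  finish: {},
  hints: {},
};

// Return { ok: true } for a well-formed instruction, or { ok: false, reason } otherwise.
function validateInstruction(message) {
  if (!message || typeof message.command !== 'string') {
    return { ok: false, reason: 'missing command' };
  }
  // Own-property check, so inherited names such as "toString" are rejected.
  if (!Object.prototype.hasOwnProperty.call(REQUIRED_FIELDS, message.command)) {
    return { ok: false, reason: `unknown command: ${message.command}` };
  }
  for (const [field, type] of Object.entries(REQUIRED_FIELDS[message.command])) {
    if (typeof message[field] !== type) {
      return { ok: false, reason: `${message.command} needs ${type} field "${field}"` };
    }
  }
  return { ok: true };
}
```

Note that the `in` operator used in the listener above also matches inherited properties, so a message with `command: "toString"` would pass that check; an own-property lookup like the one in this sketch avoids that.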

What’s exciting is that this isn’t a one-trick pony — Webfuse extensions can be used to build countless other use cases. Whether it’s automating workflows, improving accessibility, or building intelligent assistants, the possibilities are endless.

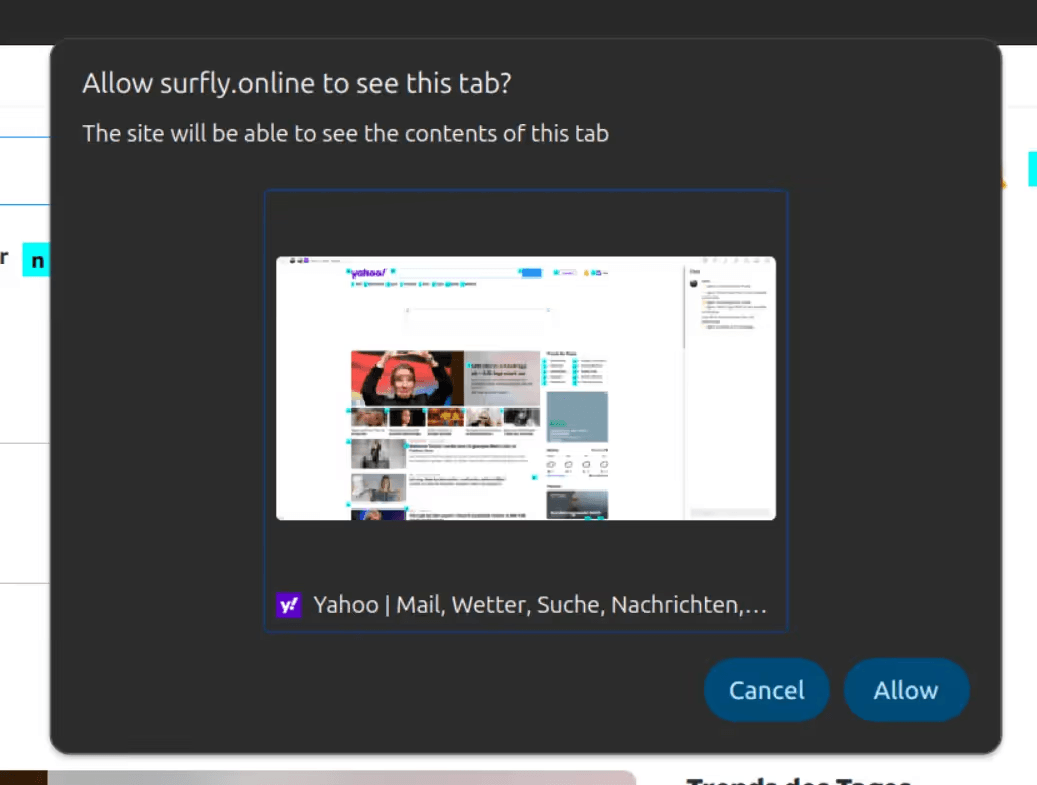

Defining the limitations

While our implementation is functional, it comes with a few challenges:

- Persistent Pop-up — A recurring pop-up appears at the start of each session, requiring an extra click. Worse, it misleadingly states that the user is sharing their tab with Webfuse, which isn’t entirely true.

- Disruptive Hints — Hints are always visible, sometimes covering parts of the page and altering the website experience. This can confuse users, making them unsure whether they’re interacting with the original site.

- API Key Exposure — Since the extension runs in the user’s browser, the OpenAI API key is accessible via developer tools. This is a major security risk, limiting usage to development environments only.

- Cumbersome Interface — We currently rely on a pop-up and a custom start page for user input. However, Webfuse offers powerful APIs — what if we could integrate its chat API for a more seamless, built-in experience?

- Performance Overhead — Running everything in the browser adds extra load. Could we offload some processing elsewhere for better efficiency?

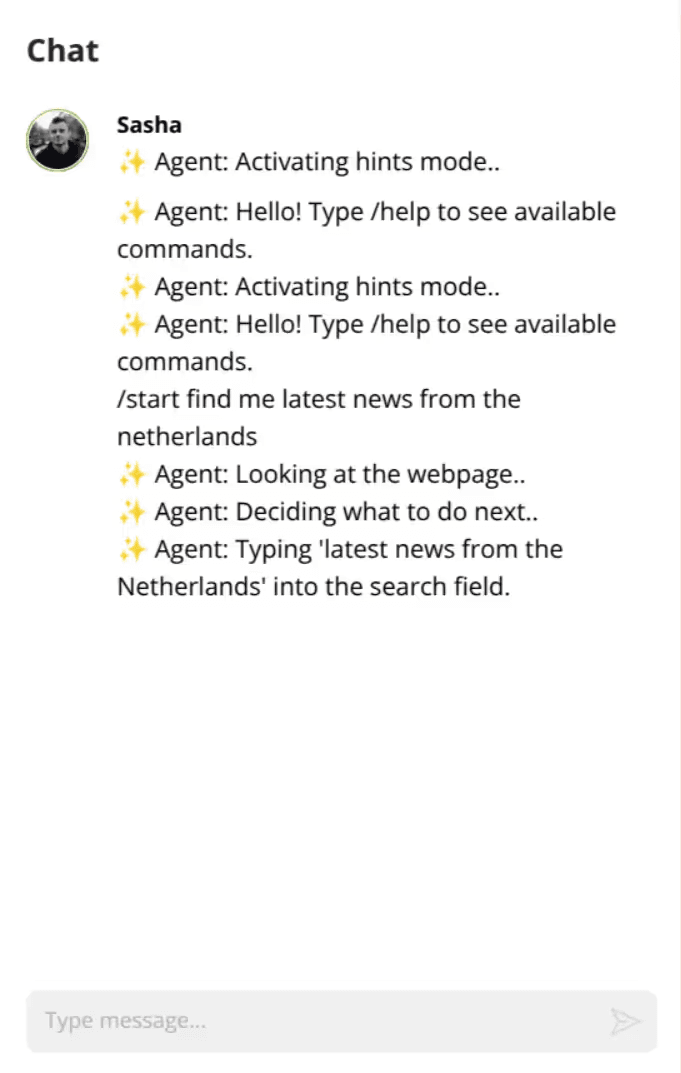

Webfuse Agent (aka Virtual Participant) to the rescue!

Wait a second, who is this Virtual Agent-Participant?

The Webfuse Agent introduces the concept of a “hidden participant”, a feature originally designed for recording sessions. However, we took this idea further. The agent is essentially a standard session participant that joins invisibly, loaded with all the necessary extensions. It can “see” and interact with the same session, just like the user does.

Can we solve all these limitations with a hidden remote participant seamlessly joining the session? Let’s find out.

Here’s what we came up with:

- No More Pop-ups — Instead of capturing screenshots from the user’s browser, we take them from the hidden participant’s side. This eliminates the intrusive pop-up entirely.

- Unobstructed User Experience — Since the agent now handles screenshots, we can also keep hints hidden from the user. The AI still gets the necessary context, while the user enjoys an unaltered, native browsing experience.

- Secured Credentials & Improved Performance: Webfuse provides a special virtualParticipantOnly flag in manifest.json. Enabling it ensures the extension runs only on the remote participant’s side, keeping API keys hidden from the user. It also reduces the load on the user’s browser.

Here is what our updated manifest.json looks like now:

{

"manifest_version": 3,

"name": "Next Remote Agent (Virtual Participant's side)",

"version": "1.0",

"action": {

"default_popup": "popup.html"

},

"background": {

"service_worker": "background.js"

},

"content_scripts": [

{

"js": ["hints.js", "content.js"]

}

],

"virtualParticipantOnly": true

}

- Seamless Chat Control — Instead of relying on pop-ups and custom start pages, we can now leverage Webfuse’s native chat API to manage interactions effortlessly:

Here is a simple code snippet that demonstrates how to use the Webfuse JS API:

function agentMessageHandler(message, sender) {

console.log('[popup.html] agentMessageHandler: message received: ', message);

if (message.event_type === 'chat_message') {

if (message.message[0] === '/') {

const command = message.message.split(' ')[0].slice(1);

const params = message.message.split(' ').slice(1);

switch (command) {

case 'start':

case 'prompt':

case 's':

case 'p':

prompt = params.join(' ');

next();

break;

case 'next':

case 'n':

next();

break;

case 'auto':

case 'a':

autoNext = params[0] === 'on';

break;

case 'screenshots':

case 'ss':

sendScreenshots = params[0] === 'on';

break;

case 'answer':

nextAction(params.join(' '), lastImageData);

break;

}

}

}

}
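The command handling above can also be expressed as a lookup table plus a small parser, which keeps aliases in one place and makes accidental alias clashes easy to spot. This is a sketch of an alternative structure rather than the code we shipped; `ALIASES` and `parseCommand` are hypothetical names.

```javascript
// Map every accepted spelling to one canonical command name.
const ALIASES = {
  start: 'start', prompt: 'start', s: 'start', p: 'start',
  next: 'next', n: 'next',
  auto: 'auto', a: 'auto',
  screenshots: 'screenshots', ss: 'screenshots',
  answer: 'answer',
};

// Parse a chat message such as "/start Book a flight".
// Returns null for plain chat text or for unknown commands.
function parseCommand(text) {
  if (typeof text !== 'string' || !text.startsWith('/')) return null;
  const [head, ...params] = text.trim().split(/\s+/);
  const name = head.slice(1).toLowerCase();
  if (!Object.prototype.hasOwnProperty.call(ALIASES, name)) return null;
  return { command: ALIASES[name], params, rest: params.join(' ') };
}
```

With this in place, the handler can switch on `parsed.command` alone: `parseCommand('/p Order me a pizza')` yields the canonical command `'start'` with `rest` set to `'Order me a pizza'`, and ordinary chat messages return `null`.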

Final architecture with Virtual Participant

Let's put it all together and see how it works end to end:

How the new version works end-to-end

So why not just use a remote browser?

Remote browser tools like Browse.ai, Browserbase.com, or even the new kid on the block, Operator from OpenAI, offer powerful capabilities by running entire browser sessions on remote servers. However, for many use cases, this approach introduces significant drawbacks compared to Webfuse’s hybrid model with its remote agent.

1. Native website experience

With remote browsers, users interact with the website through a streamed session, which often leads to:

- Quality Loss: The website is rendered on the remote server, and the user sees a compressed video stream of it. This can degrade the visual fidelity and responsiveness of the website.

- Increased Latency: Every interaction requires a round trip to the remote server, leading to noticeable delays in browsing or performing tasks.

In contrast, Webfuse loads the original website natively in your browser, so there is no video-stream compression and no extra streaming round trip for each interaction. The website behaves as it would in a regular browsing session.

2. Full control over your data

Remote browser tools process all website interactions on their servers, meaning:

- Your browsing data, session cookies, and interaction logs may be stored or processed on remote servers, potentially compromising your privacy.

- You lose control over the data, even if the tool promises not to save it.

With Webfuse, the website and its state remain in your local browser. The remote agent simply joins as a hidden participant to assist with specific operations, such as offloading screenshots or communicating with APIs. Your data never leaves your local environment unless explicitly intended.

3. Flexible and efficient workflow

By maintaining a local-first approach and offloading only heavy operations to the remote agent, Webfuse offers the best of both worlds:

- The performance of a local browser session.

- The additional power of remote resources without compromising control, speed, or privacy.

Summary

Unlike fully remote browser solutions, Webfuse and its remote agent preserve the core browsing experience while enhancing it with remote capabilities. You remain in control of your data, enjoy a seamless browsing experience without latency, and can trust that sensitive operations stay secure within your environment.

Highlights and surprises

- Solving CAPTCHAs: One of the most impressive moments was watching the agent successfully solve a CAPTCHA — something we hadn’t anticipated.

- Effortless Adaptation of the KeyJump extension: Porting a browser extension to Webfuse without modifying its code highlighted the flexibility and power of Webfuse.

- OpenAI’s Assistants API with GPT-4o couldn’t read text in images in our tests. It took us a few precious hackathon hours to realize this. Still not sure why, though!

What’s next?

We’re already brainstorming ways to take this further. Some ideas include:

- Exposing APIs for more granular actions like clicking, focusing, and typing.

- Potentially using a coordinate-based approach, similar to Anthropic Claude’s Computer Use.

- Making extensions cross-compatible with browser extensions.

- Handling more edge cases (of which there are plenty!).

Source Code

In this version we run all the code in the user’s browser. The end user will have to give permission to use a media input which produces a MediaStream with tracks containing the requested types of media.
